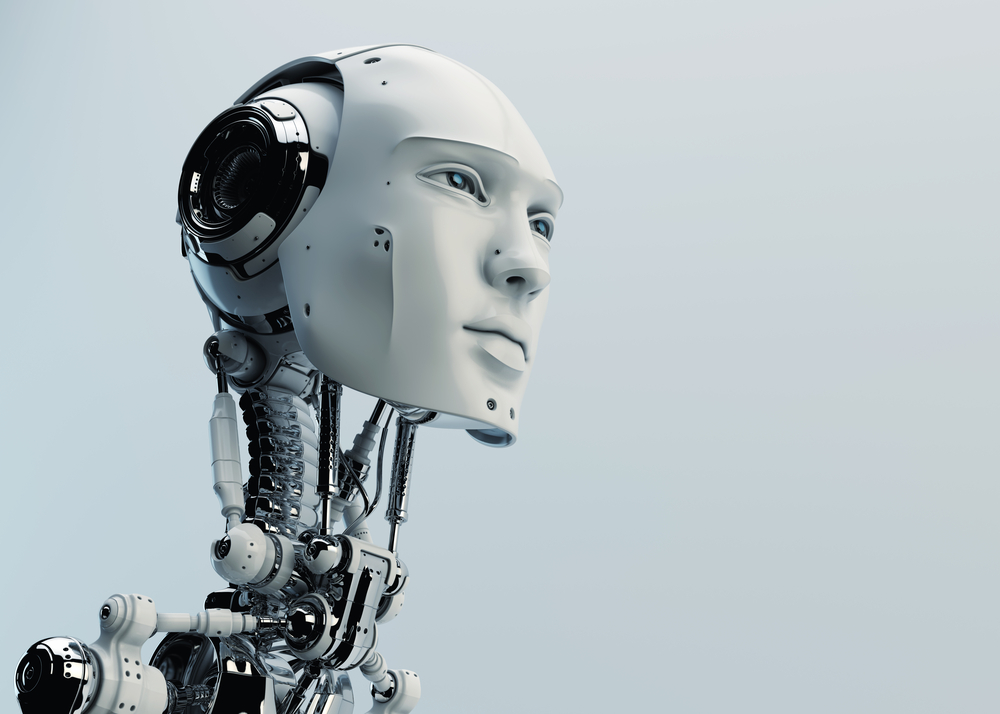

Algocracy would replace politicians with algorithms. Should we try it?

- We are increasingly distrustful of our politicians and are losing confidence in their work. Something needs to change in our politics.

- One suggestion is to introduce an “algocracy” where major governing decisions are steered or even implemented by an algorithm.

- Although the idea has several major problems, perhaps an algocracy is inevitable.

In the 1960s, 77% of Americans trusted the government to do the right thing most of the time. Today, it’s 20%. In the span of one lifetime, our trust in, and respect for, politicians has nosedived. Ironically, in a time of incandescent fury and partisan mudslinging, Republicans and Democrats can agree that government does too little to help “people like you.” We might disagree about a lot, but we’re united in our distrust of The Man.

Clearly, something is broken in our politics. We live in an age of space rockets, nanobots, and CRISPR. The future of science fiction is here. And yet, our political bodies are creaking and straining to keep up. We are using centuries-old systems in a modern, changing world. It’s like having a $2,000 PC and using it to run DOS and play Pong. Surely we can do better.

Algocracy

How could we harness modern technology to improve our politics? What many people don’t appreciate is that much of politics is boring. It involves land registrations, transport timetables, legal minutiae, and sifting through densely populated spreadsheets. Outside of elections and televised speeches, much of the actual work of governing a country is logistics. And if there’s one thing computers do really well, it’s the boring, logistical stuff.

The term “algocracy” has crept into certain strands of political philosophy. It means using computer algorithms, and even blockchain technology, to take over some (possibly all) of the burden of governance: in effect, using technology to run the country. One Deloitte research paper estimates that, at the conservative end, “automation could save 96.7 million federal hours annually, with a potential savings of $3.3 billion.” That’s money that could build houses, improve schools, and save lives.

The idea is that an algocracy could use data from people’s smartphones or computers to make smart, and very quick, governing decisions. We often complain that politicians are “out of touch.” With an algocratic government, however, we’d be constantly wired into government. It’s not quite “digital democracy,” but it is a governing body that knows, instantly and fully, the data that matter.

It can’t be worse than my lot

A body of research suggests that an algocracy is more rational and more efficient than “human bureaucracies,” at least in some political contexts. What’s more, evidence suggests it might even be more popular. In 2021, a team from Spain found that 51% of Europeans were in favor of giving a number of parliamentary seats over to algorithms. Support was highest in Spain, Estonia, and Italy, and lowest in the Netherlands, the UK, and Germany. Interestingly, “China and the US hold opposite views: 75% of the Chinese support [having algorithms replace leaders], 60% of Americans oppose it.”

Another paper noted that the more you distrust government, the happier you are to have a computer do the job. As Spatola and MacDorman put it, “participants who considered political leaders to be unreliable tended to be more accepting of artificial agents as an alternative because they considered them more reliable and less prone to moral lapses.” If you’re dissatisfied with your representative, then how much worse could a computer be?

Algorithmic bias

When we think of algorithms or computational processes, we often think of something like a calculator or a spreadsheet formula: quick, efficient, and frill-free. But when we’re dealing with human data, things get much messier and much less transparent.

There are at least three major problems with an algocracy.

The first is that it’s often unclear how these algorithms are built. Large tech companies or other private firms are usually responsible for programming them, and neither is very open. In fact, they are more often fiercely protective of their code. If we want our democracies to be transparent and easily scrutinized, then having politics run by computer scientists behind closed doors won’t deliver that. As Meredith Broussard puts it in her book, Artificial Unintelligence, “The problems are hidden inside code and data, which makes them harder to see and easier to ignore.”

Second, once someone gets savvy to an algorithm, it’s often not long before they work out how to game it. A paper from the University of Maryland, for instance, revealed how terrifyingly simple it was to fool algorithms at the US patent office: if you want your idea to appear original to an AI, simply add a hyphen. So, if you like, you could patent an “i-Phone” or a “Mc-Donalds” burger.

Third, we are now much more aware that a detached, unbiased algorithm just doesn’t exist. In the 2020 documentary Coded Bias, later released on Netflix, MIT Media Lab researcher Joy Buolamwini showed just how poorly facial recognition technology performed when analyzing Black people’s faces. The film revealed how algorithms can reinforce bias in ways we didn’t foresee. Algorithms run on data, and marginalized, oppressed groups are subject to more data collection (and to particular types of it) than others.

This, in turn, creates a vicious circle that perpetuates the problem. Virginia Eubanks, in her book Automating Inequality, puts it like this:

“Marginalized groups face higher levels of data collection when they access public benefits, walk through highly policed neighborhoods, enter the health-care system, or cross national borders. That data acts to reinforce their marginality when it is used to target them for suspicion and extra scrutiny. Those groups seen as undeserving are singled out for punitive public policy and more intense surveillance, and the cycle begins again. It is a kind of collective red-flagging, a feedback loop of injustice.”

Here to stay

Still, none of these problems is unresolvable. They are not fatal blows to the idea of an algocracy. As with debates about technology more generally, the issues have more to do with the infancy and immaturity of the technologies. Biases can be ironed out and flaws can be fixed. Tech companies can be forced to be more transparent. Over time, algocratic systems will be perfected to the point where they are a viable alternative to our current politicians.

Technology is increasingly encroaching on our society. AI, algorithms, and computers are here to stay, and they’re already an essential part of our leaders’ decision-making processes. Perhaps, then, we’ll simply fall into an algocracy without realizing it.

Jonny Thomson teaches philosophy in Oxford. He runs a popular account called Mini Philosophy and his first book is Mini Philosophy: A Small Book of Big Ideas.