4 ways artificial intelligence is improving mental health therapy

Upended by a global pandemic, the healthcare sector is finding new ways to adapt quickly and safely. For many, technology has been the key.

In the field of mental health, 84% of psychologists who treat anxiety disorders say there’s been an increase in demand for treatment since the start of the pandemic, according to a survey by the American Psychological Association. That’s up from 74% a year earlier.

AI is already used in many industries, and it is becoming clear that its use within mental health services could be a game-changer for providing more effective and personalized treatment plans. The technology not only gives more insight into patients’ needs but also helps develop therapist techniques and training.

Here are four ways that AI has improved mental health therapy.

1. Keeping therapy standards high with quality control

With demand for services rising and workloads stretched, some mental health clinics are investigating automated ways to monitor the quality of their therapists’ work.

The mental health clinic Ieso is using AI to analyze the language used in its therapy sessions through natural-language processing (NLP) – a technique in which machines process human language, here in the form of session transcripts. The clinic aims to give therapists better insight into their work, to ensure high standards of care and to help trainees improve.

Technology firms have taken note and are providing clinics with the tools to better understand the words spoken between therapists and clients. In the UK and US, software company Lyssn provides clinics and universities with a technology designed to improve quality control and training.

2. Refining diagnosis and assigning the right therapist

AI is helping doctors to spot mental illness earlier and to make more accurate choices in treatment plans.

Researchers believe that insights drawn from therapy-session data can help match prospective clients with the right therapists and work out which type of therapy would suit an individual best, leading to more successful sessions.

“I think we’ll finally get more answers about which treatment techniques work best for which combinations of symptoms,” Jennifer Wild, a clinical psychologist at the University of Oxford, told MIT Technology Review.

Furthermore, AI research can refine patient diagnoses into distinct condition subgroups to help doctors personalize treatment.

With AI, therapists can sift through large amounts of data on family histories, patient behaviours and responses to prior treatments to reach a more precise diagnosis and make better-informed decisions about treatment and choice of therapist.

Machine learning – a form of AI in which algorithms learn patterns from data to make decisions – is also being harnessed to identify forms of post-traumatic stress disorder (PTSD) in veterans.

3. Monitoring patient progress and altering treatment where necessary

Once a patient is paired with a therapist, their progress needs to be monitored and improvements tracked. AI can help identify when a change of treatment is needed or when it’s time for a different therapist.

For example, Lyssn uses an algorithm to analyze utterances between therapists and clients, revealing how much of a session is spent on constructive therapy versus general chit-chat so that improvements can be made.
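As a rough illustration of the kind of summary described above, the sketch below totals the share of session time spent in each category of talk. It assumes every utterance has already been labelled and timed. The `Utterance` type, the labels `therapy` and `chit_chat` and the function `talk_time_share` are hypothetical names chosen for this example, not Lyssn’s actual method.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str    # "therapist" or "client"
    label: str      # hypothetical category, e.g. "therapy" or "chit_chat"
    seconds: float  # how long the utterance lasted


def talk_time_share(utterances):
    """Return each label's share of total talk time as a fraction of 1."""
    totals = defaultdict(float)
    for u in utterances:
        totals[u.label] += u.seconds
    session_total = sum(totals.values())
    if session_total == 0:
        return {}  # nothing was said, so there is nothing to summarise
    return {label: secs / session_total for label, secs in totals.items()}


# Illustrative, made-up session data (not taken from any real transcript).
session = [
    Utterance("therapist", "chit_chat", 30.0),
    Utterance("client", "therapy", 90.0),
    Utterance("therapist", "therapy", 60.0),
    Utterance("client", "chit_chat", 20.0),
]
shares = talk_time_share(session)
for label, share in shares.items():
    print(f"{label}: {share:.0%}")
```

In practice the hard part is the labelling itself, which this sketch takes as given.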

The team at Ieso is also looking into utterances during sessions, focusing just on patients rather than the therapists. In a recent paper, the team identified “change-talk active” responses uttered by clients, such as “I don’t want to live like this anymore” and also “change-talk exploration” where the client is reflecting on ways to move forward and make a change.

The team noted that not hearing such statements during a course of treatment would be a warning sign that the therapy was not working. AI transcripts can also open opportunities to study the language used by successful therapists whose clients make such statements, and to use it to train other therapists.
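The warning sign described above can be sketched as a simple check over labelled client utterances. This is only an illustration under stated assumptions: it presumes each client utterance already carries a label, and the label strings, `sessions_without_change_talk` and `lacks_change_talk` are hypothetical names, not the Ieso team’s implementation.

```python
# Hypothetical label names, modelled on the two categories named in the text.
CHANGE_TALK_LABELS = {"change_talk_active", "change_talk_exploration"}


def sessions_without_change_talk(course):
    """Return the indexes of sessions containing no change-talk utterance.

    `course` is a list of sessions; each session is a list of the labels
    assigned to the client's utterances in that session.
    """
    return [
        i for i, labels in enumerate(course)
        if not CHANGE_TALK_LABELS.intersection(labels)
    ]


def lacks_change_talk(course):
    """True if no session in the whole course contains change-talk."""
    return len(sessions_without_change_talk(course)) == len(course)


# Illustrative, made-up course of three sessions.
course = [
    ["other", "other"],
    ["other", "change_talk_exploration"],
    ["other"],
]
print(sessions_without_change_talk(course))
print(lacks_change_talk(course))
```

A flag like this would only prompt a closer look by a clinician. It is not a judgement on the therapy in itself.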

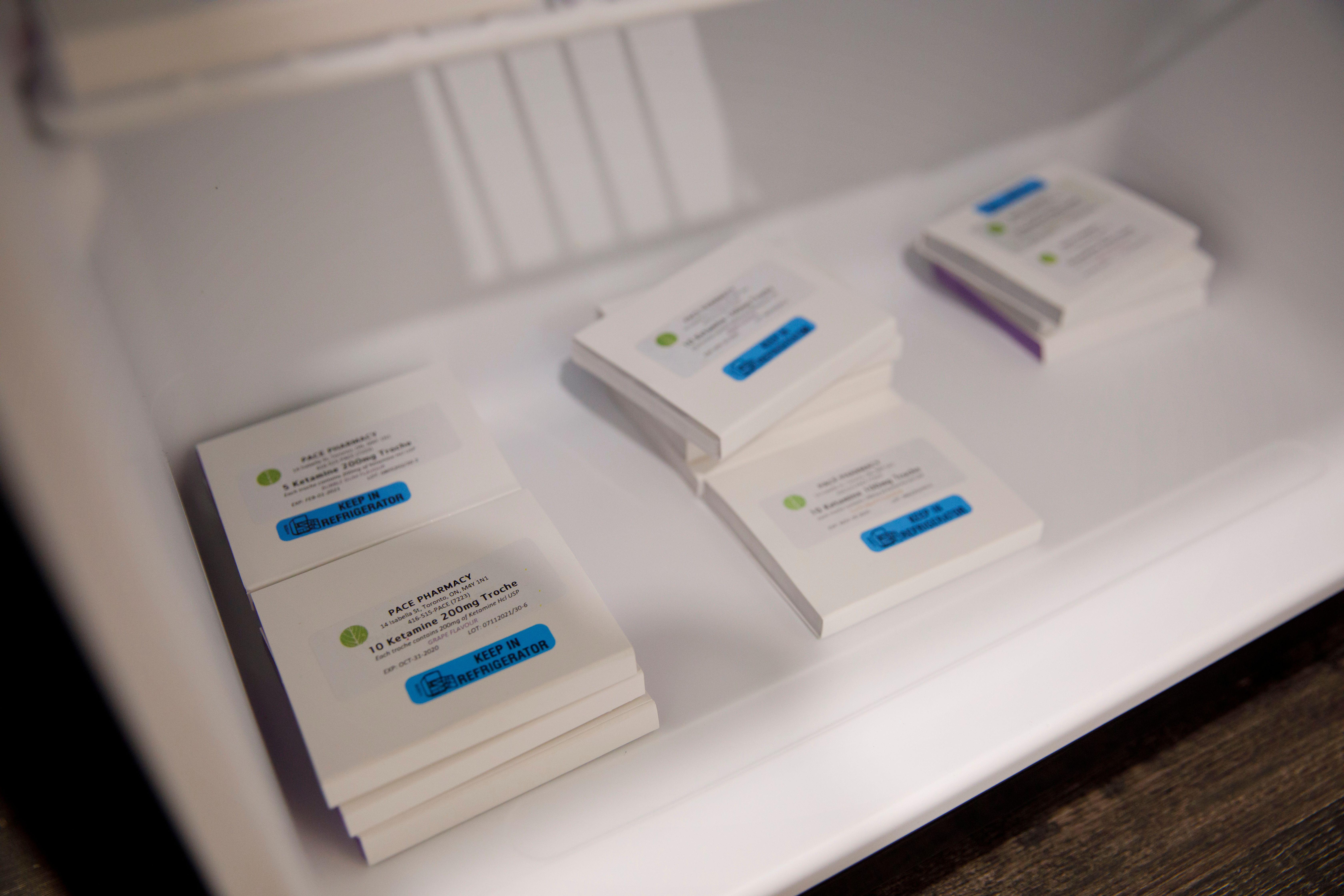

4. Justifying cognitive behavioural therapy (CBT) instead of medication

The use of drugs as a treatment for mental health issues like depression has increased. The number of patients in England who were prescribed antidepressants in the third quarter of 2020-2021 rose 23% compared with the same quarter in 2015-2016, according to the NHS.

However, the UK’s National Institute for Health and Care Excellence (NICE) recently updated its guidelines to encourage the use of CBT before medication for cases of mild depression.

AI can help validate CBT as a treatment, according to researchers from Ieso. In a paper in JAMA Psychiatry, the researchers used AI to discern phrases used in conversations between therapists and patients.

CBT aims to identify negative thought patterns and to find ways to break them, meaning therapists use statements to discuss methods of change and planning for the future. The researchers concluded that higher levels of CBT chat in sessions, as opposed to general chat, correlated with better recovery rates.

Improvements outside of the clinic

Another avenue where AI is improving mental health therapy is wearable technologies.

In conjunction with in-clinic sessions, therapists are using technologies like the Fitbit to find ways to improve treatment. For example, mental healthcare providers can monitor a patient’s sleep patterns with a Fitbit instead of relying on the patient to report them accurately.

The long-term efficacy of AI in mental health therapy is yet to be thoroughly tested, but the initial results appear promising.

While the use of AI within the mental health ecosystem offers opportunities to improve systems, it also opens up the potential for misuse and mistreatment. As a way of guarding against this risk, the World Economic Forum launched a toolkit to provide governments, regulators and independent assurance bodies with the means to develop and adopt standards and policies that address the ethical concerns relating to the use of disruptive technologies in mental health.

“In mental health, trust is more than the mitigation of risks of unethical and malicious uses, it is working with communities to act responsibly,” Stephanie Allen from Deloitte and Arnaud Bernaert, the Head of Global Health and Healthcare at the Forum, wrote in a report. “Not only is this the start of that journey – which will not be easy – but we have a clear medical, moral and economic imperative to do better.”

Republished with permission of the World Economic Forum.