How meaning emerges from matter

- The reductionist view of reality posits that the only phenomena that matter are fundamental particles and their interactions. You are nothing more than an animated pile of carbon atoms.

- Science doesn’t really support this view. For instance, quantum physics has been telling us for a long time that information plays a central role in our understanding of the world.

- Information is inherently meaningful, which suggests that our Universe is built upon meaning.

There is one way to tell the story of the Universe in which meaning doesn’t matter. In this telling, the cosmos begins with the Big Bang and a soup of quantum fields. Each field is associated with a quantum particle. As the Universe expands and cools, these particles combine (or don’t). After a while, you are left mostly with protons, neutrons, electrons, and photons. From then on, the story leads inevitably and inexorably to larger physical structures like galaxies, stars, and planets. On at least one of those planets — Earth — living organisms evolve. Then, in that world and in the heads of one particular kind of creature, neural activity allows for thoughts. Poof! Meaning has appeared.

In this story, meaning is not very important. It’s just an epiphenomenon, an add-on, to all the purely physical and more fundamental things happening with the fundamental particles. It is matter that matters in this tale, not meaning.

I am not satisfied with this story. I think it misses some of the most fundamental aspects of our experience of the world. Just as important, it misses what science has been trying to tell us about us and the world together over the last century. There is, I believe, a very different story we can tell about meaning, and it’s a narrative that can rewire how we think about the Universe and our place in it.

Information has meaning

To unpack these rather lofty claims, let me start with something more narrowly focused. Recently, my colleagues and I (at the University of Rochester, Dartmouth, and the University of Tokyo) began a project to explore the role of what’s called semantic information in living systems.

In the midst of computers and cell phones and a zillion other forms of digital technology, we are all familiar with the idea of information. But these miraculous machines are all based on what’s called syntactic information. “Syntax” is used here because this kind of information begins with ideas about a generalized alphabet and asks about the frequency with which characters from that alphabet appear in possible strings (that is, “words”). This is a complicated way of saying syntactic information is about surprise. A zero appearing in an endless string of zeros would not be very surprising and would carry little syntactic information.
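To make "surprise" concrete, here is a minimal sketch in Python of the standard Shannon quantities: the surprisal of a single character and the average surprisal (entropy) of a string. The function names `surprisal_bits` and `entropy_bits` are my own for illustration, and the sketch assumes character probabilities can simply be estimated from how often each character appears in the string itself.

```python
import math
from collections import Counter


def surprisal_bits(p):
    """Surprise, in bits, of seeing a character that has probability p."""
    # log2(1/p): a certain event (p = 1) carries zero bits of surprise.
    return math.log2(1.0 / p)


def entropy_bits(text):
    """Average surprisal per character, using frequencies observed in text."""
    counts = Counter(text)
    n = len(text)
    # Weight each character's surprisal by how often it appears.
    return sum((c / n) * surprisal_bits(c / n) for c in counts.values())


if __name__ == "__main__":
    # A string of nothing but zeros: no surprise at all.
    print(entropy_bits("00000000"))
    # Zeros and ones equally often: one bit of surprise per character.
    print(entropy_bits("01010101"))
```

Notice that nothing in this calculation asks what the zeros and ones stand for. It counts characters and nothing more.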

What’s explicitly missing from this description of information is meaning. That’s on purpose. Claude Shannon, the genius inventor of modern information theory, intentionally excluded discussion of meaning so he could make progress toward his goal, which was understanding how strings of symbols get pushed through communication channels. But in our lived experience (that’s going to be an important term for us, so let’s hold on to it), we intuitively associate information with meaning. So, syntactic information is about the probability of a particular character appearing in a string of characters, while semantic information is about the meaning those characters convey together.

Information is important to us because it means something. There is, explicitly, something to be known, and there is a knower. Gaining information changes things for us. We know more about the world and, because of knowing, we may behave differently. And what’s true for us is true for all life. In the famous process of chemotaxis, cells move up a gradient of nutrients. The gradient means nothing on its own, but to the cell it represents “sensed” information (“food!”) that has valence — that is, importance.

A theory of semantic information

What my colleagues and I are trying to develop (via funding from the John Templeton Foundation) is a theory of semantic information, much as Shannon developed a theory of syntactic information. The problem, of course, is that “meaning” can be a slippery idea. There is a deep history of trying to understand it in domains like the philosophy of mind and the philosophy of language. While we are keenly interested in the philosophical implications of what we are doing, our job as scientists is to develop a mathematical formalism that can quantify semantic information. And we are doing this based on a beautiful paper by Artemy Kolchinsky (also one of the team leads) and David Wolpert. If we are successful, we might eventually be able to understand how much semantic information there is in any given situation, how it arose, and how much it costs for a system to use (that is, how much energy is associated with the creation, maintenance, and processing of semantic information).

We are just getting started on the work and it’s very exciting. And even though I don’t have any results to tell you about, there is a key aspect of the project which bears, for me at least, on that story I told you about at the beginning of this essay. The most important thing about semantic information, in the theory we’re trying to develop, is distinguishing between the system and the environment. The system could be a cell or an animal or even a social group of animals. We can even go big and think of the system as a city or nation. In all cases, the environment is the “field” from which resources are drawn to maintain the continued existence of the system. In this way, semantic information always comes from the overlap between the system and its environment. For me, thinking about this distinction is where things get freaky and interesting. (I’ll note that my collaborators may not share the perspective I’m about to articulate.)

The fascinating thing about this approach is that it’s not always clear what is the system and what is the environment. The boundaries can be fluid and dynamic, meaning they can change with time. In all cases, there is a way of looking at the problem in which the system and the environment emerge together. This is particularly true if we want to explore the origin of life where the system is explicitly creating itself. From that emergence, or co-creation, comes a very different story about meaning and the cosmos.

The story of a cell

Think about a cell swimming in a bath of chemicals. What makes the cell different from the chemicals? It’s the cell membrane that uses information to decide what to let in and what to keep out. But the membrane must continually be re-created and maintained by the cell from materials in the environment. And yet it’s the membrane which lets the cell decide what constitutes the self (the cell) and what constitutes the outside world (the bath of chemicals).

The bath of chemicals, however, doesn’t know anything on its own. While you and I can imagine the bath with all its different atoms bouncing around and can think of those differences as carrying information, the bath doesn’t distinguish itself. It doesn’t use information at all. Thus, in a very real sense, the bath as a bath, with different resources that can be used or not, comes into existence along with the cell. The two are complementary. The cell brings the bath into being as a bath because the bath is meaningful to it. But the bath allows the cell to come into being as well. In this way, the living organism and the world it inhabits create each other.

Now here is the killer point. I am not saying there is no existence before the cell and bath (that is, the system and environment) emerge together. That would be silly. Something must exist in order for the system/environment emergence to be possible. But the environment as such, as a differentiated environment full of this kind of stuff over here and those kinds of things over there, is always paired to a system that makes such differentiation possible via its use of information. Putting this perspective in human terms, the best way to phrase it might be as follows:

Of course, there is a world without us. It’s just not this one.

This world — the one we live through, make stories about, and do science in — can never be separated from our human being. That might seem like a pretty radical idea, but I think it’s much closer to what we actually experience and how science actually works.

The Blind Spot

Next year, the philosopher Evan Thompson, the physicist Marcelo Gleiser, and I will publish The Blind Spot: Experience, Science, and the Search for Reality. We use the metaphor of the human eye’s “blind spot” as something that both allows vision to work and hides something from vision.

The book’s principal point is that there is a philosophical perspective (a metaphysics) that has been associated with science, but which is different from the process of science itself. What we refer to as “Blind Spot metaphysics” is a constellation of ideas that cannot see the centrality of lived experience. Blind Spot metaphysics holds that science reveals a perfect God’s eye view of the Universe that, in principle, can be completely free of any human perspective or influence. From that God’s eye view, Blind Spot metaphysics claims, we can see that only fundamental particles and their laws really matter. You are nothing but your neurons, and your neurons are nothing but their molecules, and so on, all the way down to a hoped-for “Theory of Everything.” In this way, Blind Spot metaphysics takes the useful scientific process of reduction and turns it into a philosophy: reductionism. In this reductionist story, meaning is nothing but arrangements of charge in a network of neurons in the meat computer that is your brain.

But in the new story I believe we can tell, meaning is really about architectures of semantic information, and that is why there is a world at all. In this new story, there is no such thing as a God’s eye view. Or, if there is such a view, nothing can be said about it because it lies beyond the perspectival structure that is fundamental to actual lived experience (something the field called phenomenology has explored in great detail). The God’s eye view that Blind Spot metaphysics hopes for is just a story we tell ourselves. In truth and in practice, no one ever has had such a view or ever will, because it is, literally, a perspective-less perspective. The philosopher Thomas Nagel called it a “view from nowhere” and it is, literally, meaningless.

So, in the story I believe we can begin formulating now, science is not about reading God’s thoughts or some other version of Platonism. Instead, it’s about unpacking the remarkable dynamics through which system and environment, self and other, agent and world emerge together. It’s a story in which meaning appears as the filigreed, variegated organization of semantic information. That information becomes central to our understanding of ourselves and the Universe because it’s the way to see how that pairing can never be separated.

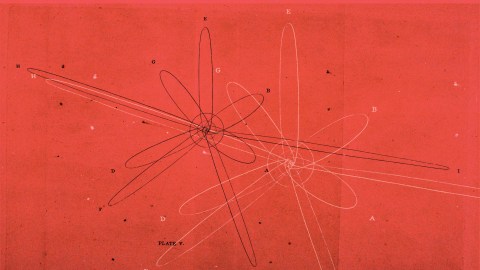

This perspective is not that radical. In many ways, science has been trying to push in this direction for quite a while. If you really want to deal with those fundamental particles that are central to the first story I told you, then you must go through quantum mechanics. But quantum physics puts measurement and information front and center. There is a vigorous ongoing debate about how to interpret that centrality. For quantum interpretations like QBism, the distinction between agent and world becomes a pivot for understanding.

Information and meaning

In the end, this new kind of story, which never lets us push lived experience out of the picture, forces us to a different kind of question about meaning. Rather than ask what meaning is, we must consider where meaning is.

There is an old story about an encounter between Jonas Salk (the inventor of the polio vaccine) and the cyberneticist Gregory Bateson. Bateson asked Salk where the mind was. Salk pointed to his head and gave the reductionist answer, “Up here.” Bateson, who was a pioneer of systems thinking with its emphasis on networks of information flow, swept his arm out in a broad arc, implying, “No, it’s out here.” Bateson was offering a different view of the world, mind, self, and cosmos. In Bateson’s view, all minds are embodied and embedded in dense ecosystems of other living systems beginning with communities of language makers and users and stretching out to the vast microbial environments upon which food webs are grounded.

It is important to note that there is nothing transcendent or “mind-only” in this approach. It’s simply the recognition that what makes life different from other physical systems is its use of information across time. These information architectures, constantly developing, are the result of selection functioning in evolution. As physicist Sarah Walker puts it, “Only in living things do we see path-dependence and mixing of histories to generate new forms; each evolutionary innovation builds on those that came before, and often these innovations interact across time, with more ancient forms interacting with more modern ones.”

Thus, rather than a focus on particles as the only fundamentals, a physics that includes life might take these time-extended information architectures as fundamental too. As Walker suggests, they might be a new kind of “object” that becomes central to a new kind of physics. Such a perspective could take us in some very interesting directions.

We are all rich informational ecologies extending across space and, more importantly, time. The whole of creation, from matter to life and back, is implicated in every one of us, and every one of us is implicated in its structure. And meaning, implicit in matter, is the invisible skeleton supporting it all.