Is It Tough Love Time For Science?

1. Science needs some tough love (fields vary, but some enable and encourage unhealthy habits). And “good cop” approaches aren’t fixing “phantom patterns” and “noise mining” (explained below).

2. Although everyone’s doing what seems “scientifically reasonable,” the result is a “machine for producing and publicizing random patterns,” statistician Andrew Gelman says.

3. Gelman is too kind; the “reproducibility crisis” is really a producibility problem—professional practices reward production and publication of unsound studies.

4. Gelman calls such studies “dead on arrival,” but they’re actually dead on departure, doomed at conception by “flaws inherent in [their] original design” (and much that’s “poorly designed” gets published).

5. Optimists say relax, “science is self-correcting.” For instance, Christie Aschwanden says the “replication crisis is a sign that science is working”; it’s not “untrustworthy,” it’s just messy and hard (it’s “in the long run… dependable,” says Tom Siegfried).

6. “Science Is Broken” folks like Dan Engber ask, “how quickly does science self-correct? Are bad ideas and wrong results stamped out [quickly]… or do they last for generations?” And at what (avoidable) cost?

7. We mustn’t overgeneralize: physics isn’t implicated. Instructively, it’s intrinsically less variable (all electrons behave consistently). Biology and social science aren’t so lucky: People ≠ biological billiard balls.

8. Richard Harris’s book Rigor Mortis argues “sloppy science… wastes billions” (~50% of the US taxpayer-funded biomedical research budget, ~$15 billion squandered).

9. Harris blames ultra-competitive “publish first, correct later” games and heartbreakingly abysmal experimental design that can threaten lives (Gelman concurs: “Clinical trials are broken”).

10. Harris sees “no easy” fix. But a science-is-hard defense doesn’t excuse known-to-be-bad practices.

11. Engber’s “bad ideas and wrong results” are dwarfed by systemic generation-spanning method-level ills. For instance, Gelman calls traditional statistics “counterproductive”—badly misnamed “statistical significance” tests aren’t arbiters “of scientific truth,” though they’re widely used that way.

12. Psychology brought “statistical significance” misuse to light recently (e.g., the TED chart-topping “power pose”), but Deirdre McCloskey declared “statistical significance has ruined empirical… economics” in 1998, and traced concerns to the 1920s. Gelman wants us to “abandon statistical significance.”

13. Yet “noise mining” abounds. Fields with inherent variability, small effects, and noisy measurements drown in datasets with phantom patterns, unrelated to stable causes (see Cornell’s “world-renowned eating… expert”).

14. No “statistical alchemy” (Keynes, 1939) can diagnose phantom patterns. Only further reality-checking can. “Correlation doesn’t even imply correlation” beyond your data. Always ask: Why would this pattern generalize? By what causal process(es)?

15. Basic retraining must emphasize representativeness and causal stability. Neither bigger samples nor randomization necessarily ensures representativeness (see mixed-type stats woes, pattern types).

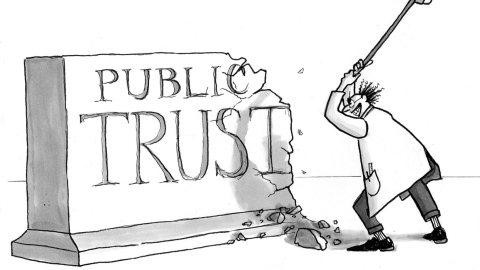

16. Journalism that showcases every sensational-seeming study ill-serves us. Most unconfirmed science should go unreported—media exaggerations damage public trust.

17. Beyond avoidable deaths and burned dollars, there’s a substantial “social cost of junk science” (e.g., enabling the science-is-“bogus” deniers).

18. Great science is occurring, but the “free play of free intellects” game, fun though it is, is far from free of unforced errors.

19. “Saving science” (Daniel Sarewitz) means fixing the game—not scoring points within it.
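For readers who want to see the “noise mining” of points 13 and 14 in action, here is a minimal sketch in Python (standard library only). Everything in it is an illustrative assumption rather than anything taken from Gelman or the studies mentioned above: the sample size of 30, the 200 candidate predictors, the seed, the function names, and the approximate cutoff of |r| > 0.361 (roughly the conventional two-sided p < 0.05 threshold for 30 subjects). Every variable is pure random noise, so any “significant” pattern is a phantom by construction.

```python
import math
import random

N_SUBJECTS = 30      # assumed small-sample study size
N_PREDICTORS = 200   # assumed number of candidate variables "mined"
R_CRITICAL = 0.361   # approx. two-sided p < 0.05 cutoff for |r| when n = 30


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def noise_sample(rng, n):
    """Draw n values of pure Gaussian noise: no signal, no causal process."""
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def significant_predictors(rng, predictor_ids):
    """Run one 'study': return the ids whose noise correlates 'significantly'
    with a noise outcome in a freshly drawn sample."""
    outcome = noise_sample(rng, N_SUBJECTS)
    hits = []
    for pid in predictor_ids:
        predictor = noise_sample(rng, N_SUBJECTS)
        if abs(pearson_r(predictor, outcome)) > R_CRITICAL:
            hits.append(pid)
    return hits


rng = random.Random(2024)  # fixed seed so the run is repeatable

# Study 1: mine all predictors for "significant" correlations.
first_study = significant_predictors(rng, range(N_PREDICTORS))

# Study 2: re-test only the "discoveries" on fresh data (further reality-checking).
replication = significant_predictors(rng, first_study)

print(f"'Significant' in study 1: {len(first_study)} of {N_PREDICTORS}")
print(f"Still 'significant' on fresh data: {len(replication)} of {len(first_study)}")
```

Because the cutoff lets through about 5% of pure-noise correlations, the first study should typically “discover” a handful of patterns, and very few of them should survive the re-test. The statistics never flag the first batch as phantoms; only checking against new data does.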

Illustration by Julia Suits, The New Yorker cartoonist & author of The Extraordinary Catalog of Peculiar Inventions