Why Cosmology’s Expanding Universe Controversy Is An Even Bigger Problem Than You Realize

The Universe is expanding, but different techniques can’t agree on how fast. No matter what, something major has got to give.

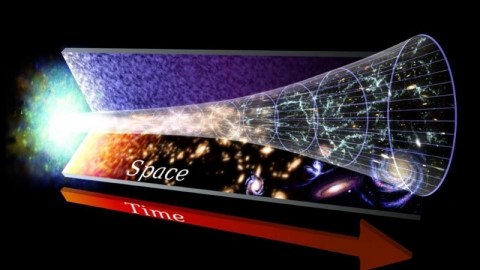

Look out at a distant galaxy, and you’ll see it as it was in the distant past. But light arriving after, say, a billion-year journey won’t come from a galaxy that’s a billion light-years away; it will come from one that’s even more distant than that. Why? Because the fabric of our Universe itself is expanding, and space kept stretching while the light was in transit. This prediction of Einstein’s General Relativity, first worked out theoretically in the 1920s and then observationally validated by Edwin Hubble in 1929, has been one of the cornerstones of modern cosmology.
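To see why a billion-year light-travel time corresponds to a present-day distance greater than a billion light-years, here is a minimal numerical sketch. It assumes a spatially flat universe containing only matter and a cosmological constant (radiation ignored), with illustrative values H_0 = 67 km/s/Mpc and a matter fraction of 0.31. The function names are hypothetical. The code steps outward in redshift, accumulating both the time the light has been travelling and the distance its source is at today, and stops when the travel time reaches one billion years.

```python
import math

C_KM_S = 299_792.458               # speed of light in km/s
PER_GYR_PER_KM_S_MPC = 1.0227e-3   # 1 km/s/Mpc expressed in units of 1/Gyr
MPC_PER_GLY = 306.6                # one billion light-years is about 306.6 Mpc


def hubble_rate(z, h0=67.0, omega_m=0.31):
    """H(z) in km/s/Mpc for an assumed flat matter + cosmological-constant model."""
    omega_lambda = 1.0 - omega_m
    return h0 * math.sqrt(omega_m * (1.0 + z) ** 3 + omega_lambda)


def distances_for_travel_time(travel_gyr, h0=67.0, omega_m=0.31, dz=1e-6):
    """Return (z, light-travel distance in Gly, present-day distance in Gly)
    for light that has been travelling for travel_gyr billion years."""
    z = 0.0
    elapsed_gyr = 0.0
    comoving_mpc = 0.0
    while elapsed_gyr < travel_gyr:
        z_mid = z + 0.5 * dz  # midpoint rule for each small redshift step
        h = hubble_rate(z_mid, h0, omega_m)
        # dt = dz / ((1 + z) H): time the light spends crossing this step
        elapsed_gyr += dz / ((1.0 + z_mid) * h * PER_GYR_PER_KM_S_MPC)
        # dD = c dz / H: contribution to how far away the source is *today*
        comoving_mpc += C_KM_S * dz / h
        z += dz
    # Light-travel distance in Gly is numerically equal to the travel time in Gyr.
    return z, elapsed_gyr, comoving_mpc / MPC_PER_GLY


z, travel_gly, today_gly = distances_for_travel_time(1.0)
print(f"z = {z:.3f}, light-travel distance = {travel_gly:.2f} Gly, "
      f"distance today = {today_gly:.2f} Gly")
```

Under these assumptions the present-day distance comes out a few percent larger than one billion light-years, because the space the light crossed kept expanding behind it.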

The value of the expansion rate, however, has proven more difficult to pin down. If we can accurately measure it, as well as what the Universe is made out of, we can learn a whole slew of vital facts about the Universe we all inhabit. This includes:

- how fast the Universe was expanding at any point in the past,

- how much time has passed since the first moments of the hot Big Bang,

- which objects are gravitationally bound together versus which ones will expand away,

- and what the ultimate fate of the Universe actually is.
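The first two items on that list follow directly once H_0 and the matter content are known. The sketch below assumes a spatially flat universe of matter plus a cosmological constant (radiation neglected, with 0 < Omega_m < 1) and uses the closed-form age for that model; the matter fraction of 0.31 and the function names are illustrative assumptions.

```python
import math

GYR_PER_INVERSE_KM_S_MPC = 977.8  # 1 / (1 km/s/Mpc) expressed in Gyr


def expansion_rate(z, h0, omega_m):
    """H(z) in km/s/Mpc at redshift z for the assumed flat matter + Lambda model."""
    return h0 * math.sqrt(omega_m * (1.0 + z) ** 3 + (1.0 - omega_m))


def age_gyr(h0, omega_m):
    """Time since the Big Bang for the same model, from the closed-form solution
    t0 = 2 / (3 H0 sqrt(Omega_L)) * asinh(sqrt(Omega_L / Omega_m)).
    Requires 0 < omega_m < 1."""
    omega_l = 1.0 - omega_m
    hubble_time_gyr = GYR_PER_INVERSE_KM_S_MPC / h0
    return (2.0 / (3.0 * math.sqrt(omega_l))) * math.asinh(math.sqrt(omega_l / omega_m)) * hubble_time_gyr


for h0 in (67.0, 73.0):
    print(f"H0 = {h0}: H(z=1) = {expansion_rate(1.0, h0, 0.31):.0f} km/s/Mpc, "
          f"age = {age_gyr(h0, 0.31):.1f} Gyr")
```

Holding the matter fraction fixed, the faster expansion rate gives a noticeably younger Universe (roughly 12.8 versus 13.9 billion years here), which is one reason the precise value of H_0 matters so much.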

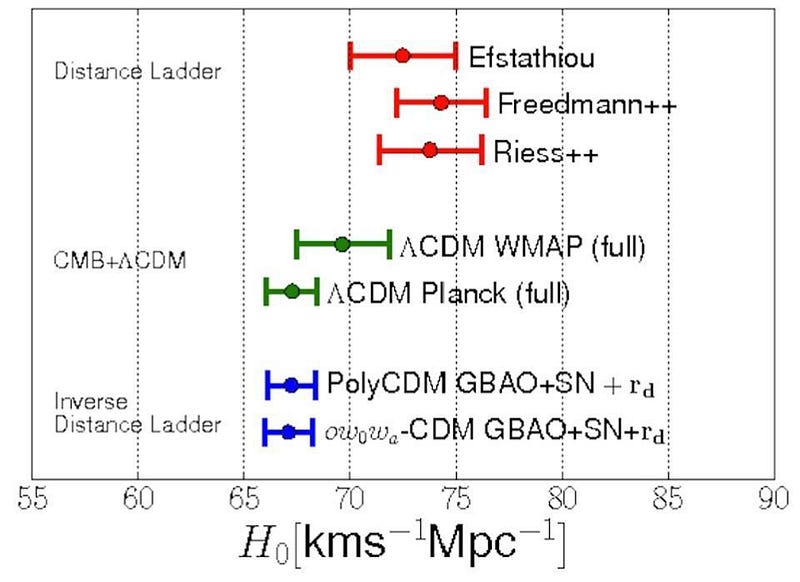

For many years now, a controversy has been brewing. Two different measurement methods, one using the cosmic distance ladder and one using the first observable light in the Universe, give results that are mutually inconsistent. While it’s possible that one (or both) groups are in error, the tension could have enormous implications: it may signal that something is wrong with how we conceive of the Universe.

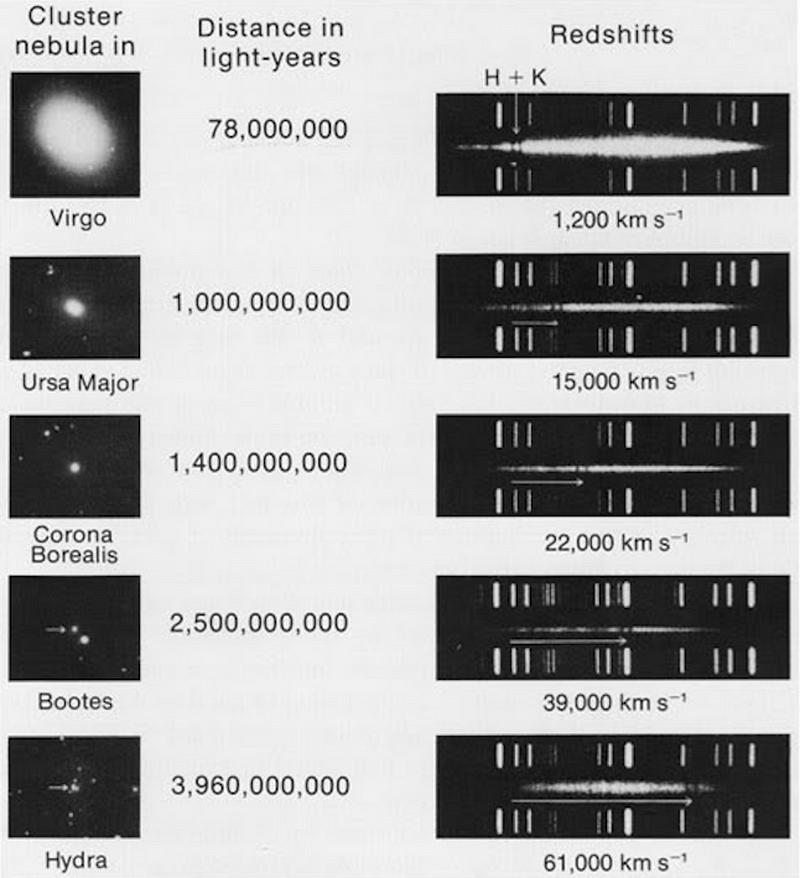

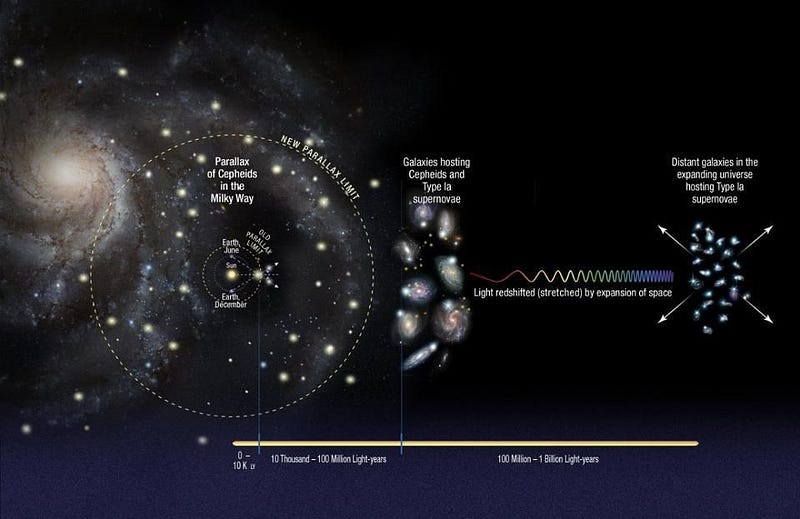

If you want to know how fast the Universe is expanding, the simplest method goes all the way back to Hubble himself. Just measure two things: the distance to another galaxy and how quickly it’s moving away from us. Do that for as many galaxies as you can, across a range of distances, and you can infer the present-day expansion rate of the Universe. In principle, this is extremely simple, but in practice, there are some real challenges.
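Hubble’s procedure amounts to fitting a straight line through the origin, v = H_0 × d. Here is a minimal least-squares sketch of that fit; the data points are invented purely for illustration and the function name is hypothetical.

```python
def fit_hubble_constant(distances_mpc, velocities_km_s):
    """Least-squares slope of v = H0 * d for a line forced through the origin.
    Minimizing sum((v - H0 * d)^2) gives H0 = sum(d * v) / sum(d^2)."""
    if len(distances_mpc) != len(velocities_km_s) or not distances_mpc:
        raise ValueError("need equal-length, non-empty inputs")
    num = sum(d * v for d, v in zip(distances_mpc, velocities_km_s))
    den = sum(d * d for d in distances_mpc)
    return num / den


# Hypothetical measurements, invented for illustration only.
distances = [10.0, 20.0, 40.0, 80.0]          # Mpc
velocities = [720.0, 1380.0, 2830.0, 5570.0]  # km/s
print(f"H0 ≈ {fit_hubble_constant(distances, velocities):.1f} km/s/Mpc")
```

The line is forced through the origin because Hubble’s law has no intercept. A real analysis would also weight each galaxy by its uncertainties and account for the peculiar motions of nearby galaxies, which this sketch ignores.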

Measuring the recession speed is easy: light gets emitted with a specific wavelength, the expansion of the Universe stretches that wavelength, and we observe the stretched light as it arrives. From the amount it’s stretched, we can infer how fast the object is receding. Measuring distance, however, requires knowing something intrinsic about the object we’re observing. Only by knowing how intrinsically bright an object is can we infer, from the brightness we observe, how far away it truly is.
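Both measurements can be sketched in a few lines. The redshift is the fractional stretch of a known spectral line, and the distance follows from inverting the astronomical distance modulus, m − M = 5 log10(d / 10 pc). The example numbers are assumptions chosen for illustration: the hydrogen-alpha line (rest wavelength about 656.28 nm), an invented observed wavelength, and an assumed absolute magnitude of −19.3, roughly typical of a Type Ia supernova at peak.

```python
C_KM_S = 299_792.458  # speed of light, km/s


def redshift(observed_nm, emitted_nm):
    """Fractional stretching of the wavelength: z = (observed - emitted) / emitted."""
    return (observed_nm - emitted_nm) / emitted_nm


def recession_speed_km_s(z):
    """Low-redshift approximation v ≈ c z; only valid for z much less than 1."""
    return C_KM_S * z


def distance_pc(apparent_mag, absolute_mag):
    """Invert the distance modulus m - M = 5 log10(d / 10 pc)."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


# Hypothetical example: hydrogen-alpha observed at 671.0 nm, and a source of
# assumed absolute magnitude -19.3 seen at apparent magnitude 15.7.
z = redshift(671.0, 656.28)
v = recession_speed_km_s(z)
d_mpc = distance_pc(15.7, -19.3) / 1e6
print(f"z = {z:.4f}, v ≈ {v:.0f} km/s, d ≈ {d_mpc:.0f} Mpc, v/d ≈ {v / d_mpc:.1f} km/s/Mpc")
```

The speed half is straightforward; the distance half is only as good as the assumed absolute magnitude, which is exactly where the difficulty lies.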

This is the concept behind the cosmic distance ladder, and it carries real risks. Any errors we make when we infer the distances to nearby galaxies will compound as we go to greater and greater distances. Any uncertainties in inferring the intrinsic brightness of the indicators we observe will propagate into distance errors. And any mistakes we make in calibrating the objects we’re trying to use could bias our conclusions.
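A rough sketch of how errors accumulate along the ladder, assuming each rung contributes an independent, random fractional error (the per-rung values are hypothetical). It also converts a brightness uncertainty in magnitudes into a fractional distance error, using the distance-modulus relation d = 10^((m − M + 5)/5) pc.

```python
import math


def combined_fractional_error(rung_errors):
    """Add independent fractional errors from each rung of the ladder in quadrature."""
    return math.sqrt(sum(e * e for e in rung_errors))


def fractional_distance_error(sigma_mag):
    """A distance-modulus uncertainty sigma_mag becomes a fractional distance
    error of (ln 10 / 5) * sigma_mag, from d = 10^((m - M + 5) / 5) pc."""
    return math.log(10.0) / 5.0 * sigma_mag


# Hypothetical per-rung uncertainties: parallax, Cepheids, supernovae.
rungs = [0.010, 0.015, 0.020]
print(f"combined: {combined_fractional_error(rungs):.2%}")
print(f"0.05 mag -> {fractional_distance_error(0.05):.2%} in distance")
```

Quadrature addition only applies to independent random errors. A calibration bias in an early rung does not average down: it rescales every distance built on top of it, and since H_0 = v/d, a distance scale that is 2% too large yields an H_0 that is about 2% too small.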

In recent years, the most important astronomical objects for this method have been Cepheid variable stars and Type Ia supernovae.

Our accuracy is limited by:

- our understanding of Cepheids, including the relationship between their pulsation period and luminosity,

- which type of Cepheid each star is,

- the parallax measurements to the Cepheids,

- and our knowledge of the environments in which we observe them.
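To make the first and last items concrete, here is a sketch of how a Cepheid’s period becomes a distance via a period-luminosity (Leavitt law) relation of the form M = slope × (log10 P − 1) + zero point. The coefficients below are placeholder values of a plausible size, assumed for illustration and not a real calibration; the extinction term stands in for dust in the star’s environment, and the function names are hypothetical.

```python
import math

# Placeholder period-luminosity coefficients (assumed, NOT a real calibration):
# M = SLOPE * (log10(P / days) - 1) + ZERO_POINT
SLOPE = -2.43
ZERO_POINT = -4.05


def cepheid_absolute_mag(period_days, slope=SLOPE, zero_point=ZERO_POINT):
    """Absolute magnitude predicted from the pulsation period (longer period = brighter)."""
    return slope * (math.log10(period_days) - 1.0) + zero_point


def cepheid_distance_pc(period_days, apparent_mag, extinction_mag=0.0):
    """Distance from the distance modulus, after removing dust extinction (in magnitudes)."""
    mu = apparent_mag - cepheid_absolute_mag(period_days) - extinction_mag
    return 10.0 ** ((mu + 5.0) / 5.0)


# Hypothetical 30-day Cepheid observed at magnitude 25.0, with and without 0.2 mag of dust.
for a_mag in (0.0, 0.2):
    d = cepheid_distance_pc(30.0, 25.0, a_mag)
    print(f"extinction {a_mag} mag -> {d / 1e6:.2f} Mpc")
```

Ignoring 0.2 magnitudes of dust makes the inferred distance about 10% too large (a factor of 10^0.04 ≈ 1.096), and applying coefficients calibrated for a different type of Cepheid would bias every distance in a similar, systematic way.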

While there are still substantial uncertainties we’re working to understand, the best value for the expansion rate from this method, H_0, is about 73 km/s/Mpc, with an uncertainty of less than 3%.

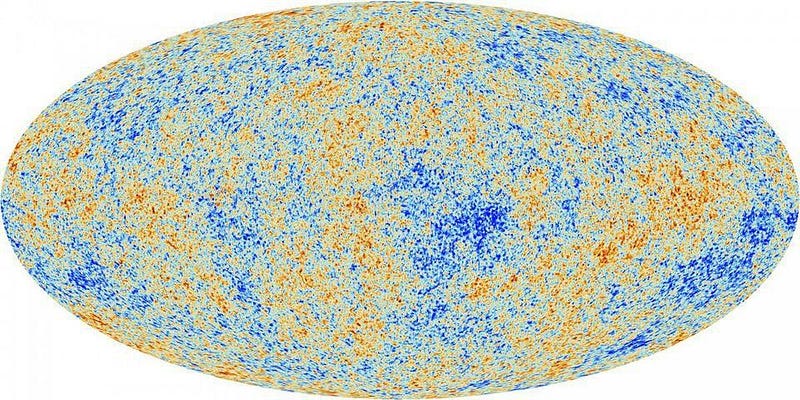

On the other hand, there’s a second method: using the light left over from the Big Bang, which we see today as the Cosmic Microwave Background. The Universe began almost perfectly uniform, with nearly the same density everywhere. However, there were tiny imperfections in the energy density on all scales. Over time, matter and radiation interacted and collided, all while gravitation worked to pull more and more matter into the regions of greatest overdensity.

As the Universe expanded, it cooled, because the radiation within it was redshifted to longer wavelengths. Eventually, it reached a low enough temperature that neutral atoms could form. Once the protons, other atomic nuclei, and electrons became bound into neutral atoms, the Universe became transparent to that light. Because the signal of all those earlier interactions is imprinted on that light, we can use its temperature fluctuations on all scales to reconstruct what the early Universe contained.
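Because radiation temperature scales as T ∝ (1 + z), the cooling described above fixes how much the light has been stretched since it was released. A minimal sketch, assuming the commonly quoted, rounded figure of about 3000 K for the temperature at which neutral atoms formed, together with today’s measured CMB temperature of about 2.7255 K:

```python
T_CMB_TODAY_K = 2.7255   # measured CMB temperature today
T_DECOUPLING_K = 3000.0  # approximate temperature when neutral atoms formed (rounded assumption)


def redshift_from_temperature(t_then_k, t_now_k=T_CMB_TODAY_K):
    """Radiation temperature scales as T ∝ (1 + z), so 1 + z = T_then / T_now."""
    return t_then_k / t_now_k - 1.0


z_dec = redshift_from_temperature(T_DECOUPLING_K)
print(f"z ≈ {z_dec:.0f}: wavelengths have been stretched by a factor of about {1 + z_dec:.0f}")
```

The result, a redshift of roughly 1100, is why light that left the early Universe in the visible and near-infrared reaches us today as microwaves.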

These fluctuations have been measured with extraordinary precision, allowing us to infer both what the Universe is made out of and how quickly it’s expanding. While the headline conclusion is usually that our Universe is rich in dark matter and dark energy, we learn the expansion rate as well: H_0 = 67 km/s/Mpc, with an uncertainty of only about ±1 km/s/Mpc.
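How far apart are the two results? A rough sketch, assuming both measurements are independent with Gaussian errors and using the rounded numbers quoted above. Because the distance-ladder uncertainty is stated only as “less than 3%”, taking exactly 3% gives a lower bound on the tension.

```python
import math


def tension_sigma(value_a, sigma_a, value_b, sigma_b):
    """Difference between two independent Gaussian measurements, in units of their combined error."""
    return abs(value_a - value_b) / math.hypot(sigma_a, sigma_b)


ladder_h0, ladder_err = 73.0, 0.03 * 73.0  # "less than 3%": use 3% as an upper bound on the error
cmb_h0, cmb_err = 67.0, 1.0

print(f"fractional difference: {(ladder_h0 - cmb_h0) / cmb_h0:.1%}")
print(f"tension: at least {tension_sigma(ladder_h0, ladder_err, cmb_h0, cmb_err):.1f} sigma")
```

With these rounded inputs the two values differ by about 9%, and the gap is at least roughly two and a half times their combined uncertainty; a smaller distance-ladder error would push that figure higher.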

This is, potentially, a very big problem. There are several possible resolutions. One group may have a systematic error it hasn’t accounted for. Something different may be going on in the distant Universe than in the nearby Universe, meaning both groups are correct. Or the true answer may lie somewhere in the middle. But if it’s the results from the distant Universe that are incorrect, we’re in a lot of hot water.

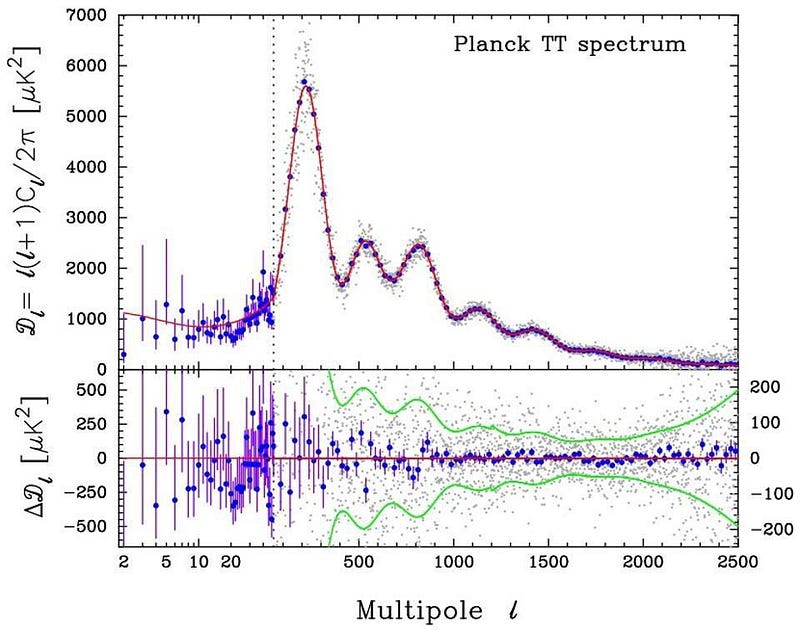

The cosmic microwave background contains an incredible amount of information. Since the Planck satellite released its first results, we’ve been able to extract a tremendous amount of that information. Fortunately (or unfortunately, depending on how you look at it), many of the extracted parameters that have wiggle room are tied to other parameters that can be constrained by other means.

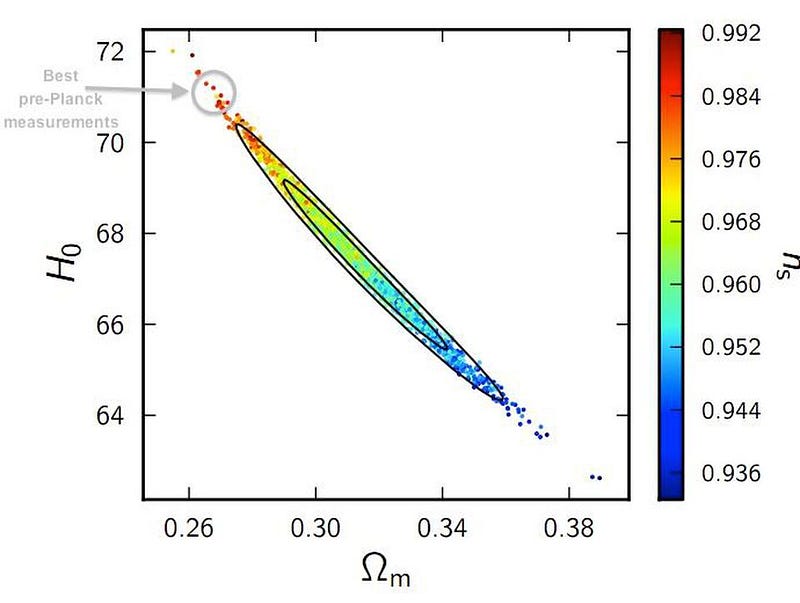

The Hubble constant, the matter density, and the scalar spectral index (which describes how the strength of the initial overdensities and underdensities in the Universe varies with scale) are one example of such a set of related parameters. The problem is that you can’t change one without changing the others.

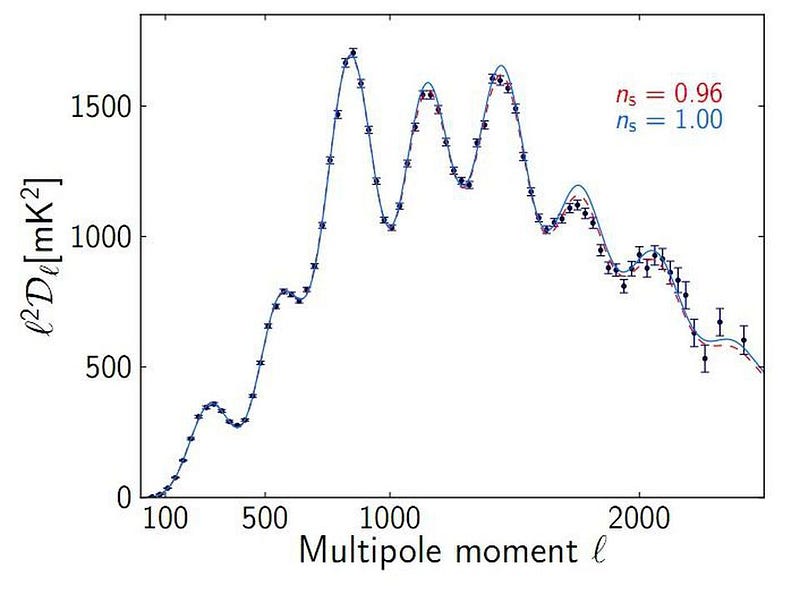

We also have very accurate measurements of these parameters from sources other than the cosmic microwave background alone. Baryon acoustic oscillations and the large-scale structure of the Universe, for example, place very tight constraints on both the matter density and the scalar spectral index: the matter density must lie between about 28% and 35%, and the spectral index must be about 0.968 ± 0.010.

But if the Planck team is wrong about the expansion rate of the Universe and the distance ladder team is correct, then the Universe would have too little matter (to the tune of about 25%) and too high a spectral index (about 0.995) to be consistent with observations. The spectral index, in particular, would be in severe conflict: that seemingly tiny difference, from, say, 0.96 to 1.00, is irreconcilable with the data.

The question of how quickly the Universe is expanding has troubled astronomers and astrophysicists ever since we first realized that cosmic expansion was a necessity. While it’s incredibly impressive that two completely independent methods yield answers that agree to within 10%, the fact that they don’t agree with each other within their uncertainties is a serious concern.

If the distance ladder group is in error, and the expansion rate truly is on the low end, near 67 km/s/Mpc, everything else could fall into line. But if the cosmic microwave background group is mistaken, and the expansion rate is closer to 73 km/s/Mpc, we may well have a crisis in modern cosmology.

The Universe cannot have the dark matter density and initial fluctuations that such a value would imply. Until this puzzle is resolved, we must remain open to the possibility that a cosmic revolution is on the horizon.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.