The Brightest Supernovae Of All Have A Suspiciously Common Explanation

All supernovae are not created equal. After a 14-year investigation, the brightest ones have a surprising explanation.

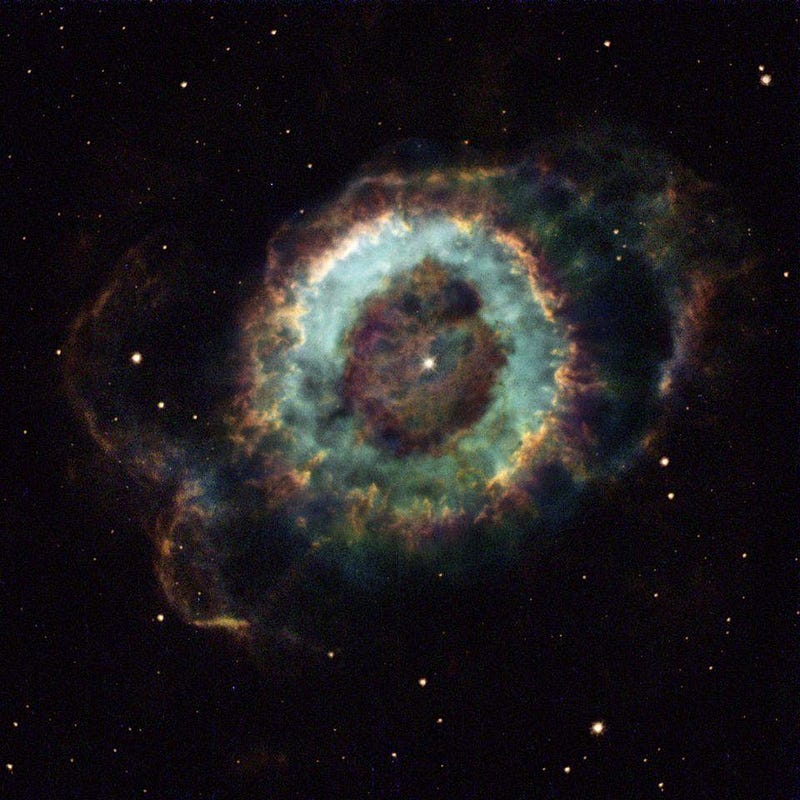

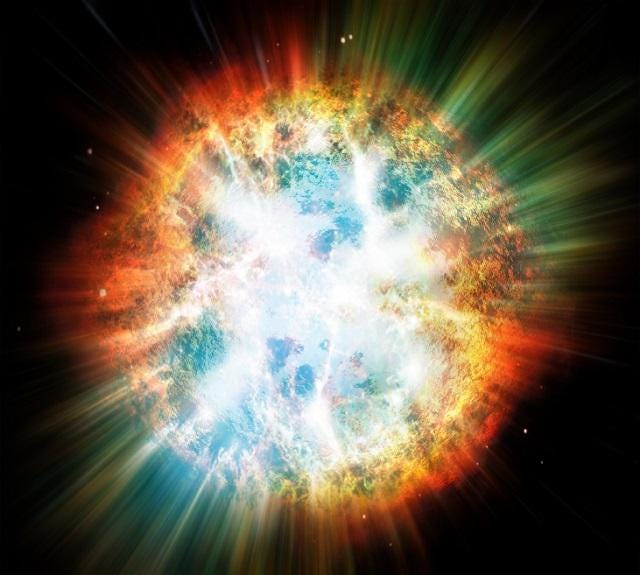

In 2006, astronomers witnessed a supernova that defied conventional explanation. Typically, supernovae arise either from the collapse of a massive star’s core (type II) or from a white dwarf that’s accumulated too much mass (type Ia), and in either case they can reach a peak brightness that’s some 10 billion times as luminous as our own Sun. But this one, known as SN 2006gy, was superluminous, radiating 100 times more energy than normal.

For more than a decade, the leading explanation was thought to be the pair-instability mechanism, where energies inside the star rise so high that matter-antimatter pairs are spontaneously produced. But in a new detailed analysis, published in the January 24, 2020 issue of Science magazine, scientists reached a shocking conclusion: this was probably a fairly typical type Ia supernova simply occurring under odd conditions. Here’s how they got there.

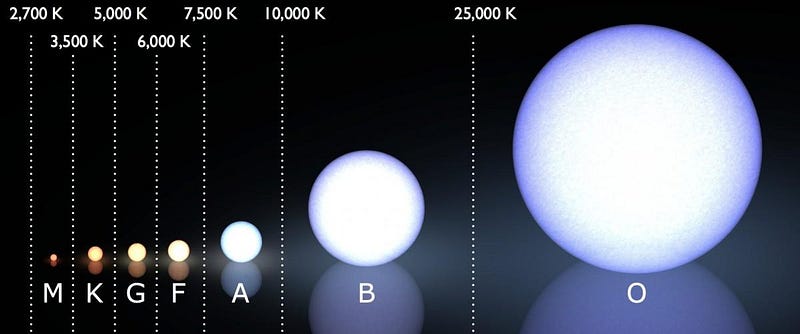

Although stars might seem like they’re incredibly complicated objects, with gravity, nuclear fusion, complex fluid flow, energy transport, and magnetized plasmas all playing a role, their life cycles and fates typically boil down to just one major factor: the mass they’re born with. When a cloud of gas that’s collapsed under its own gravity becomes dense, hot, and massive enough, it ignites nuclear fusion in its core, beginning with a chain reaction that fuses hydrogen into helium.

The more massive a star is, the larger and hotter the region of the core where fusion occurs will be. It’s no surprise, then, that the coolest, lowest-mass stars in the Universe, including red dwarfs like Proxima Centauri, emit less than 0.2% the light of our Sun and can take trillions of years to burn through their fuel. On the other end of the spectrum, the most massive known stars, hundreds of times as massive as our Sun, can be millions of times as luminous and will burn through their core’s hydrogen in just 1 or 2 million years.
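
To get a feel for how strongly mass controls brightness and lifetime, here is a minimal sketch using textbook scaling relations. These are assumptions brought in for illustration, not figures from the study: luminosity is taken to scale as mass to the 3.5 power, the Sun's hydrogen-burning lifetime is taken as roughly 10 billion years, and a star of about 0.12 solar masses stands in for Proxima Centauri.

```python
# Rough main-sequence scaling relations (textbook approximations, NOT from the article).
# Assumptions: L ~ M**3.5 and lifetime ~ (10 billion years) * M / L, all in solar units.

SUN_LIFETIME_YEARS = 1.0e10   # assumed hydrogen-burning lifetime of the Sun
LUMINOSITY_EXPONENT = 3.5     # assumed mass-luminosity exponent


def luminosity_solar(mass_solar: float) -> float:
    """Approximate luminosity in units of the Sun's luminosity."""
    return mass_solar ** LUMINOSITY_EXPONENT


def lifetime_years(mass_solar: float) -> float:
    """Approximate lifetime: available fuel (mass) divided by burn rate (luminosity)."""
    return SUN_LIFETIME_YEARS * mass_solar / luminosity_solar(mass_solar)


if __name__ == "__main__":
    # 0.12 solar masses is an assumed, approximate value for a Proxima-like red dwarf.
    for mass in (0.12, 1.0, 10.0):
        print(f"M = {mass:5.2f} Msun   L ~ {luminosity_solar(mass):.3g} Lsun   "
              f"lifetime ~ {lifetime_years(mass):.3g} yr")
```

Under these assumptions, the red dwarf comes out far below 0.2% of the Sun's brightness with a lifetime in the trillions of years, in line with the figures above. The simple power law should not be pushed to stars hundreds of times the Sun's mass, where the real mass-luminosity relation flattens and lifetimes level off at a few million years.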

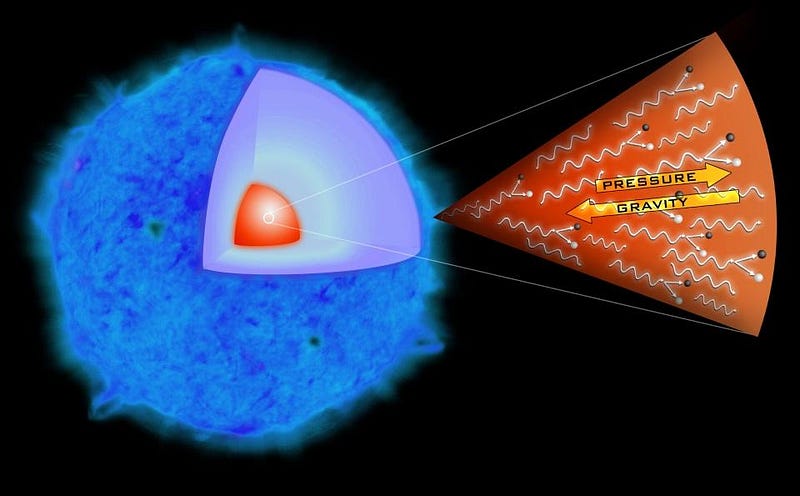

When the core of a star runs out of hydrogen, the radiation pressure that was produced by fusion begins to drop. This is bad news for the star in some sense, as all that radiation was necessary to hold the star up against gravitational collapse. Depending on how quickly the star contracts for its mass, and how slowly the heat is able to escape through the outer layers, this contraction makes the core heat up; if the temperature crosses a particular threshold, new elements can begin fusing.

Red dwarf stars never get hot enough to fuse anything beyond hydrogen, but Sun-like stars will heat up to fuse helium in their core, while the outer layers are pushed outward to turn the star into a red giant. When Sun-like stars, which represent all stars between about 40% and 800% the mass of our Sun, run out of helium fuel, their cores will contract down into white dwarfs made largely of carbon and oxygen, while their outer layers get blown off into the interstellar medium.

Meanwhile, the most massive stars will have their cores contract down to such high temperatures that carbon — the end result of helium fusion — can begin fusing into heavier elements still. In a sequence, carbon fusion will give way to stars fusing neon, oxygen, and eventually silicon and sulfur, leading to a core that’s rich in iron, nickel, and cobalt. Those elements are the end of the line, and when silicon and sulfur fusion end, the core collapses and a type II supernova occurs.

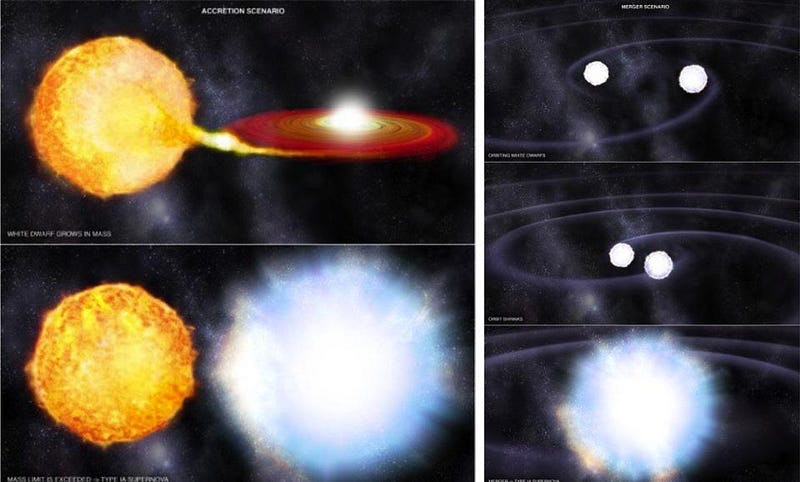

On the other hand, stars that end their lives as white dwarfs will get a second chance: if they either accrete enough mass or merge with another object, they can cross a critical threshold that will lead to a different class of supernova known as a type Ia supernova. All supernovae are thought to arise from one of these two mechanisms, with the only differences dependent on which elements are either present, absent, or were once present but were later stripped from the star at some point in the past.

When it comes to the specific case of superluminous supernovae, such as SN 2006gy, many scenarios have been envisioned to explain them. SN 2006gy was initially touted as the brightest stellar explosion ever seen, though numerous others seen this century have rivaled or even exceeded it. Despite its extreme brightness, it was still classified as a type II supernova due to the hydrogen spectral lines observed in its light. At just 238 million light-years away, SN 2006gy is the closest superluminous supernova ever seen.

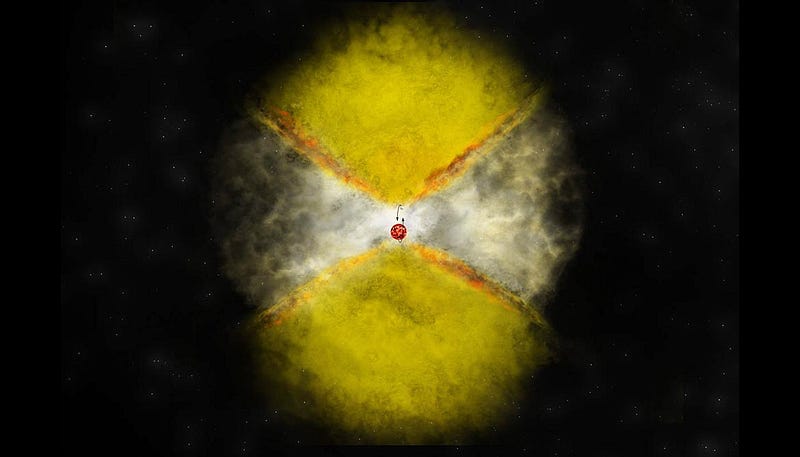

Prior ideas all involved a very massive star that had already experienced eruptive events that created a large amount of material around the star, similar to what’s occurring in our own galaxy with Eta Carinae. A luminous blue variable could have ejected such material, as could a star that pulses due to an intrinsic variation. But traditionally, the most conventional explanation for a cataclysm like this has been the pair-instability mechanism.

The idea of the pair-instability mechanism is that the energies inside the core of a star rise so high that individual photons, and collisions between particles, carry enough energy, E, to produce new particle-antiparticle pairs of electrons and positrons (of combined mass m) through Einstein’s famous mass-energy equivalence relation: E = mc².

When particle-antiparticle pairs get produced, the radiation pressure drops, causing the core to contract and heat up further, which in turn causes more particle-antiparticle pairs to get produced, which drops the pressure further, etc. In short order, a runaway fusion reaction occurs, and the entire star is torn apart in an enormous explosion.
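
As a rough worked example of the threshold involved, the sketch below evaluates E = mc² for one electron plus one positron. The constants are standard SI values rather than numbers from the study, and converting that energy to a temperature by dividing by Boltzmann's constant is only an order-of-magnitude illustration, not a stellar model.

```python
# Threshold energy for creating an electron-positron pair via E = m c^2.
# Constants are standard SI values (not taken from the article).
# The temperature conversion T = E / k_B is an order-of-magnitude illustration only.

ELECTRON_MASS_KG = 9.1093837015e-31
SPEED_OF_LIGHT_M_S = 299_792_458.0
JOULES_PER_MEV = 1.602176634e-13
BOLTZMANN_J_PER_K = 1.380649e-23


def pair_threshold_joules() -> float:
    """Rest-mass energy of one electron plus one positron (combined mass m = 2 * m_e)."""
    combined_mass_kg = 2.0 * ELECTRON_MASS_KG
    return combined_mass_kg * SPEED_OF_LIGHT_M_S ** 2


def equivalent_temperature_kelvin(energy_joules: float) -> float:
    """Temperature at which the thermal energy scale k_B * T equals the given energy."""
    return energy_joules / BOLTZMANN_J_PER_K


if __name__ == "__main__":
    energy = pair_threshold_joules()
    print(f"Pair threshold: {energy:.4g} J = {energy / JOULES_PER_MEV:.4g} MeV")
    print(f"Equivalent temperature: {equivalent_temperature_kelvin(energy):.3g} K")
```

The threshold works out to about 1 MeV, which corresponds to a temperature scale of roughly ten billion kelvin. Pair production is generally expected to matter at somewhat lower core temperatures than this naive estimate suggests, since the most energetic photons in a hot gas carry far more than the average energy.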

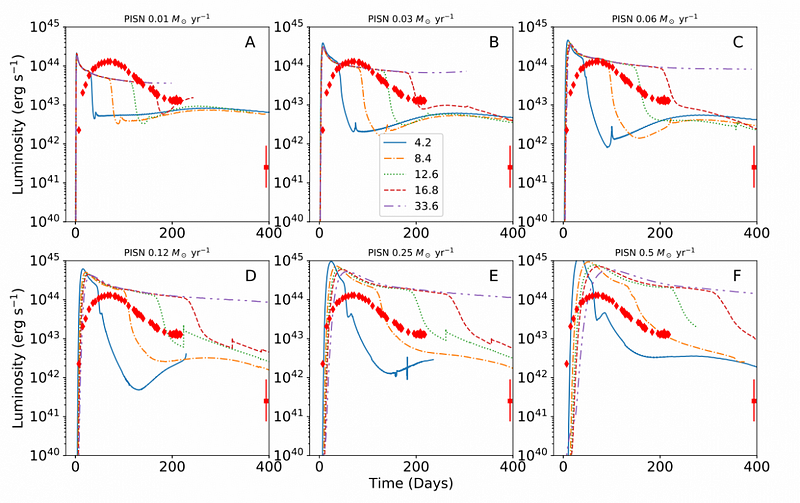

Until this year, the pair-instability mechanism was the leading idea for explaining superluminous supernovae. But in a new paper, Anders Jerkstrand, Keiichi Maeda, and Koji S. Kawabata showed that the pair-instability mechanism would have led to a light curve that failed to match the actual observations.

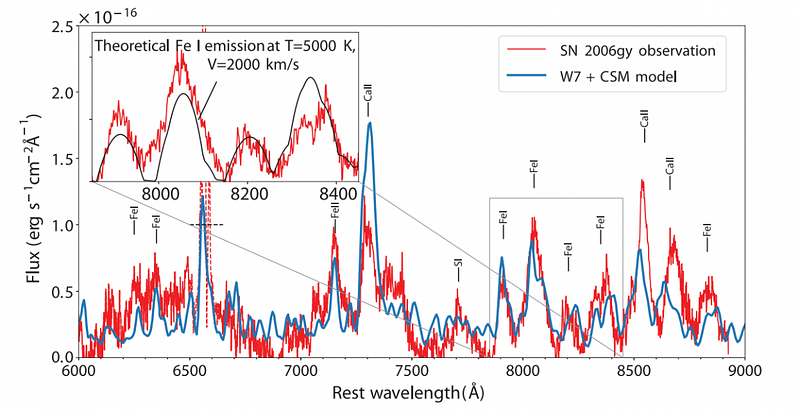

What the authors noted, though, was remarkable: a little more than a year after the initial explosion, when the light had dimmed to be just a fraction of the brightness of one of the more typical supernovae, about half a solar mass’s worth of radioactive nickel had decayed into iron, and that enormous amount of iron was showing up in the spectral light of the supernova remnant at around 800 nanometers in wavelength.
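
To see why nearly all of that nickel would be iron after a little more than a year, here is a minimal sketch of the decay chain. It relies on details not spelled out above, so treat them as assumptions: nickel-56 decays to iron-56 by way of cobalt-56, with half-lives of roughly 6.1 and 77.2 days, and 400 days is picked as an illustrative stand-in for "a little more than a year."

```python
import math

# Assumed half-lives (approximate laboratory values, NOT quoted in the article).
NI56_HALF_LIFE_DAYS = 6.075
CO56_HALF_LIFE_DAYS = 77.24


def decay_chain_fractions(days: float) -> tuple:
    """Return (nickel, cobalt, iron) as fractions of the initial nickel-56 after `days`."""
    lam_ni = math.log(2) / NI56_HALF_LIFE_DAYS
    lam_co = math.log(2) / CO56_HALF_LIFE_DAYS
    nickel = math.exp(-lam_ni * days)
    # Bateman solution for the intermediate isotope in a two-step decay chain.
    cobalt = lam_ni / (lam_co - lam_ni) * (
        math.exp(-lam_ni * days) - math.exp(-lam_co * days)
    )
    # Whatever is no longer nickel or cobalt has become stable iron.
    iron = 1.0 - nickel - cobalt
    return nickel, cobalt, iron


if __name__ == "__main__":
    initial_nickel_solar_masses = 0.5  # "about half a solar mass" from the text
    nickel, cobalt, iron = decay_chain_fractions(400.0)  # 400 days is an illustrative choice
    print(f"Iron fraction after 400 days: {iron:.3f}")
    print(f"Iron mass: {iron * initial_nickel_solar_masses:.3f} solar masses")
```

With these assumed half-lives, about 97% of the original nickel has become iron by day 400, so half a solar mass of nickel leaves nearly half a solar mass of iron to show up in the spectrum.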

Such an emission feature had never been seen before, and certainly wasn’t anticipated. A detailed breakdown of the spectrum revealed not only iron, but also the heavy elements sulfur and calcium, indicating that a large amount of mass must have existed in the region of space surrounding the star before it went supernova. Something must have ejected a large amount of this heavy element in its neutral (un-ionized) state, which seems to fit the idea of an earlier, recent phase of silicon-burning.

The fact that there’s no neutral oxygen, coupled with the insufficiency of a pair-instability solution to match the light curve, leaves only one viable possibility: a type Ia supernova, ignited by a white dwarf star, could have exploded and broken through a shroud of enriched circumstellar material.

Although these spectral features, on their own, could be explained either by an exploding white dwarf or a pair-instability supernova surrounded by a large amount of circumstellar material, the combination of this data with the observed light curve in its earlier phases rules out the pair-instability scenario, leaving only a detonating white dwarf as the culprit.

As the authors note, the idea that a type Ia supernova could have detonated and been responsible for SN 2006gy is a very old one, but simply fell out of fashion as ultra-massive progenitor stars were what most analyses chose to focus on.

If the authors’ conclusion is correct, it means that this material surrounding the superluminous supernova was ejected between one decade and two centuries before the supernova explosion, and that the very massive star at the core of this system — likely a giant or supergiant star — must have had a white dwarf companion, which could only have been created if it entered the giant phase first, and had its outer material stripped away by its massive partner.

What still isn’t understood is how the two cores of the two separate stars merge and explode. As the authors note:

> These steps are rarely explored in inspiral simulations, because of computational difficulties, although some results have shown that less-evolved giants merge more easily. Material may also form a disk around the two cores that could drive the final stages of merging.

Either way, this represents a new step forward towards understanding the most energetic stellar cataclysms in the Universe: superluminous supernovae. Even though hydrogen was present in narrow lines, leading to an initial classification as a type IIn supernova, the full suite of data is better fit by a white dwarf core merging with a giant or supergiant’s core, with the supernova’s ejecta crashing into a large amount of circumstellar material that had been previously ejected.

We’ve learned a whole lot from SN 2006gy, the closest superluminous supernova. Many others with similar properties have been seen, but none were close enough for iron lines to be detected so long after the initial explosion took place. Is a white dwarf merging with a giant or supergiant core the way all superluminous supernovae are created? Or is SN 2006gy rare, or do we perhaps even have it wrong after all? Whatever the case, we’re one step closer to understanding what causes the most energetic stellar cataclysms ever seen in the Universe.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.