Scientists Can’t Agree On The Expanding Universe

It’s either a cosmic mystery or a terribly mundane mistake.

The Universe is expanding, and every scientist in the field agrees with that. The observations overwhelmingly support that straightforward conclusion, and since the late 1920s every alternative has failed to match its successes. But in science, success cannot simply be qualitative; we need to understand, measure, and quantify the Universe's expansion. We need to know how fast the Universe is expanding.

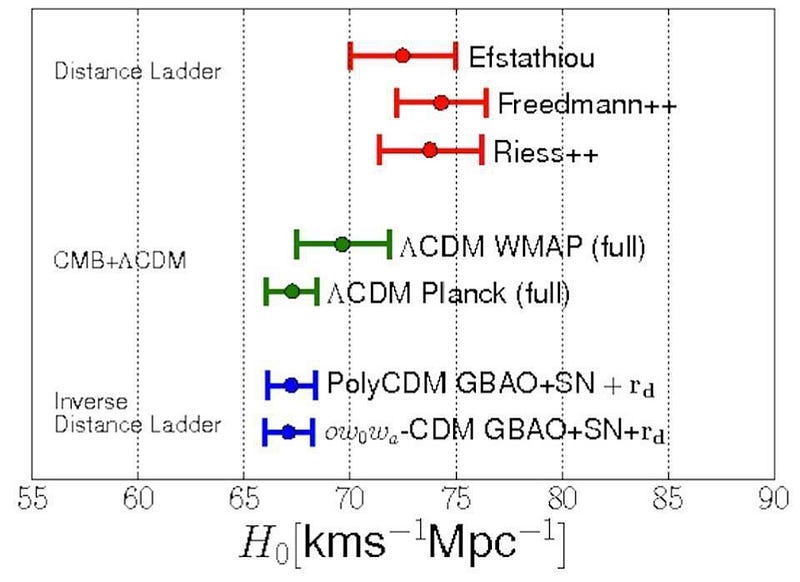

For generations, astronomers, astrophysicists and cosmologists attempted to refine our measurements of the rate of the Universe’s expansion: the Hubble constant. After many decades of debates, the Hubble Space Telescope key project appeared to solve the issue: 72 km/s/Mpc, with just a 10% uncertainty. But now, 17 years later, scientists can’t agree. One camp claims ~67 km/s/Mpc; the other claims ~73 km/s/Mpc, and the errors do not overlap. Something, or someone, is wrong, and we cannot figure out where.

This is such a problem because we have two major ways of measuring the expansion rate of the Universe: through the cosmic distance ladder and through the signals originating from the earliest moments of the Big Bang. The two methods are extremely different.

- For the distance ladder, we look at nearby, well-understood objects, then observe those same types of objects in more distant locations, then infer their distances, then use properties we observe at those distances to go even farther, etc. By building up redshift and distance measurements, we can reconstruct the expansion rate of the Universe.

- For the early signals method, we can use either the leftover light from the Big Bang (the Cosmic Microwave Background) or the correlation distances between distant galaxies (from Baryon Acoustic Oscillations) and see how those signals evolve over time as the Universe expands.

The first method seems to be giving the higher figure of ~73 km/s/Mpc, consistently, while the second gives ~67 km/s/Mpc.

This should trouble you deeply. If we understand the way the Universe works correctly, then every method we use to measure it should deliver the same properties and the same story about the cosmos we inhabit. Whether we use red giant stars or blue variable stars, rotating spiral galaxies or face-on spirals with fluctuating brightness, swarming elliptical galaxies or Type Ia supernovae, or the Cosmic Microwave Background or galaxy correlations, we should get an answer that’s consistent with a Universe having the same properties.

But that's not what happens. The distance ladder method systematically gives a value about 10% higher than the early signals method does, regardless of how we measure the distance ladder or which early signal we use. Here's the most accurate method for each one.

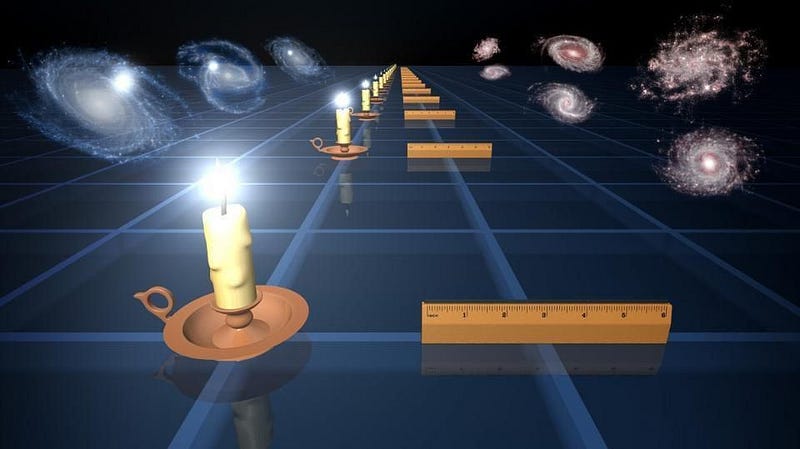

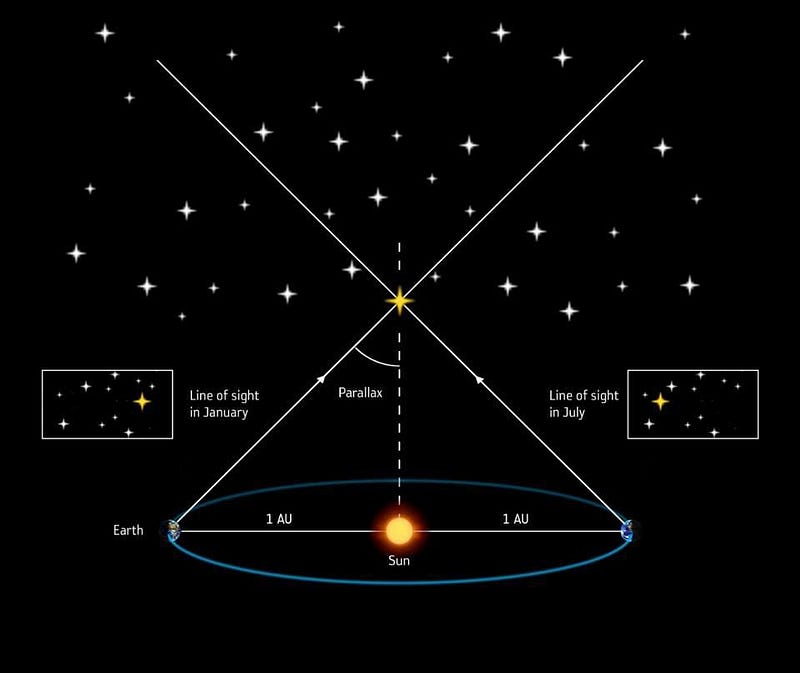

1.) The distance ladder: start with the stars in our own galaxy. Measure their distances using parallax: the shift in a star's apparent position over the course of an Earth year. As our world moves around the Sun, the apparent position of a nearby star shifts relative to the more distant background stars; the size of that shift tells us the star's distance.
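The parallax relation is simple enough to write down directly. Here's a minimal Python sketch using the standard small-angle definition of the parsec (a parallax of 1 arcsecond corresponds to a distance of 1 parsec); the function name and the example parallax are hypothetical, chosen only for illustration.

```python
PC_TO_LY = 3.2616  # light years per parsec (approximate)


def parallax_distance_pc(parallax_arcsec: float) -> float:
    """Distance in parsecs from an annual parallax angle in arcseconds.

    By definition, a star with a parallax of 1 arcsecond is 1 parsec away,
    so in the small-angle limit d [pc] = 1 / p [arcsec].
    """
    if parallax_arcsec <= 0:
        raise ValueError("parallax must be positive")
    return 1.0 / parallax_arcsec


# Hypothetical star with a parallax of 2 milliarcseconds
p = 0.002
d_pc = parallax_distance_pc(p)
print(f"{d_pc:.0f} pc, or about {d_pc * PC_TO_LY:.0f} light years")
```

The smaller the angle, the larger the distance, which is why parallax only works for relatively nearby stars: beyond a few thousand light years the shift becomes too tiny to measure reliably.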

Some of those stars will be Cepheid variable stars, which display a specific relationship between their luminosity (intrinsic brightness) and their period of pulsation: Leavitt’s Law. Cepheids are abundant within our own galaxy, but can also be seen in distant galaxies.
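Leavitt's Law turns a measured pulsation period into an intrinsic brightness, and comparing that with the observed brightness gives a distance through the standard distance modulus, m − M = 5 log₁₀(d / 10 pc). Here's a minimal Python sketch; the slope and zero point are placeholder values of roughly the right size for visible light, not a calibration taken from this article, and the example Cepheid is hypothetical.

```python
import math

# Placeholder Leavitt's Law coefficients, assumed for illustration only:
# absolute magnitude M = SLOPE * (log10(P / 1 day) - 1) + ZERO_POINT.
# A real analysis fits these to Cepheids whose distances come from parallax.
SLOPE = -2.43
ZERO_POINT = -4.05


def cepheid_absolute_magnitude(period_days: float) -> float:
    """Absolute magnitude predicted by the assumed period-luminosity relation."""
    return SLOPE * (math.log10(period_days) - 1.0) + ZERO_POINT


def distance_from_modulus_pc(apparent_mag: float, absolute_mag: float) -> float:
    """Invert the distance modulus m - M = 5 log10(d / 10 pc)."""
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


# Hypothetical Cepheid: 30-day period, observed apparent magnitude 25.0
M = cepheid_absolute_magnitude(30.0)
d_pc = distance_from_modulus_pc(25.0, M)
print(f"M = {M:.2f}, distance = {d_pc / 1e6:.1f} million parsecs")
```

Longer-period Cepheids are intrinsically brighter (more negative M), so they can be picked out in more distant galaxies.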

And some of these distant, Cepheid-containing galaxies have also hosted Type Ia supernovae that we've observed. These supernovae can be observed all throughout the Universe, from right here in our cosmic backyard to galaxies located many billions or even tens of billions of light years away.

With just three rungs:

- measuring the parallax of stars in our galaxy, including some Cepheids,

- measuring Cepheids in nearby galaxies up to 50–60 million light years away, some of which contain(ed) Type Ia supernovae,

- and then measuring Type Ia supernovae to the distant recesses of the expanding Universe,

we can reconstruct what the expansion rate is today, and how that expansion rate has changed over time.
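To make the chain concrete, here is a minimal, noise-free Python sketch of how the three rungs feed into one another. Every number in it (the "true" Hubble constant, the Leavitt's Law slope and zero point, the Type Ia peak magnitude, the parallaxes, periods, and redshifts) is a hypothetical value invented so the example checks itself; real analyses fit many noisy measurements and correct for numerous systematics. It also assumes the low-redshift approximation v ≈ cz.

```python
import math
from statistics import mean

C_KM_S = 299_792.458  # speed of light, km/s


def mu_from_pc(d_pc):
    """Distance modulus for a distance in parsecs."""
    return 5.0 * math.log10(d_pc / 10.0)


def pc_from_mu(mu):
    """Distance in parsecs for a distance modulus."""
    return 10.0 ** (mu / 5.0 + 1.0)


# ---- Synthetic "truth", all values hypothetical ----
TRUE_H0 = 70.0      # km/s/Mpc, arbitrary
SLOPE = -2.43       # Leavitt's Law slope, held fixed
TRUE_ZP = -4.05     # Leavitt's Law zero point
TRUE_M_SN = -19.3   # Type Ia peak absolute magnitude


def leavitt(period_days, zp):
    return SLOPE * (math.log10(period_days) - 1.0) + zp


# Rung 1: Milky Way Cepheids with parallaxes (arcsec) and periods (days);
# apparent magnitudes are generated from the synthetic truth.
mw = [(0.002, 5.0), (0.001, 12.0), (0.0005, 30.0)]
mw_obs = [(p, P, leavitt(P, TRUE_ZP) + mu_from_pc(1.0 / p)) for p, P in mw]
zp = mean(m - mu_from_pc(1.0 / p) - SLOPE * (math.log10(P) - 1.0)
          for p, P, m in mw_obs)

# Rung 2: Cepheids in a galaxy that also hosted a Type Ia supernova.
host_d_pc = 15e6
host_ceph = [(P, leavitt(P, TRUE_ZP) + mu_from_pc(host_d_pc)) for P in (20.0, 40.0)]
mu_host = mean(m - leavitt(P, zp) for P, m in host_ceph)
m_sn_host = TRUE_M_SN + mu_from_pc(host_d_pc)
M_sn = m_sn_host - mu_host  # calibrated supernova absolute magnitude

# Rung 3: distant supernovae with measured redshifts.
distant = []
for z in (0.01, 0.02, 0.04):
    d_mpc = C_KM_S * z / TRUE_H0
    distant.append((z, TRUE_M_SN + mu_from_pc(d_mpc * 1e6)))

# Least-squares fit of v = H0 * d through the origin.
pairs = [(C_KM_S * z, pc_from_mu(m - M_sn) / 1e6) for z, m in distant]
H0 = sum(v * d for v, d in pairs) / sum(d * d for _, d in pairs)
print(f"recovered H0 = {H0:.2f} km/s/Mpc")
```

Because each rung is calibrated from the one before it, any bias in the parallax or Cepheid step propagates straight through to the final value of H0.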

2.) The early signals: alternatively, start with the Big Bang, and the knowledge that our Universe is filled with dark matter, dark energy, normal matter, neutrinos, and radiation.

What’s going to happen?

The masses are going to attract one another and attempt to undergo gravitational collapse, with the denser regions attracting more and more of the surrounding matter. But as those regions grow denser, the radiation pressure within them rises, causing radiation to stream out of them and working to suppress gravitational growth.

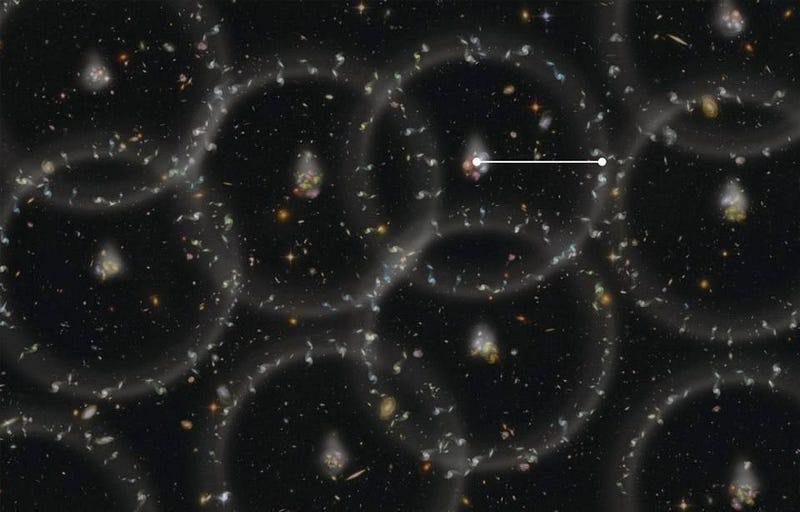

The fun thing is this: the normal matter has an interaction cross-section with the radiation, but the dark matter doesn’t. This leads to a specific “acoustic pattern” where normal matter experiences these bounces and compressions from the radiation.

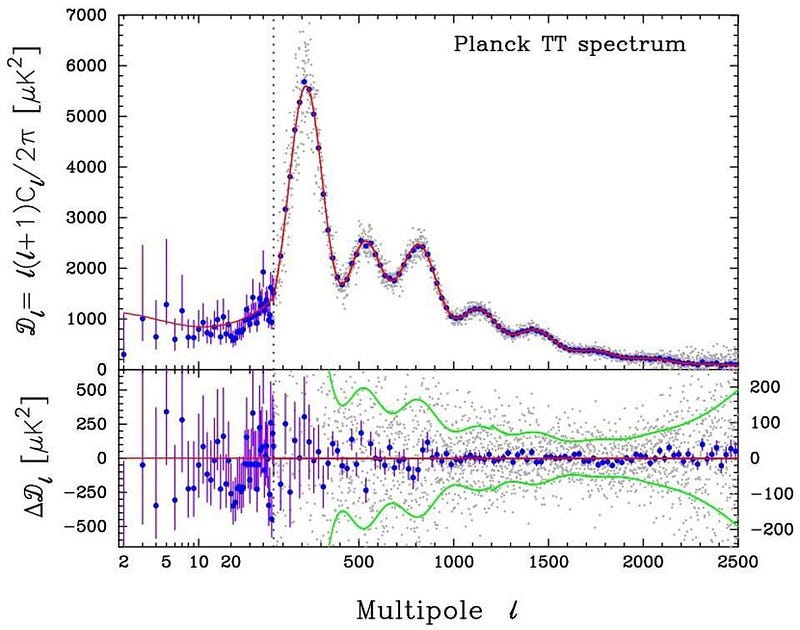

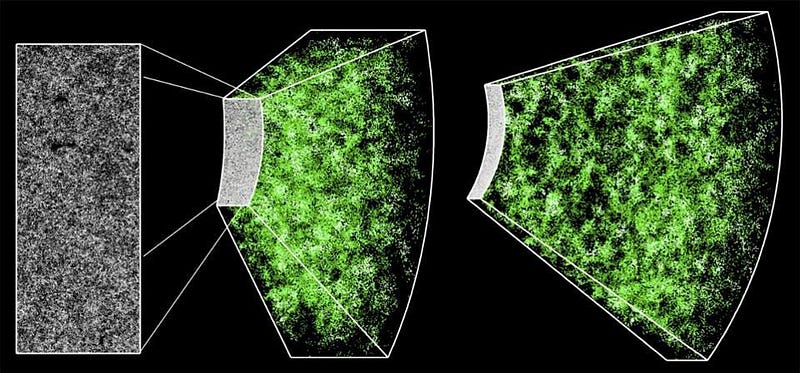

This shows up with a particular set of peaks in the temperature fluctuations of the Cosmic Microwave Background, and a specific distance scale for where you’re more likely to find a galaxy than either closer or farther away. As the Universe expands, these acoustic scales change, which should lead to signals in both the Cosmic Microwave Background (two images up) and the scales at which galaxies cluster (one image up).
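The galaxy-clustering scale acts as a "standard ruler": if early-Universe physics fixes its physical length, the angle it spans on the sky tells us how far away it is. Here's a toy Python sketch of that idea, assuming a comoving acoustic scale of about 147 Mpc (roughly the value inferred from fits to the Cosmic Microwave Background; treated purely as an input here) and the low-redshift approximation D ≈ cz/H₀. Real analyses use the full cosmological distance-redshift relation and measure the scale both along and across the line of sight.

```python
import math

C_KM_S = 299_792.458  # speed of light, km/s
R_S_MPC = 147.0       # assumed comoving acoustic scale (sound horizon), Mpc


def h0_from_bao_angle(theta_deg: float, z: float) -> float:
    """Toy H0 estimate from the angle spanned by the acoustic scale.

    Uses theta = r_s / D with D ~= c z / H0, valid only for z << 1.
    """
    theta_rad = math.radians(theta_deg)
    distance_mpc = R_S_MPC / theta_rad  # standard-ruler distance
    return C_KM_S * z / distance_mpc    # H0 = v / d with v ~= c z


# Hypothetical measurement: the acoustic scale spans 19 degrees at z = 0.1
print(f"H0 = {h0_from_bao_angle(19.0, 0.1):.1f} km/s/Mpc")
```

Note that the answer depends directly on the assumed ruler length: the early-signal value of H₀ is only as good as our calculation of that acoustic scale.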

By measuring what these scales are and how they change with distance/redshift, we can also get an expansion rate for the Universe. While the distance ladder method gives a rate of about 73 ± 2 km/s/Mpc, both of these early signal methods give 67 ± 1 km/s/Mpc. The numbers are different, and they don’t overlap.
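A quick way to quantify "they don't overlap" is to express the gap in units of the combined uncertainty. This Python sketch assumes the two results are independent and that their quoted errors are Gaussian standard deviations, which is a simplification.

```python
import math


def tension_sigma(a: float, sigma_a: float, b: float, sigma_b: float) -> float:
    """Difference between two independent Gaussian measurements, in units
    of their combined standard deviation."""
    return abs(a - b) / math.hypot(sigma_a, sigma_b)


ladder = (73.0, 2.0)  # distance ladder, km/s/Mpc
early = (67.0, 1.0)   # early-signal methods, km/s/Mpc

print(f"ratio: {ladder[0] / early[0]:.3f}")
print(f"tension: {tension_sigma(*ladder, *early):.1f} sigma")
```

With these inputs the ladder value is about 9% higher, and the gap is about 2.7 standard deviations: large enough to be uncomfortable, but still sensitive to how honestly each team has sized its error bars.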

There are a lot of potential explanations. It's possible that the nearby Universe has different properties than the ultra-distant, early Universe did, and so both teams are correct. It's possible that dark matter or dark energy (or something mimicking them) is changing over time, leading to different measurements using different methods. It's possible that there's some new physics or something tugging on our Universe from beyond the cosmic horizon. Or perhaps there's some fundamental flaw in our cosmological models.

But these possibilities are the fantastic, spectacular, sensational ones. They might get the overwhelming majority of the press and prestige, as they’re imaginative and clever. But there’s also a much more mundane possibility that is far more likely: the Universe is simply the same everywhere, and one of the measurement techniques is inherently biased.

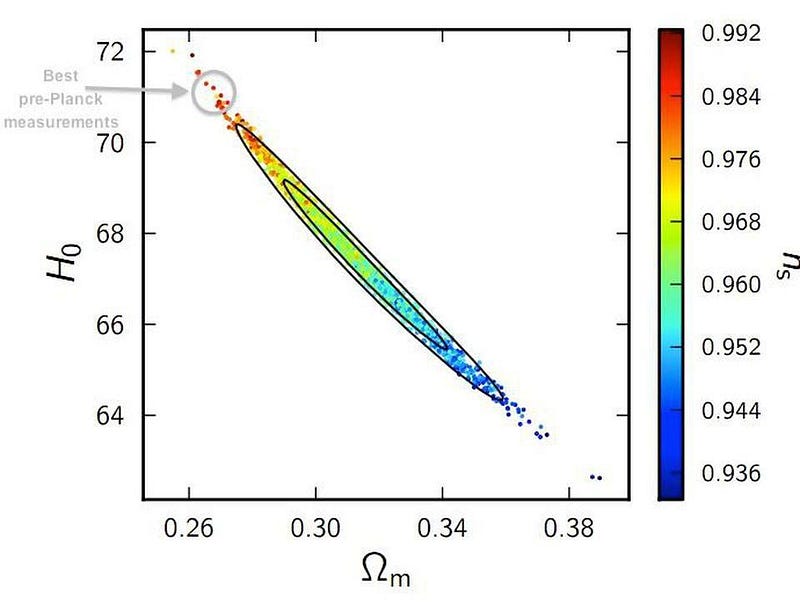

It's hard to identify potential biases in the early signal methods, because the measurements from WMAP, Planck, and the Sloan Digital Sky Survey are so precise. From the Cosmic Microwave Background, for example, we have measured the matter density of the Universe (about 32% ± 2%) and the scalar spectral index (0.968 ± 0.010) very well. With those measurements in place, it's very difficult to get a Hubble constant greater than about 69 km/s/Mpc; that's effectively the upper limit.
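One simplified way to see why the matter density caps the Hubble constant: the Cosmic Microwave Background constrains the combination Ω_m h² (with h = H₀ / 100 km/s/Mpc) much more tightly than Ω_m or h separately. This Python sketch assumes Ω_m h² ≈ 0.143, roughly the Planck-era value but not a number from this article, and holds it fixed while varying the matter fraction across the range quoted above.

```python
import math

# Assumed for illustration: the physical matter density Omega_m * h**2,
# treated as fixed by the CMB. 0.143 is an approximate value, not from the text.
OMEGA_M_H2 = 0.143


def hubble_from_matter_density(omega_m_fraction: float) -> float:
    """H0 in km/s/Mpc implied by a matter fraction at fixed Omega_m h^2."""
    h = math.sqrt(OMEGA_M_H2 / omega_m_fraction)
    return 100.0 * h


# Matter density quoted in the text: 32% +/- 2%
for omega_m in (0.30, 0.32, 0.34):
    print(f"Omega_m = {omega_m:.2f} -> H0 = {hubble_from_matter_density(omega_m):.1f} km/s/Mpc")
```

Under this simplification, even the low end of the matter-density range only reaches about 69 km/s/Mpc, in line with the upper limit described above; the real constraint comes from a full multi-parameter fit.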

There may be errors there that bias us, but we have a hard time enumerating what they could be.

For the distance ladder method, however, they’re plentiful:

- Our parallax methods may be biased by the gravity from our local solar neighborhood; the bent spacetime surrounding our Sun could be systematically altering our distance determinations.

- We are limited in our understanding of the Cepheids, including the fact that there are two types of them and some of them lie in non-pristine environments.

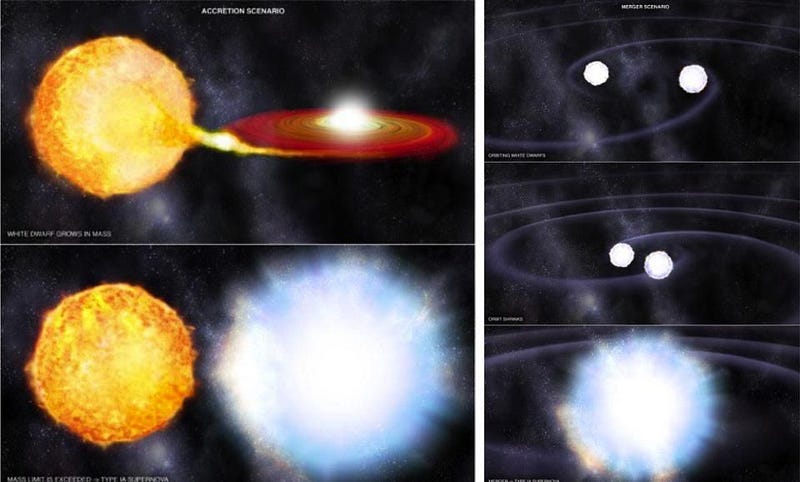

- And Type Ia supernovae can be caused by either accreting white dwarfs or colliding-and-merging white dwarfs, the environments they’re in may evolve over time, and there may yet be more to the mystery of how they’re made than we presently understand.

The discrepancy between these two different ways of measuring the expanding Universe may simply be a reflection of our overconfidence in how small our errors actually are.

The question of how quickly the Universe is expanding has troubled astronomers and astrophysicists ever since we first learned that it was expanding at all. It's an incredible achievement that multiple, independent methods yield answers that agree to within about 10%, but they still disagree with each other beyond their stated uncertainties, and that's troubling.

If there's an error in parallax, Cepheids, or supernovae, the expansion rate may truly be on the low end: 67 km/s/Mpc. If so, the Universe will fall into line when we identify our mistake. But if the Cosmic Microwave Background group is mistaken, and the expansion rate is closer to 73 km/s/Mpc, it foretells a crisis in modern cosmology. The Universe cannot have the dark matter density and initial fluctuations that a value of 73 km/s/Mpc would imply.

Either one team has made an unidentified mistake, or our conception of the Universe needs a revolution. I’m betting on the former.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.