Is there really a cosmological constant? Or is dark energy changing with time?

Constant? Not-a-constant? Or is there a fundamental flaw in the way we’re doing business?

This article was written by Sabine Hossenfelder. Sabine is a theoretical physicist specializing in quantum gravity and high-energy physics. She also writes about science as a freelancer.

“If you’re puzzled by what dark energy is, you’re in good company.”

–Saul Perlmutter

According to physics, the universe and everything in it can be explained by just a handful of equations. They're difficult equations, all right, but their simplest feature is also their most mysterious one. The equations contain a few dozen parameters that are, for all we presently know, unchanging, and yet these numbers determine everything about the world we inhabit. Physicists have spent much brainpower questioning where these numbers come from, whether they could have taken any values other than the ones we observe, and whether exploring their origin is even within the realm of science.

One of the key questions when it comes to these parameters is whether they are really constant, or whether they are time-dependent. If they vary, then their time-dependence would have to be determined by yet another equation, which would change the entire story that we currently tell about our Universe. If even one of the fundamental constants isn’t truly a constant, it would open the door to an entirely new subfield of physics.

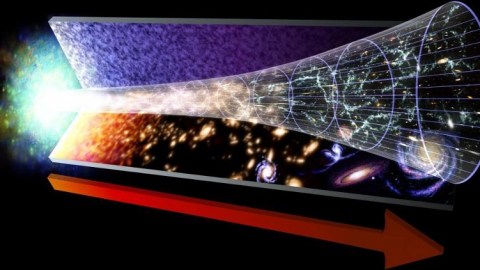

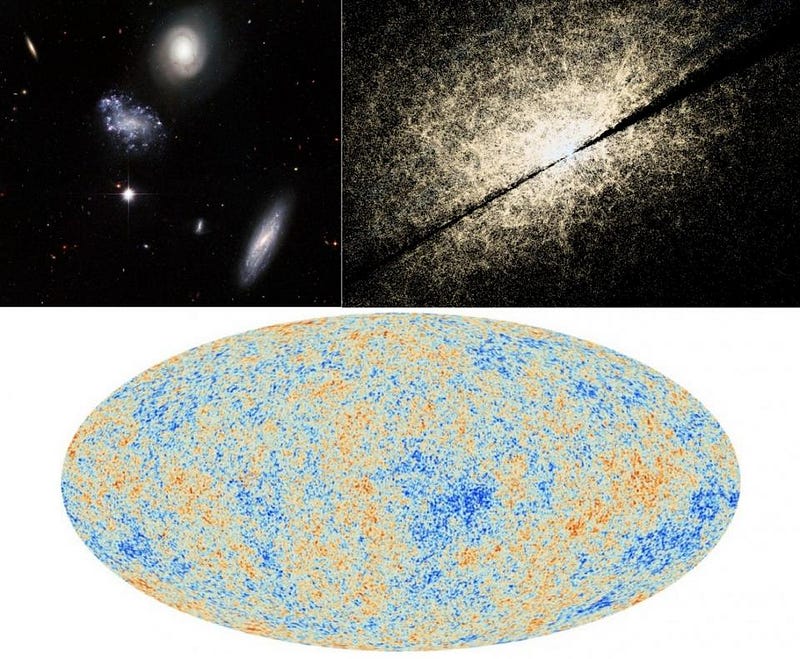

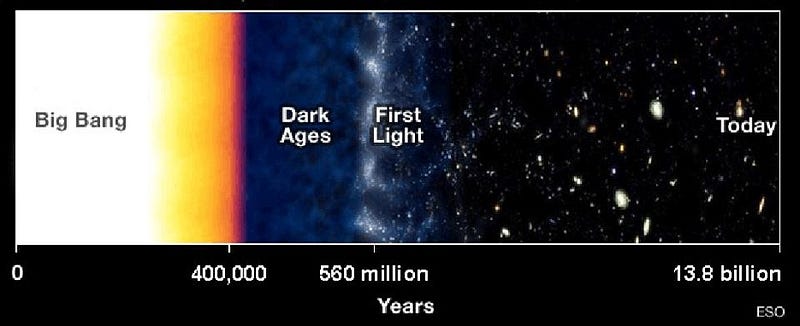

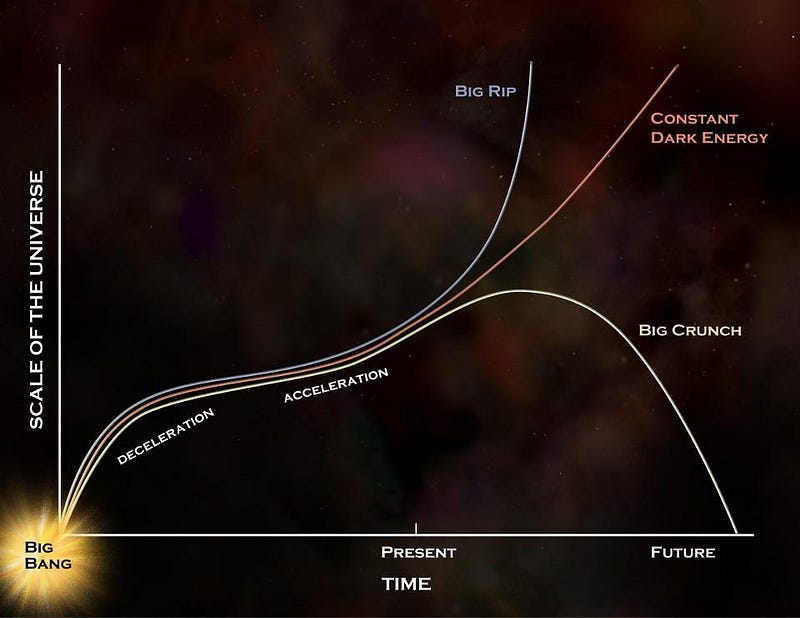

Perhaps the best-known parameter of all is the cosmological constant: the zero-point energy of empty space itself. It is what causes the universe’s expansion to accelerate. The cosmological constant is usually assumed to be, well, a constant. If it isn’t, it can be more generally referred to as ‘dark energy.’ If our current theories for the cosmos are correct, our universe will expand forever into a cold and dark future.
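
Why a cosmological constant makes the expansion accelerate can be read off the standard Friedmann acceleration equation. In this sketch the cosmological constant is treated as a fluid whose pressure equals minus its energy density (equation of state $w = p/\rho c^2 = -1$), which flips the sign of its contribution:

```latex
\frac{\ddot a}{a} = -\frac{4\pi G}{3}\sum_i\left(\rho_i + \frac{3p_i}{c^2}\right),
\qquad p_\Lambda = -\rho_\Lambda c^2
\;\Rightarrow\;
\left.\frac{\ddot a}{a}\right|_{\Lambda}
  = -\frac{4\pi G}{3}\left(\rho_\Lambda - 3\rho_\Lambda\right)
  = +\frac{8\pi G}{3}\,\rho_\Lambda > 0 .
```

Ordinary matter ($p \approx 0$) and radiation ($p = \rho c^2/3$) both enter with a negative sign and slow the expansion down; any component with $w < -1/3$ speeds it up.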

The value of the cosmological constant is infamously the worst prediction ever made using quantum field theory; the math says it should be 120 orders of magnitude larger than what we observe. But that the cosmological constant has a small, non-zero value that causes the Universe to accelerate is extremely well established by measurement. The evidence is so thoroughly robust that a Nobel Prize was awarded for its discovery in 2011.
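
The "120 orders of magnitude" is back-of-the-envelope arithmetic, and a minimal Python sketch makes the assumptions explicit. It assumes the naive quantum-field-theory estimate of the vacuum energy density is (cutoff energy)⁴ in natural units with the cutoff at the Planck scale, and takes the observed dark-energy density to be roughly (2.3 meV)⁴; both inputs are illustrative, not figures from the article.

```python
import math

# Assumption: naive vacuum energy density ~ (cutoff energy)^4 in natural units,
# with the cutoff placed at the Planck scale. Energies are in GeV.
PLANCK_MASS_GEV = 1.22e19          # Planck mass
REDUCED_PLANCK_MASS_GEV = 2.44e18  # reduced Planck mass

# Assumption: observed dark-energy density ~ (2.3 meV)^4, roughly what the
# measured expansion rate and dark-energy fraction imply.
OBSERVED_SCALE_GEV = 2.3e-12       # 2.3 meV expressed in GeV


def orders_of_magnitude(cutoff_gev: float, observed_gev: float) -> float:
    """log10 of (naive predicted energy density) / (observed energy density)."""
    predicted = cutoff_gev ** 4   # GeV^4
    observed = observed_gev ** 4  # GeV^4
    return math.log10(predicted / observed)


for name, cutoff in [("Planck mass", PLANCK_MASS_GEV),
                     ("reduced Planck mass", REDUCED_PLANCK_MASS_GEV)]:
    print(f"{name} cutoff: ~10^{orders_of_magnitude(cutoff, OBSERVED_SCALE_GEV):.0f} too large")
```

With these inputs the two cutoffs give roughly 10¹²³ and 10¹²⁰. The exact count depends on where one puts the cutoff, which is why the mismatch is usually quoted loosely as "about 120 orders of magnitude."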

Exactly what the value of the cosmological constant is, though, is controversial. There are different ways to measure it, and physicists have known for a few years that the different measurements give different results. This tension in the data is difficult to explain, and it has so far remained unresolved.

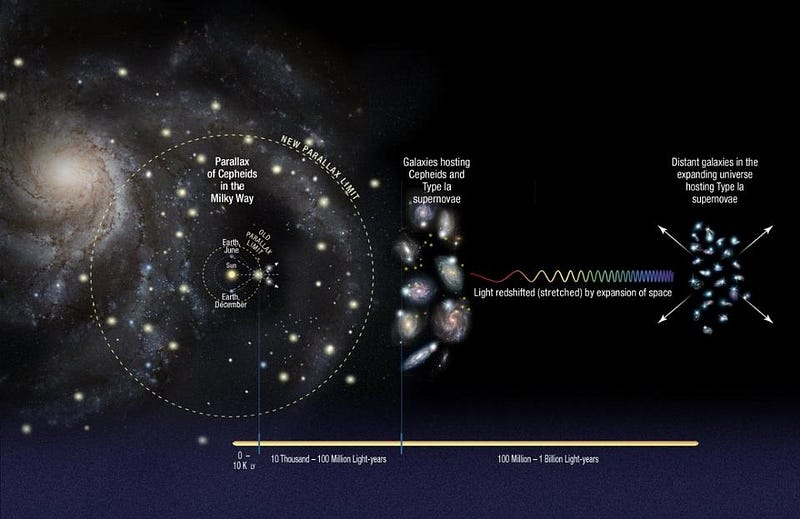

One way to determine the cosmological constant is by using the cosmic microwave background (CMB). The small temperature fluctuations between different locations and scales in the CMB encode density variations in the early universe and the subsequent changes in the radiation streaming from those locations. By fitting the CMB's power spectrum with the parameters that determine the expansion of the universe, physicists get a value for the cosmological constant. The most accurate of all such measurements currently comes from the Planck satellite.

Another way to determine the cosmological constant is to deduce the expansion of the universe from the redshift of the light from distant sources. This is how the Nobel Prize winners made their original discovery in the late 1990s, and the precision of this method has since improved. In addition, there are now multiple ways to make this measurement, and their results are all in general agreement with one another.
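
To see how redshifts and brightnesses can reveal acceleration, here is a sketch assuming a spatially flat universe containing only matter and a cosmological constant, with illustrative parameters (H₀ = 70 km/s/Mpc, Ω_m = 0.3, Ω_Λ = 0.7) that are not taken from the article. It compares the luminosity distance of a source at redshift 0.5 with and without a cosmological constant: with acceleration the source is farther away and therefore appears dimmer, which is the effect the supernova teams detected.

```python
import math

C_KM_S = 299_792.458  # speed of light in km/s
H0 = 70.0             # assumed Hubble constant in km/s/Mpc (illustrative)


def hubble_ratio(z: float, omega_m: float, omega_l: float) -> float:
    """E(z) = H(z)/H0 for a flat universe of matter plus a cosmological constant."""
    return math.sqrt(omega_m * (1 + z) ** 3 + omega_l)


def luminosity_distance(z: float, omega_m: float, omega_l: float, steps: int = 1000) -> float:
    """Luminosity distance in Mpc; integrates dz/E(z) with the trapezoidal rule."""
    dz = z / steps
    integral = 0.0
    for i in range(steps):
        z0, z1 = i * dz, (i + 1) * dz
        integral += 0.5 * dz * (1 / hubble_ratio(z0, omega_m, omega_l)
                                + 1 / hubble_ratio(z1, omega_m, omega_l))
    return (1 + z) * (C_KM_S / H0) * integral


def distance_modulus(d_mpc: float) -> float:
    """m - M = 5 log10(d / 10 pc), with d given in Mpc."""
    return 5 * math.log10(d_mpc * 1e6 / 10)


z = 0.5
d_lcdm = luminosity_distance(z, omega_m=0.3, omega_l=0.7)    # with a cosmological constant
d_matter = luminosity_distance(z, omega_m=1.0, omega_l=0.0)  # matter only, no acceleration
print(f"d_L with Lambda:    {d_lcdm:.0f} Mpc")
print(f"d_L without Lambda: {d_matter:.0f} Mpc")
print(f"dimmer by {distance_modulus(d_lcdm) - distance_modulus(d_matter):.2f} magnitudes")
```

The matter-only case has a closed-form answer, which is a handy check on the numerical integration.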

But these two ways to determine the cosmological constant give results that differ with a statistical significance of 3.4σ. That corresponds to a probability of less than one in a thousand that the difference is due to random fluctuations in the data, which is admittedly still not strong enough to rule out a statistical fluke. Multiple explanations for this have since been proposed. One possibility is a systematic error in the measurement, most likely in the CMB measurement from the Planck mission. There are reasons to be skeptical of Planck here, because the tension goes away when the finer structures of the data (the large multipole moments) are omitted. In addition, incorrect foreground subtractions may still be skewing the data, as they did in the infamous BICEP2 announcement. For many astrophysicists, these are indicators that something is amiss either with the Planck measurement or with the data analysis.
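
Converting a significance in σ into a probability assumes Gaussian statistics. A minimal Python sketch, using the two-sided convention (a fluctuation in either direction counts), reproduces the "less than one in a thousand" figure; the 5σ line is included only for comparison with the threshold particle physicists conventionally use to claim a discovery.

```python
import math


def two_sided_p_value(n_sigma: float) -> float:
    """Probability that a Gaussian fluctuation lands at least n_sigma from the mean, either direction."""
    return math.erfc(n_sigma / math.sqrt(2))


for n_sigma in (3.4, 4.1, 5.0):
    p = two_sided_p_value(n_sigma)
    print(f"{n_sigma} sigma: p = {p:.1e}, about 1 in {1 / p:,.0f}")
```

For 3.4σ this gives p ≈ 7 × 10⁻⁴, roughly 1 in 1,500.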

But maybe it’s a real effect after all. In this case, several modifications of the standard cosmological model have been put forward. They range from additional neutrinos to massive gravitons to actual, bona fide changes in the cosmological constant.

The idea that the cosmological constant changes from one place to the next is not an appealing option, because this tends to screw up the CMB spectrum too much. Currently, though, the most popular explanation for the data tension in the literature seems to be a time-varying cosmological constant.

A group of researchers from Spain, for example, claims a stunning 4.1-σ preference for a time-dependent cosmological constant over a truly constant one. This claim seems to have been widely ignored, and indeed one should be cautious. They test for one very specific time-dependence, and their statistical analysis does not account for the other parameterizations that might have been tried instead. (This is the theoretical physicist's variant of post-selection bias.) Moreover, they fit their model not only to the two above-mentioned datasets but to a whole bunch of others at the same time, which makes it hard to tell why their model seems to work better. A couple of cosmologists whom I asked about this remarkable result, and about why it has been ignored, complained that the Spanish group's method of data analysis is non-transparent.
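
The worry about testing one specific time-dependence is closely related to what particle physicists call the look-elsewhere effect: if many alternatives are tried, one of them will eventually look significant by chance. The sketch below is a toy illustration only. It treats the 4.1σ figure as if it were an ordinary two-sided p-value and assumes the alternative parameterizations are independent trials; real parameterizations are correlated, so the actual penalty is usually smaller.

```python
import math


def global_p_value(local_p: float, n_trials: int) -> float:
    """Chance that at least one of n_trials independent tests fluctuates to local_p or better."""
    return 1 - (1 - local_p) ** n_trials


def p_to_sigma(p: float) -> float:
    """Invert the two-sided Gaussian p-value by bisection (standard library only)."""
    lo, hi = 0.0, 40.0
    for _ in range(200):
        mid = (lo + hi) / 2
        if math.erfc(mid / math.sqrt(2)) > p:
            lo = mid  # tail probability still too large, so more sigma is needed
        else:
            hi = mid
    return (lo + hi) / 2


local_p = math.erfc(4.1 / math.sqrt(2))  # a single 4.1-sigma result
for n in (1, 10, 100):
    g = global_p_value(local_p, n)
    print(f"{n:>3} parameterizations tried: global p = {g:.1e} ({p_to_sigma(g):.1f} sigma)")
```

Under these assumptions, a 4.1σ result found after 100 independent tries would be worth less than 3σ.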

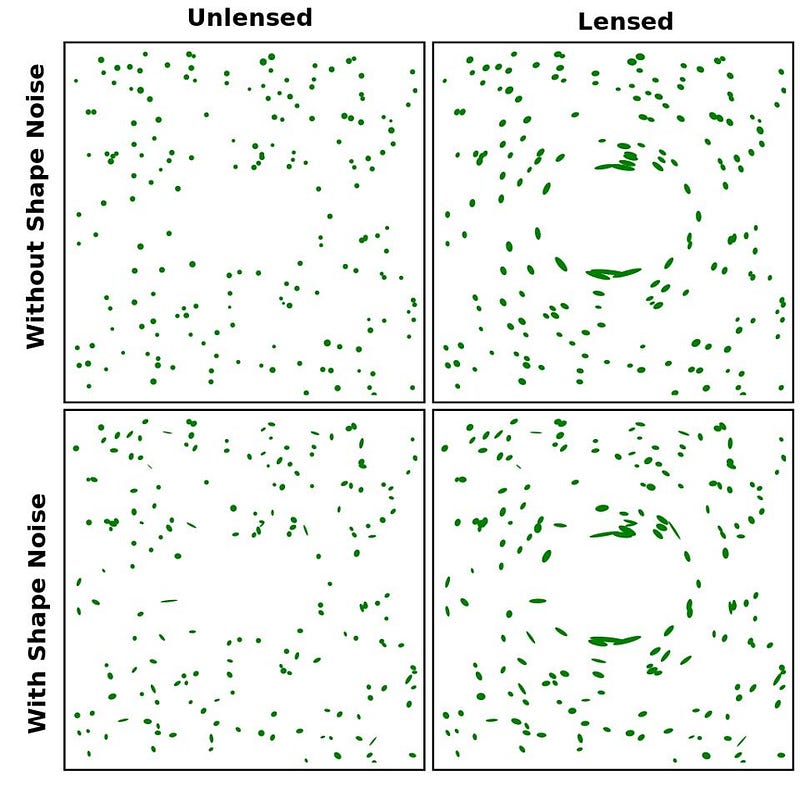

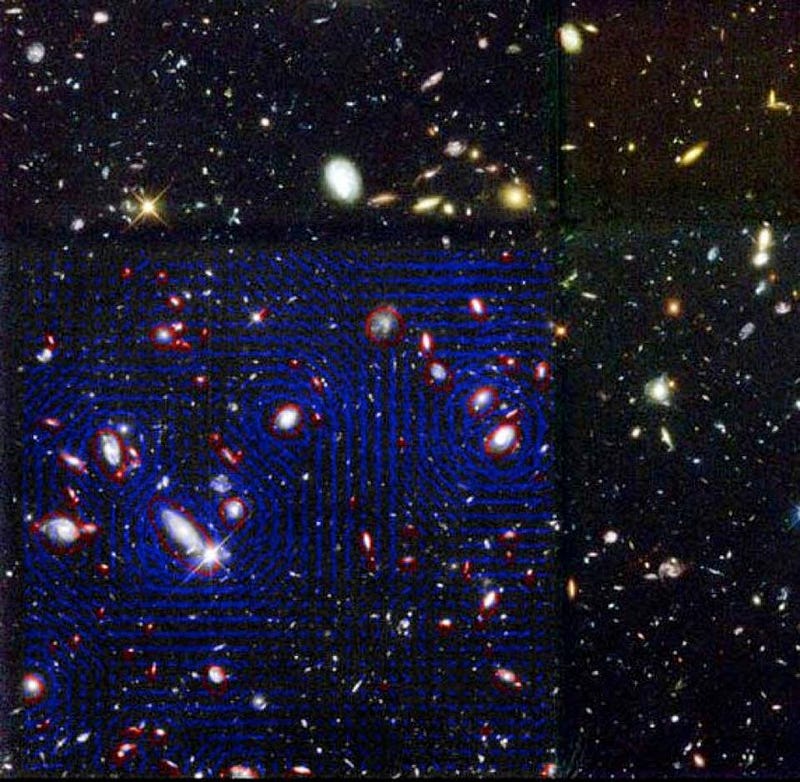

Be that as it may, just when I put the Spaniards’ paper away, I saw another paper that supported their claim with an entirely independent study based on weak gravitational lensing. Weak gravitational lensing happens when a foreground galaxy distorts the image shapes of more distant, background galaxies. The qualifier ‘weak’ sets this effect apart from strong lensing, which is caused by massive nearby objects — such as black holes — and deforms point-like sources to arcs, rings, and multiple images. Weak gravitational lensing, on the other hand, is not as easily recognizable and must be inferred from the statistical distribution of the ellipticities of galaxies.
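
Why weak lensing has to be inferred statistically can be shown with a toy simulation. It assumes the weak-lensing approximation in which each galaxy's observed ellipticity is its intrinsic ellipticity plus a small common shear, and that intrinsic ellipticities are randomly oriented so they average to zero. The shear value and intrinsic scatter below are hypothetical, and the sketch ignores real complications such as telescope blurring and intrinsic alignments. No single galaxy reveals the shear, but the average over many galaxies does, with noise falling as 1/√N.

```python
import random
import statistics

random.seed(1)

TRUE_SHEAR = 0.02         # hypothetical small lensing shear
INTRINSIC_SCATTER = 0.25  # hypothetical spread of intrinsic galaxy ellipticities


def observed_ellipticities(n_galaxies: int) -> list[float]:
    """One ellipticity component per galaxy: random intrinsic shape plus the common shear."""
    return [random.gauss(0.0, INTRINSIC_SCATTER) + TRUE_SHEAR for _ in range(n_galaxies)]


for n in (100, 10_000, 1_000_000):
    estimate = statistics.fmean(observed_ellipticities(n))
    error = INTRINSIC_SCATTER / n ** 0.5  # shape noise shrinks as 1/sqrt(N)
    print(f"N = {n:>9,}: shear estimate {estimate:+.4f} +/- {error:.4f}")
```

With 100 galaxies the noise (about 0.025) swamps the 0.02 signal; with a million it is about 0.00025. This is why surveys need millions of galaxies.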

The Kilo-Degree Survey (KiDS) has gathered and analyzed weak-lensing data from about 15 million distant galaxies. While their measurements are not sensitive to the expansion rate of the universe, they are sensitive to the density of dark energy, which affects the way light travels from those galaxies to us. This density is encoded in a cosmological parameter imaginatively named σ₈, which measures the amplitude of the matter power spectrum on scales of 8 Mpc/h, where h is the present-day Hubble expansion rate in units of 100 km/s/Mpc. Their data, too, is in conflict with the CMB data from the Planck satellite.
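
For reference, the standard definition: σ₈ is the root-mean-square fluctuation of the matter density smoothed with a spherical top-hat filter of radius R = 8 h⁻¹ Mpc, computed from the linear matter power spectrum P(k):

```latex
\sigma_R^2 = \frac{1}{2\pi^2}\int_0^\infty P(k)\,\widetilde W^2(kR)\,k^2\,dk,
\qquad
\widetilde W(x) = \frac{3\,(\sin x - x\cos x)}{x^3},
\qquad
\sigma_8 \equiv \sigma_R\big|_{R = 8\,h^{-1}\,\mathrm{Mpc}},
\qquad
h = \frac{H_0}{100\ \mathrm{km\,s^{-1}\,Mpc^{-1}}} .
```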

The members of the KiDS collaboration have tested which changes to the standard cosmological model work best to ease the tension in the data. Intriguingly, of all the explanations they tried, the one that works best has the cosmological constant changing with time. The change is such that the effects of accelerated expansion are becoming more pronounced, not less.
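
The article does not say which time-dependence was tested. One widely used way to let dark energy vary, shown here purely as an illustration, is the Chen-Polarski-Linder (CPL) form for the equation of state, w(a) = w₀ + w_a(1 − a), where a is the cosmic scale factor (a = 1 today). The sketch computes how the dark-energy density evolves under that assumption. A true cosmological constant (w = −1) stays fixed; w below −1 (often called "phantom" dark energy) makes the density grow as the universe expands, so its accelerating effect becomes more pronounced; w above −1 makes it dilute.

```python
import math


def dark_energy_density_ratio(a: float, w0: float, wa: float) -> float:
    """rho_DE(a) / rho_DE(today) for the CPL equation of state w(a) = w0 + wa * (1 - a).

    a is the scale factor: a = 1 today, a < 1 in the past.
    """
    return a ** (-3 * (1 + w0 + wa)) * math.exp(-3 * wa * (1 - a))


# Hypothetical (w0, wa) values chosen only to show the three qualitative behaviors.
cases = {
    "cosmological constant (w = -1.0)": (-1.0, 0.0),
    "phantom-like (w = -1.1)": (-1.1, 0.0),
    "quintessence-like (w = -0.9)": (-0.9, 0.0),
}
for label, (w0, wa) in cases.items():
    past = dark_energy_density_ratio(0.5, w0, wa)
    print(f"{label}: density at a = 0.5 is {past:.3f} times today's")
```

For the phantom-like case the density in the past was lower than today, meaning it is growing with time.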

In summary, it seems increasingly unlikely that the tension in the cosmological data is due to chance. Cosmologists are justifiably cautious, and most of them bet on a systematic problem with either the Planck data or, alternatively, the calibration of the cosmic distance ladder. However, if these measurements receive independent confirmation, the next best bet is on time-dependent dark energy. It won't make our future any brighter, though. Even if dark energy changes with time, all indications point towards the universe continuing to expand, forever, into cold darkness.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.