How To Spot A Bad Scientific Theory

Our biases, preferences, and ideas of simplicity and elegance can get in the way. Here’s a scientific way to cut through them all.

What are the rules governing reality? If you could determine what the actual laws of nature are, you’d be able to successfully predict the outcome of any experiment. You could create any physical setup you dreamed up, and you’d know how it would behave as you moved forward in time. Even within the parameters of quantum mechanics, you’d be able to give an exact probability distribution, and what you observed would match it time and time again.

That’s the dream of any scientist who works with a theory: to come up with something so successful that its predictive and post-dictive powers are correct every time. In 2018, we’re closer than we’ve ever been to getting it right across the board. But there are rules to theorizing successfully, and if you violate them, your theory won’t just be wrong; it will be bad science.

Whenever we observe a phenomenon in the Universe, our curiosity compels us to attempt to understand what caused it. It isn’t enough to describe it with a poetic picture or an analogy; we demand a quantitative description of what happens, when, and by what amount. We seek to understand what processes drive this phenomenon, and how these processes create an effect of exactly the magnitude observed.

And we want to be able to apply our rules to systems we haven’t yet observed or measured, to predict novel behavior that wouldn’t arise in other formulations. Ideas are a dime a dozen, but good ideas are extremely rare. The simple reason why? Most ideas assume too much and predict too little. There’s a science to how this all works.

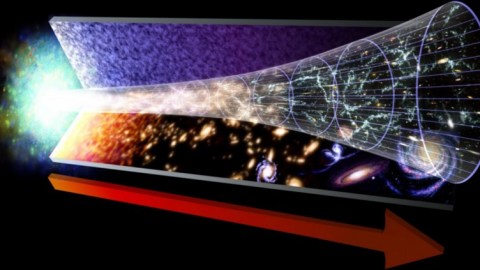

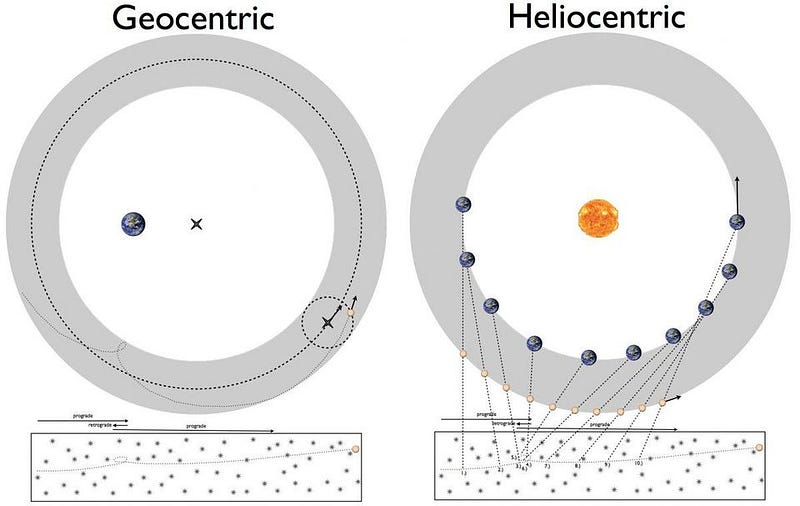

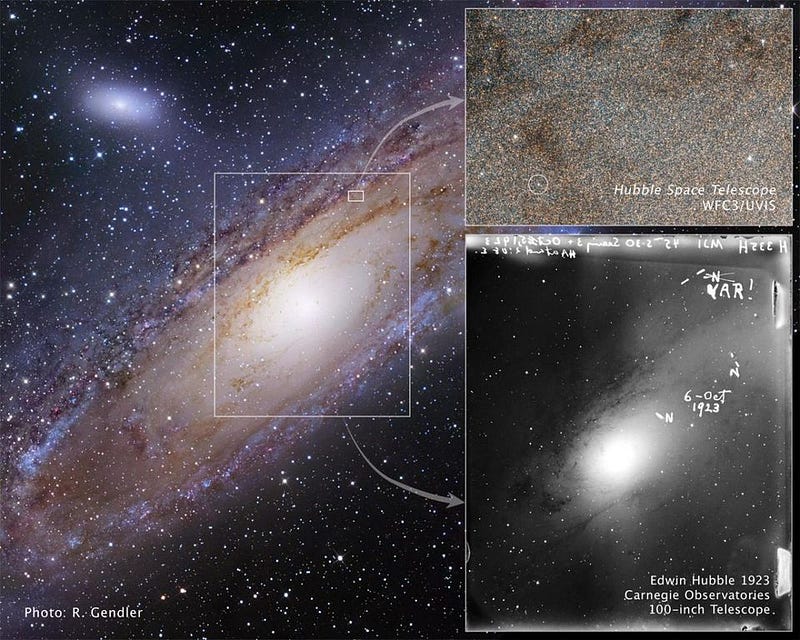

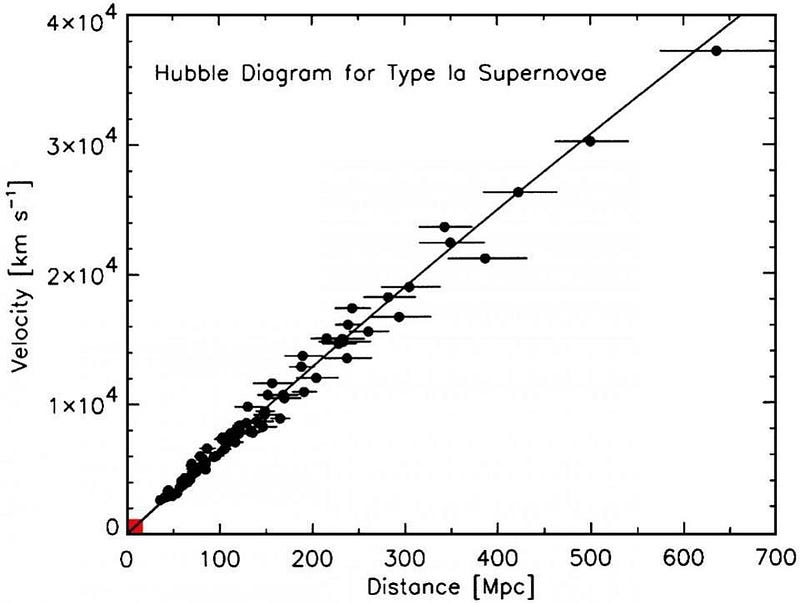

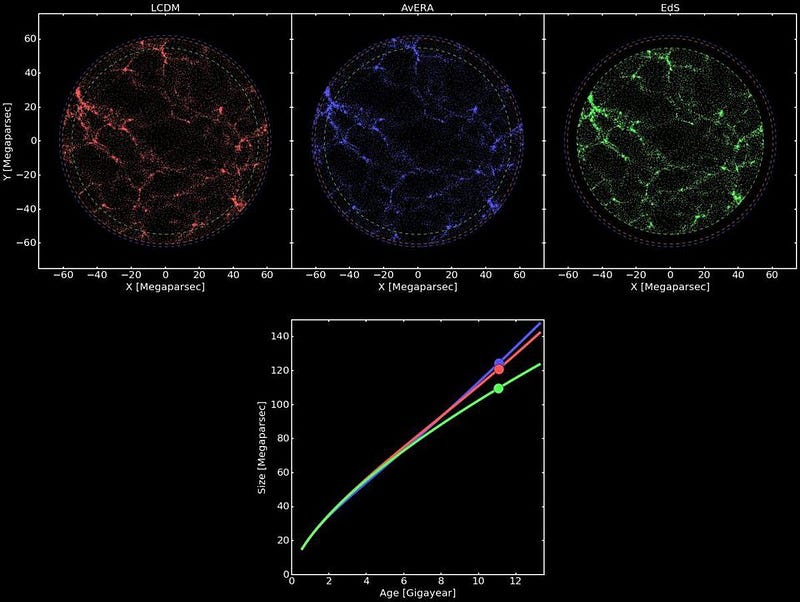

Take the expanding Universe, for example. When we look out at galaxies outside of the Milky Way, we can measure individual stars within them. Since we measure stars within our own galaxy, too, and believe (to a great degree of accuracy) that we understand how stars work, when we measure the same types of stars elsewhere, we can use that information to determine how far away they are. Get enough of these measurements for the right types of stars, and you can derive how far away these galaxies are.
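As a rough illustration of that step, here is a minimal sketch using the standard distance-modulus relation, m − M = 5 log10(d / 10 pc). The function name and the example magnitudes are hypothetical, chosen only for illustration, and the sketch assumes no dimming by intervening dust.

```python
def distance_parsecs(apparent_mag, absolute_mag):
    """Distance implied by the distance modulus m - M = 5 * log10(d / 10 pc).

    Assumes the star's intrinsic (absolute) magnitude is known and that
    no dust dims the light along the way.
    """
    return 10.0 ** ((apparent_mag - absolute_mag + 5.0) / 5.0)


# Hypothetical star: absolute magnitude -4, observed at apparent magnitude 21.
d = distance_parsecs(21.0, -4.0)
print(f"{d:.3g} parsecs")
```

The fainter a star of known intrinsic brightness appears, the farther away it must be; repeating this for many stars of the same type in a galaxy is what pins down that galaxy's distance.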

Add onto that the fact that light appears redshifted from these galaxies, and we can infer one of two things:

- either the distant galaxies are moving away from us, and their light appears redder because of their motion relative to us,

- or the space between those galaxies and ourselves is expanding, causing the wavelength of that light to lengthen and become redder along its journey.

Either one of these would be consistent with the known laws of physics, making them both great candidate explanations. When we look at the distance-redshift relation for nearby galaxies, we can see that it doesn’t discriminate between these two possibilities.

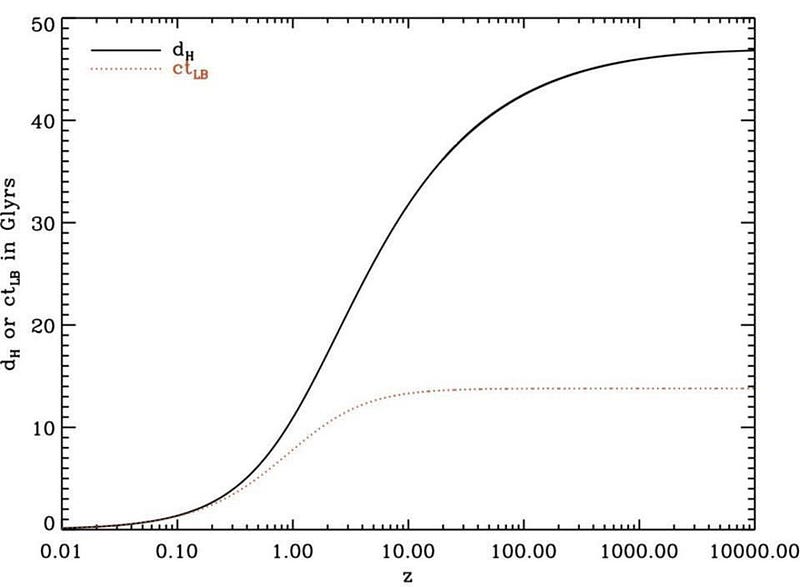

This is a reasonable way to begin theorizing! See a phenomenon, and come up with a plausible explanation (or multiple plausible explanations) for what you’ve observed. Both of these ideas would have consequences for the Universe, however. If distant galaxies were moving away from us, then you’d reach a point where you were limited by the speed of light: the ultimate speed limit of the Universe.

But if the space between galaxies were expanding, there’s no limit to the amount of redshift we could observe. At very great distances, we’d see a difference between these two explanations. All biases aside, if you can make a physical prediction based on your theory that’s unique and powerful, then testing it out will be the decisive factor.
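To see why nearby galaxies can’t tell the two pictures apart while distant ones can, here is a small sketch of the pure-motion reading only. It compares the low-redshift approximation v ≈ cz with the special-relativistic Doppler formula, 1 + z = sqrt((1 + v/c) / (1 − v/c)). The function names are illustrative, and the sketch does not model expanding space, which would require a full cosmological model.

```python
C_KM_S = 299_792.458  # speed of light in km/s


def naive_velocity(z):
    """Low-redshift approximation: v is roughly c * z."""
    return C_KM_S * z


def doppler_velocity(z):
    """Recession speed if the redshift were purely special-relativistic motion.

    Solves 1 + z = sqrt((1 + b) / (1 - b)) for b = v / c.
    """
    s = (1.0 + z) ** 2
    return C_KM_S * (s - 1.0) / (s + 1.0)


for z in (0.01, 0.1, 1.0, 3.0):
    print(f"z = {z}: c*z = {naive_velocity(z):.0f} km/s, "
          f"Doppler = {doppler_velocity(z):.0f} km/s")
```

At small redshifts the two numbers nearly coincide, which is why the nearby data don’t discriminate. At large redshifts the Doppler speed levels off below the speed of light, so the motion-only picture makes a distinct prediction that distant galaxies can test.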

The fact that we can use a theory to make a unique and powerful prediction is one of the hallmarks of what separates a good scientific theory from a bad one. If your theory doesn’t make predictions, it’s pretty useless as far as physics is concerned. This is a charge that’s often correctly leveled against string theory, whose predictions are all but untestable in practice.

But when the charge is leveled against cosmic inflation, it’s completely unfair. Inflation has made no fewer than six unique predictions that were untested when it was proposed, and four of them have been validated already, with the other two awaiting better experiments to test them. Your theory, in order to be of any quality, must be testable against the alternatives.

It also must not be unnecessarily complicated. There are a lot of mysteries in the Universe today, from tiny-scale phenomena like why neutrinos have mass to dark matter and dark energy on the largest scales. There are a myriad of models out there to explain these (and other) puzzles, but most of the theoretical ideas out there are quite bad.

Why?

Because most of them invoke a whole suite of new physics to explain just one otherwise inexplicable observation.

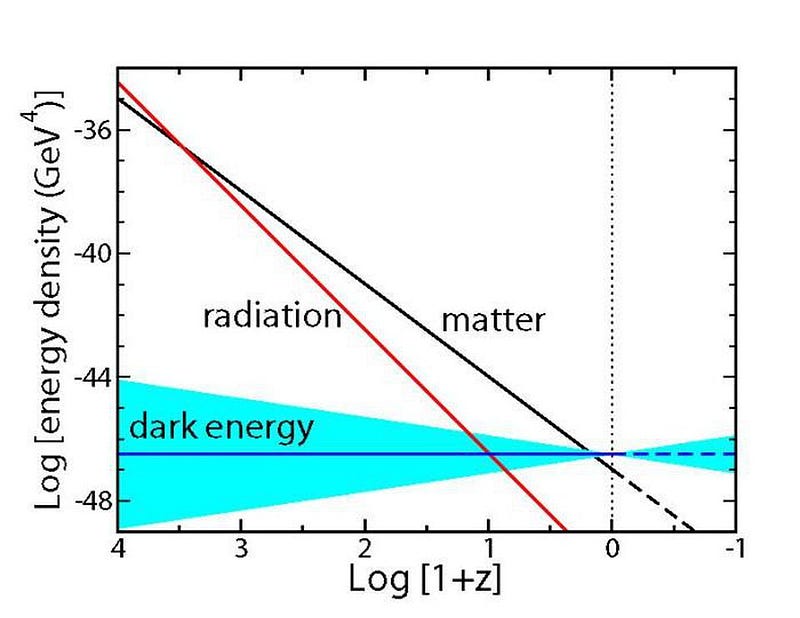

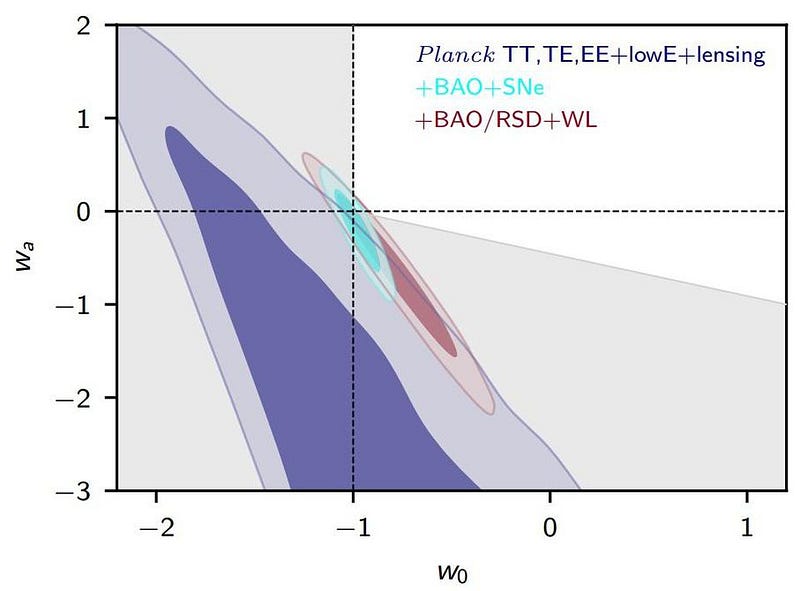

Take dark energy for instance. It is, at present, completely explicable by adding just one new parameter — a cosmological constant — to our best known theory of gravity, General Relativity. But there are alternative explanations that could also do the job.

- Dark energy might be a new field, with a non-constant equation of state and/or a magnitude that changes over time.

- It might be connected to inflation via a quintessence-like field.

- Or General Relativity might be replaced by any of the alternatives we could contrive that aren’t already ruled out by the existing data.

These explanations are all important to keep in mind as possibilities, but they’re also examples of speculative scientific theories that no one should believe.

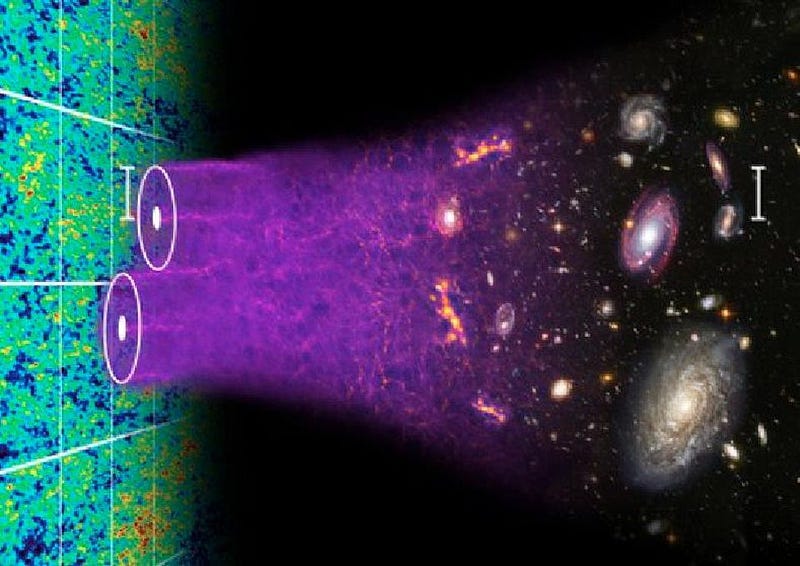

Why not? Because these alternative explanations aren’t doing anything meaningfully better than the default, “vanilla” explanation of a cosmological constant. The full suite of data we have concerning the behavior of dark energy (including supernovae, gamma-ray bursts, baryon acoustic oscillations, the cosmic microwave background, and the large-scale clustering data) shows no evidence for any of these alternatives.

There are no unsolved puzzles or observational problems that arise with the standard view of dark energy. In other words, there’s no motivation to unnecessarily complicate matters. Like Russell’s teapot, just because something isn’t ruled out doesn’t mean it’s worth considering.

The burden of proof is on any theorist to demonstrate that their new model has a compelling motivation. Historically, that motivation has come in the form of unexplained data, which cries out for explanation and which cannot be explained without some sort of new physics. If it can be explained without new physics, that’s the route you should take. History has shown that path to be almost always correct.

If you can explain what your standard theory doesn’t explain, with one new field, one new particle, or one new interaction, that’s the next path you should attempt. Ideally, you’ll explain multiple observations with this new parameter of your theory, and it will lead to new predictions you can go out and test.

But adding more and more modifications to your theory, making your model objectively more complicated, will always have the power to offer you a better fit to the data, so a better fit alone is not evidence that the additions are real. In general, the number of new free parameters your idea introduces should be far smaller than the number of new things it purports to explain. The great power of science is in its ability to predict and explain what we see in the Universe. The key is to do it as simply as possible, but not to oversimplify it any further than that.
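A toy sketch can make the point about free parameters concrete. It fits the same measurements with a one-parameter model (a constant) and a two-parameter model (a straight line), then scores each with the Bayesian information criterion, BIC = χ² + k ln(n), which charges a penalty for every extra parameter. The data values and the uncertainty are made up purely for illustration, and BIC is one common choice of penalty swapped in here, not a method taken from this article.

```python
import math

# Made-up toy measurements of a quantity that is actually constant,
# with an assumed measurement uncertainty SIGMA on every point.
XS = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
YS = [1.02, 0.97, 1.01, 0.99, 1.03, 0.98, 1.00, 1.02, 0.96, 1.02]
SIGMA = 0.03


def chi2_constant(ys, sigma):
    """Chi-squared of the best-fit constant (one free parameter)."""
    mean = sum(ys) / len(ys)
    return sum((y - mean) ** 2 for y in ys) / sigma ** 2


def chi2_line(xs, ys, sigma):
    """Chi-squared of the least-squares straight line (two free parameters)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return sum((y - (intercept + slope * x)) ** 2
               for x, y in zip(xs, ys)) / sigma ** 2


def bic(chi2, n_params, n_points):
    """Bayesian information criterion for Gaussian errors; lower is better."""
    return chi2 + n_params * math.log(n_points)


chi2_c = chi2_constant(YS, SIGMA)
chi2_l = chi2_line(XS, YS, SIGMA)
print(f"constant: chi2 = {chi2_c:.3f}, BIC = {bic(chi2_c, 1, len(YS)):.3f}")
print(f"line:     chi2 = {chi2_l:.3f}, BIC = {bic(chi2_l, 2, len(YS)):.3f}")
```

The line can never fit worse than the constant, because it contains the constant as a special case. Here its improvement is tiny, so once the extra parameter is penalized, the simpler model comes out ahead.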

Bad scientific theories abound, rife with unnecessary complications, extra sets of parameters, and unconstrained, ill-motivated speculations. Unless there’s a reality check coming, in the form of experimental or observational data, they aren’t worth wasting your time on.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.