Dark Matter’s Biggest Problem Might Simply Be A Numerical Error

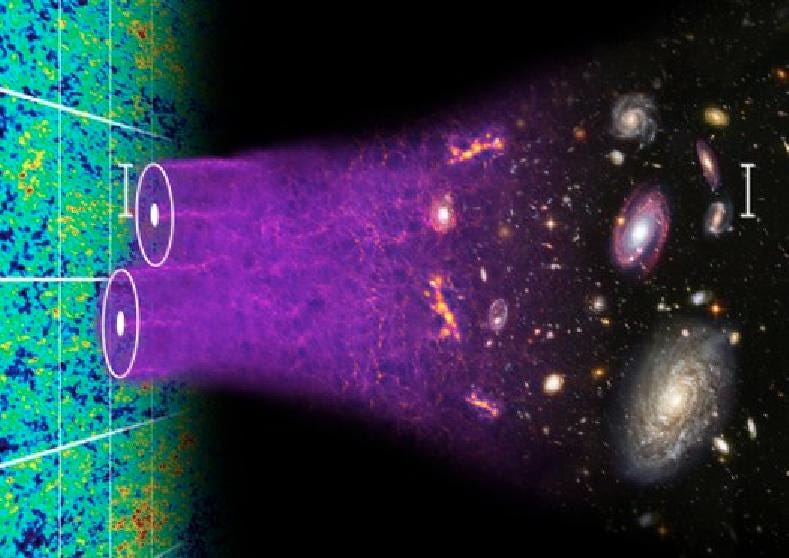

It’s one of cosmology’s biggest unsolved mysteries. The strongest argument against it may have just evaporated.

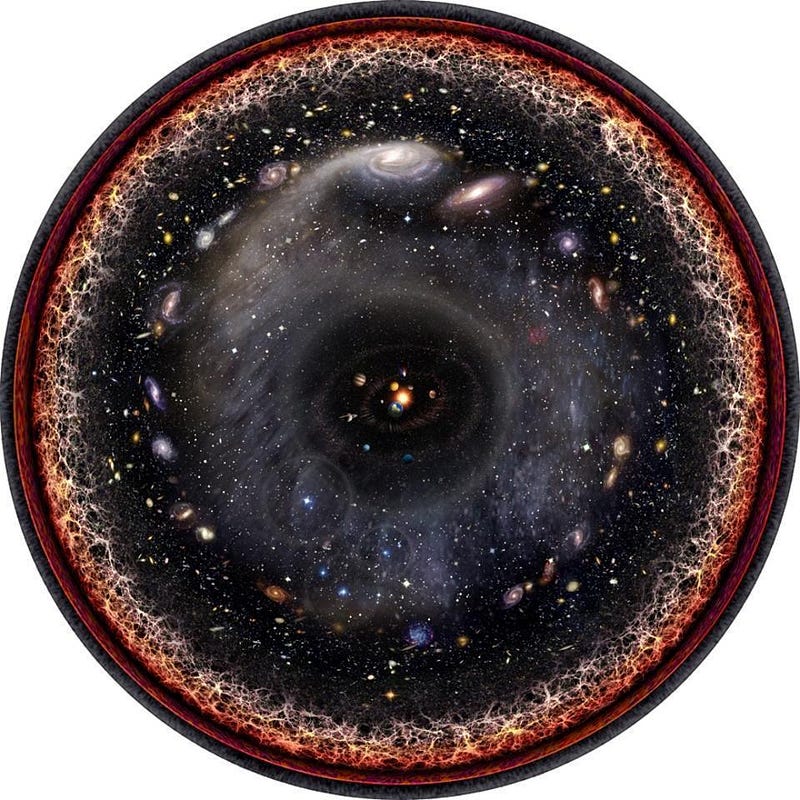

Cosmology’s ultimate goal is perhaps the most ambitious of any scientific field: to understand the birth, growth, and evolution of the entire Universe. This includes every particle, antiparticle, and quantum of energy, how they interact, and how the fabric of spacetime evolves alongside them. In principle, if you can write down the initial conditions describing the Universe at some early time (including what it’s made of, how those contents are distributed, and what the laws of physics are), you can simulate what it will look like at any point in the future.

In practice, however, this is an enormously difficult task. For some calculations, connecting our theoretical predictions to observable phenomena is straightforward. In other instances, that connection is much harder to make. These connections provide the best observational tests of dark matter, which today makes up about 27% of the Universe’s total energy content. But there’s one test in particular that dark matter has failed over and over. At last, scientists might have figured out why, and the entire thing might be no more than a numerical error.

When you think about the Universe as it is today, you can immediately recognize how different it appears when you examine it on a variety of length scales. On the scale of an individual star or planet, the Universe is remarkably empty, with only the occasional solid object to run into. Planet Earth, for example, is some ~10³⁰ times denser than the cosmic average. But as we go to larger scales, the Universe begins to appear much smoother.

An individual galaxy, like the Milky Way, might be only about a few thousand times denser than the cosmic average, while if we examine the Universe on the scales of large galaxy groups or clusters (spanning some ~10-to-30 million light years), the densest regions are just a few times denser than a typical region. On the largest scales of all — of a billion light-years or more, where the largest features of the cosmic web appear — the Universe’s density is the same everywhere, down to a precision of about 0.01%.

If we model our Universe in accordance with the best theoretical expectations, as supported by the full suite of observations, we expect that it began filled with matter, antimatter, radiation, neutrinos, dark matter, and a tiny bit of dark energy. It should have begun almost perfectly uniform, with overdense and underdense regions at the 1-part-in-30,000 level.

In the earliest stages, numerous interactions all happen simultaneously:

- gravitational attraction works to grow the overdense regions,

- particle-particle and photon-particle interactions scatter (and impart momentum to) the normal matter (but not the dark matter),

- and radiation free-streams out of overdense regions that are small enough in scale, washing out structure that forms too early (on too small of a scale).

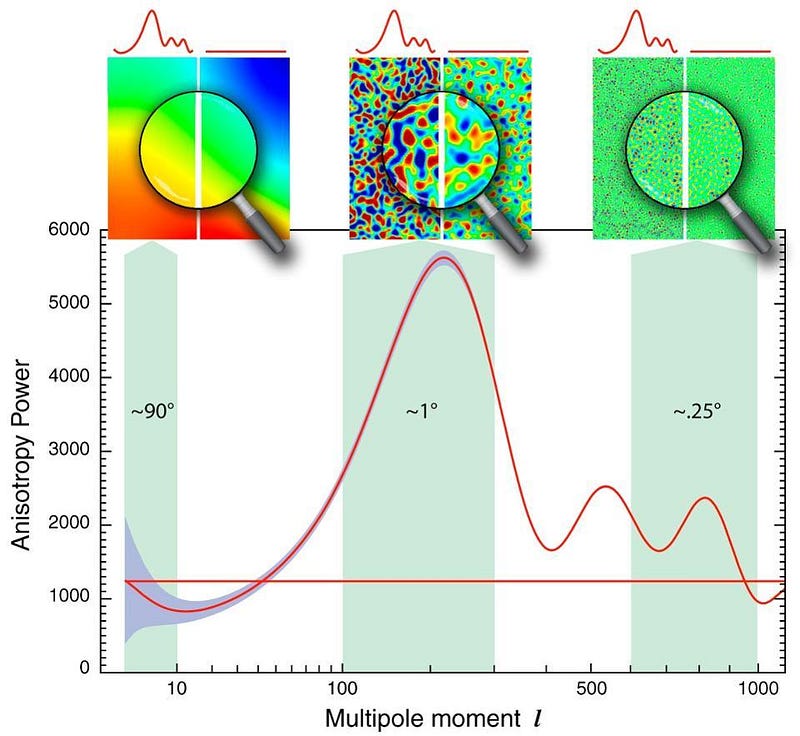

As a result, by the time the Universe is 380,000 years old, there’s already an intricate pattern of density and temperature fluctuations, where the largest fluctuations occur on a very specific scale: where the normal matter maximally collapses in and the radiation has minimal opportunity to free-stream out. On smaller angular scales, the fluctuations exhibit periodic peaks-and-valleys that decline in amplitude, just as you’d theoretically predict.

Because the density and temperature fluctuations — i.e., the departure of the actual densities from the average density — are still so small (much smaller than the average density itself), this is an easy prediction to make: you can do it analytically. This pattern of fluctuations should show up, observationally, in both the large-scale structure of the Universe (showing correlations and anti-correlations between galaxies) and in the temperature imperfections imprinted in the Cosmic Microwave Background.

In physical cosmology, these are the kinds of predictions that are the easiest to make from a theoretical perspective. You can very easily calculate how a perfectly uniform Universe, with the same exact density everywhere (even if it’s mixed between normal matter, dark matter, neutrinos, radiation, dark energy, etc.), will evolve: that’s how you calculate how your background spacetime will evolve, dependent on what’s in it.
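As a rough illustration of that background calculation, the sketch below integrates the Friedmann equation for a perfectly uniform Universe to estimate its present age. The parameter values (`H0`, `OMEGA_M`, `OMEGA_R`, `OMEGA_L`) are assumed round numbers chosen for illustration, not figures taken from this article, and the function names are hypothetical.

```python
import math

# Assumed, illustrative parameters for a spatially flat universe (not from this article).
H0 = 67.7          # Hubble constant today, km/s/Mpc
OMEGA_M = 0.31     # normal matter + dark matter
OMEGA_R = 9.0e-5   # radiation (photons + light neutrinos)
OMEGA_L = 1.0 - OMEGA_M - OMEGA_R  # dark energy, fixed by flatness

KM_PER_MPC = 3.0857e19
SECONDS_PER_GYR = 3.1557e16


def hubble_rate(a):
    """Expansion rate H(a) in km/s/Mpc at scale factor a (a = 1 today)."""
    return H0 * math.sqrt(OMEGA_R / a**4 + OMEGA_M / a**3 + OMEGA_L)


def age_gyr(a_end=1.0, steps=100_000):
    """Age at scale factor a_end: integrate dt = da / (a * H(a)) with the midpoint rule."""
    da = a_end / steps
    total = 0.0
    for i in range(steps):
        a = (i + 0.5) * da
        total += da / (a * hubble_rate(a))
    # total is in Mpc * s / km; convert to billions of years
    return total * KM_PER_MPC / SECONDS_PER_GYR


if __name__ == "__main__":
    print(f"Age today: {age_gyr():.2f} Gyr")
```

Changing the assumed contents changes the expansion history, which is the sense in which the background evolution depends on what is in the Universe.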

You can add in imperfections on top of this background, too. You can extract very accurate approximations by modeling the density at any point by the average density plus a tiny imperfection (either positive or negative) superimposed atop it. So long as the imperfections remain small compared to the average (background) density, the calculations for how these imperfections evolve remain easy. When this approximation is valid, we say that we’re in the linear regime, and these calculations can be done by human hands, without the need for a numerical simulation.
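To make "small compared to the average" concrete, here is a minimal sketch that computes the fractional overdensity for the rough figures quoted earlier in this piece and labels each one. The `0.1` cutoff is an assumed rule of thumb, not a sharp physical boundary, and the function names are hypothetical.

```python
def density_contrast(density, mean_density):
    """Fractional overdensity: delta = (rho - rho_mean) / rho_mean."""
    return (density - mean_density) / mean_density


def is_linear(delta, threshold=0.1):
    """Treat |delta| well below 1 as 'linear'; the 0.1 cutoff is an assumption."""
    return abs(delta) < threshold


# Densities in units of the cosmic mean, using the approximate figures from the text.
examples = {
    "billion-light-year scales (0.01% variation)": 1.0001,
    "early Universe (1 part in 30,000)": 1.0 + 1.0 / 30_000,
    "densest cluster regions (a few times the mean)": 3.0,
    "Milky Way-like galaxy (a few thousand times the mean)": 3000.0,
}

for label, rho in examples.items():
    delta = density_contrast(rho, 1.0)
    regime = "linear" if is_linear(delta) else "non-linear"
    print(f"{label}: delta = {delta:.3g} -> {regime}")
```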

This approximation is valid at early times, on large cosmic scales, and where density fluctuations remain small compared to the average overall cosmic density. This means that measuring the Universe on the largest cosmic scales should be a very strong, robust test of dark matter and our model of the Universe. It should come as no surprise that the predictions of dark matter, particularly on the scales of galaxy clusters and larger, are amazingly successful.

However, on the smaller cosmic scales — particularly on the scales of individual galaxies and smaller — that approximation is no longer any good. Once the density fluctuations in the Universe become large compared to the background density, you can no longer do the calculations by hand. Instead, you need numerical simulations to help you out as you transition from the linear to the non-linear regime.

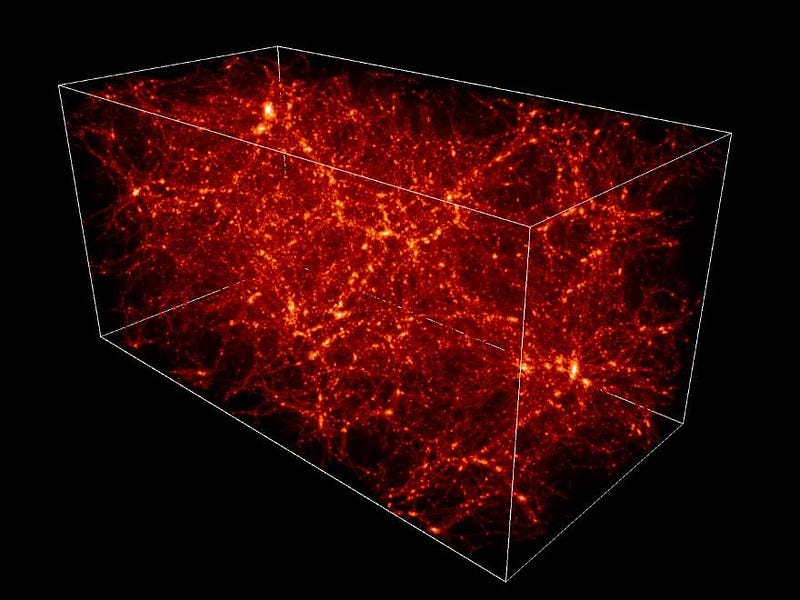

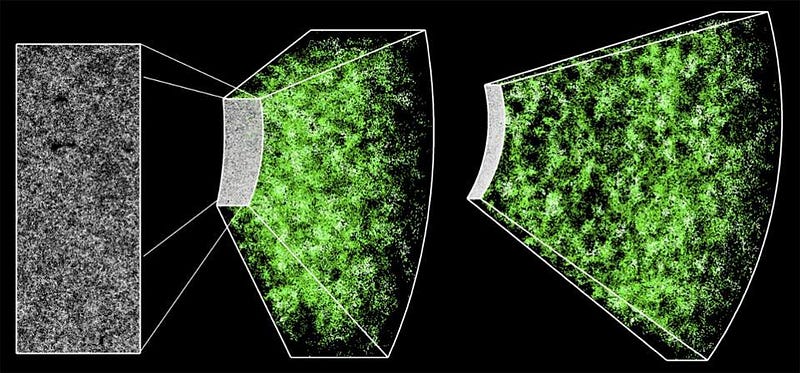

In the 1990s, the first simulations started to come out that went deep into the realm of non-linear structure formation. They enabled us to understand how structure formation would proceed on the relatively small cosmic scales that are affected by the temperature of dark matter: whether it was born moving quickly or slowly relative to the speed of light. From this information (and observations of small-scale structure, such as the absorption features that intervening hydrogen gas clouds imprint on quasar light), we were able to determine that dark matter must be cold, not hot (and not warm), to reproduce the structures we see.

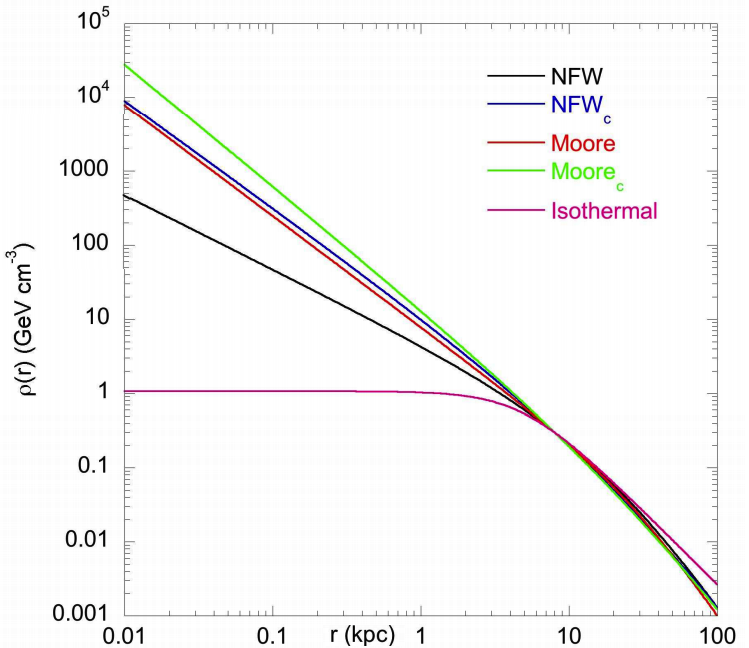

The 1990s also saw the first simulations of dark matter halos that form under the influence of gravity. The various simulations had a wide range of properties, but they all exhibited some common features, including:

- a density that reaches a maximum in the center,

- that falls off at a certain rate (as ρ ~ r^-1 to r^-1.5) until you reach a certain critical distance that depends on the total halo mass,

- and then that “turns over” to fall off at a different, steeper rate (as ρ ~ r^-3), until it falls below the average cosmic density.
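The shape described in this list can be sketched with a double power law. The functional form below is the widely used Navarro-Frenk-White-style profile, which this article does not name, so treat it as an assumed stand-in; `r_s`, `rho_s`, and `inner_slope` are illustrative parameters in arbitrary units.

```python
import math


def halo_density(r, r_s=1.0, rho_s=1.0, inner_slope=1.0):
    """Cuspy profile: rho ~ r^-inner_slope for r << r_s, rho ~ r^-3 for r >> r_s."""
    x = r / r_s
    return rho_s / (x**inner_slope * (1.0 + x) ** (3.0 - inner_slope))


def log_slope(r, **kwargs):
    """Numerical d(ln rho) / d(ln r), using a small centered step in ln r."""
    eps = 1e-4
    hi = math.log(halo_density(r * math.exp(eps), **kwargs))
    lo = math.log(halo_density(r * math.exp(-eps), **kwargs))
    return (hi - lo) / (2.0 * eps)


# Far inside, at, and far outside the turnover radius r_s.
for r in (1e-3, 1.0, 1e3):
    print(f"r/r_s = {r:g}: logarithmic slope = {log_slope(r):.2f}")
```

A "core-like" halo, by contrast, would have a logarithmic slope approaching zero at small radii instead of staying near -1.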

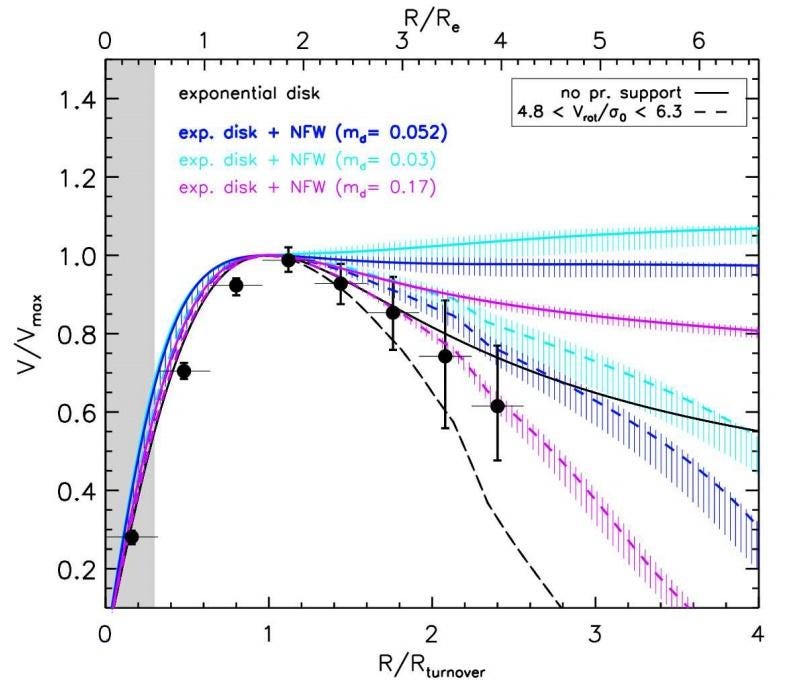

These simulations predict what are known as “cuspy halos,” because the density keeps rising toward the center, well inside the turnover point, in galaxies of all sizes, including the smallest ones. However, the low-mass galaxies we observe don’t exhibit rotational motions (or velocity dispersions) that are consistent with these simulations; they are much better fit by “core-like halos,” or halos with a constant density in the innermost regions.

This problem, known as the core-cusp problem in cosmology, is one of the oldest and most controversial for dark matter. In theory, matter should fall into a gravitationally bound structure and undergo what’s known as violent relaxation, where a large number of interactions cause the heaviest-mass objects to fall towards the center (becoming more tightly bound) while the lower-mass ones get exiled to the outskirts (becoming more loosely bound) and can even get ejected entirely.

Since phenomena resembling what violent relaxation predicts were seen in the simulations, and all the different simulations shared these features, we assumed that they were representative of real physics. However, it’s also possible that they don’t represent real physics, but rather a numerical artifact inherent to the simulation itself.

You can think of this the same way you think of approximating a square wave (where the value of your curve periodically switches between +1 and -1, with no in-between values) by a series of sine wave curves: an approximation known as a Fourier series. As you add progressively greater numbers of terms with ever-increasing frequencies (and progressively smaller amplitudes), the approximation gets better and better. You might be tempted to think that if you added up an infinitely large number of terms, you’d get an arbitrarily good approximation, with vanishingly small errors.

Only, that’s not true at all. Do you notice how, even as you add more and more terms to your Fourier series, you still see a very large overshoot anytime you transition from a value of +1 to -1 or a value of -1 to +1? No matter how many terms you add, that overshoot will always be there. Not only that, but it doesn’t asymptote to 0 as you add more and more terms, but rather to a substantial value (around 18%) that never gets any smaller. That’s a numerical effect of the technique you use, not a real effect of the actual square wave.
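You can check this behavior yourself with a short sketch, assuming the standard Fourier series of a ±1 square wave (odd sine harmonics only). The function names are hypothetical; the code simply scans the region just past the jump at x = 0 for the highest point of the partial sum.

```python
import math


def square_wave_partial_sum(x, n_terms):
    """Sum of the first n_terms odd harmonics of a +1/-1 square wave's Fourier series."""
    return (4.0 / math.pi) * sum(
        math.sin((2 * k + 1) * x) / (2 * k + 1) for k in range(n_terms)
    )


def gibbs_overshoot(n_terms, samples=400):
    """Largest amount by which the partial sum exceeds +1 just after the jump at x = 0."""
    # The first (and largest) peak sits at x = pi / (2 * n_terms), so scan (0, pi / n_terms].
    xs = (i * math.pi / (samples * n_terms) for i in range(1, samples + 1))
    return max(square_wave_partial_sum(x, n_terms) for x in xs) - 1.0


for n in (10, 100, 1000):
    print(f"{n:5d} terms: overshoot = {gibbs_overshoot(n):.4f}")
```

Adding terms squeezes the overshoot closer to the jump, but its height stays at roughly 18% of the wave's amplitude.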

Remarkably, a new paper by A.N. Baushev and S.V. Pilipenko, just published in Astronomy & Astrophysics, asserts that the central cusps seen in dark matter halos are themselves numerical artifacts of how our simulations deal with many-particle systems interacting in a small volume of space. In particular, the “core” of the halo that forms does so because of the specifics of the algorithm that approximates the gravitational force, not because of the actual effects of violent relaxation.

In other words, the dark matter densities we derive inside each halo from simulations may not actually have anything to do with the physics governing the Universe; instead, they may simply be numerical artifacts of the methods we’re using to simulate the halos themselves. As the authors themselves state,

“This result casts doubts on the universally adopted criteria of the simulation reliability in the halo center. Though we use a halo model, which is theoretically proved to be stationary and stable, a sort of numerical ’violent relaxation’ occurs. Its properties suggest that this effect is highly likely responsible for the central cusp formation in cosmological modelling of the large-scale structure, and then the ’core-cusp problem’ is no more than a technical problem of N-body simulations.” –Baushev and Pilipenko
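To give a flavor of how an algorithm can approximate the gravitational force at small separations, the sketch below compares exact Newtonian attraction with Plummer softening, one common device in N-body codes. This is an assumed, generic illustration: it is not taken from the paper and does not reproduce the specific effect Baushev and Pilipenko analyze. `EPS` and the function names are hypothetical, in arbitrary units.

```python
def newtonian_force(r, g_m1_m2=1.0):
    """Exact inverse-square attraction between two point masses."""
    return g_m1_m2 / r**2


def softened_force(r, softening, g_m1_m2=1.0):
    """Plummer-softened attraction: stays finite as r -> 0 instead of diverging."""
    return g_m1_m2 * r / (r**2 + softening**2) ** 1.5


EPS = 0.01  # assumed softening length

for r in (1.0, 0.1, 0.01, 0.001):
    ratio = softened_force(r, EPS) / newtonian_force(r)
    print(f"r = {r:g}: softened / Newtonian = {ratio:.3g}")
```

At separations much larger than the softening length the two forces agree closely, while at smaller separations the simulated force is a deliberate approximation. Choices like this one are why results in the densest, innermost regions call for extra scrutiny.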

Unsurprisingly, the only problems for dark matter in cosmology occur on cosmically small scales: far into the non-linear regime of evolution. For decades, contrarians opposed to dark matter have latched onto these small-scale problems, convinced that they’ll expose the flaws inherent to dark matter and reveal a deeper truth.

If this new paper is correct, however, the only flaw is that cosmologists have taken one of the earliest simulation results (that dark matter forms halos with cusps at the center) and believed its conclusions prematurely. In science, it’s important to check your work and to have its results checked independently. But if everyone’s making the same error, these checks aren’t independent at all.

Disentangling whether these simulated results are due to the actual physics of dark matter or the numerical techniques we’ve chosen could put an end to the biggest debate over dark matter. If it’s due to actual physics after all, the core-cusp problem will remain a point of tension for dark matter models. But if it’s due to the technique we use to simulate these halos, one of cosmology’s biggest controversies could evaporate overnight.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.