Ask Ethan: What Could Solve The Cosmic Controversy Over The Expanding Universe?

Two independent techniques give precise but incompatible answers. Here’s how to resolve it.

If you didn’t know anything about the Universe beyond our own galaxy, there are two different pathways you could take to figure out how it was changing. You could measure the light from well-understood objects at a wide variety of distances, and deduce how the fabric of our Universe changes as the light travels through space before arriving at our eyes. Alternatively, you could identify an ancient signal from the Universe’s earliest stages, and measure its properties to learn about how spacetime changes over time. These two methods are robust, precise, and in conflict with one another. Luc Bourhis wants to know what the resolution might be, asking:

As you pointed out in several of your columns, the cosmic [distance] ladder and the study of the CMBR give incompatible values for the Hubble constant. What are the best explanations cosmologists have come up with to reconcile them?

Let’s start by exploring the problem, and then seeing how we might resolve it.

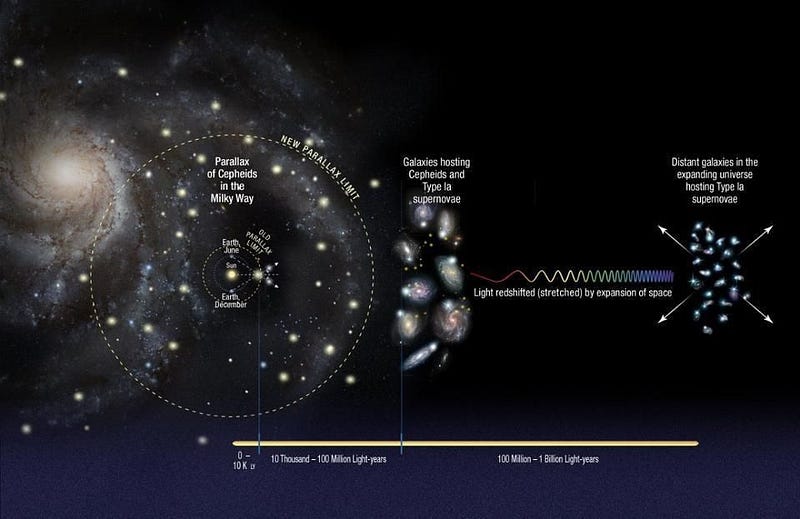

The story of the expanding Universe goes back nearly 100 years, to when Edwin Hubble first discovered individual stars of a specific type — Cepheid variable stars — within the spiral nebulae seen throughout the night sky. All at once, this demonstrated that these nebulae were individual galaxies, allowed us to calculate the distance to them, and by adding one additional piece of evidence, revealed that the Universe was expanding.

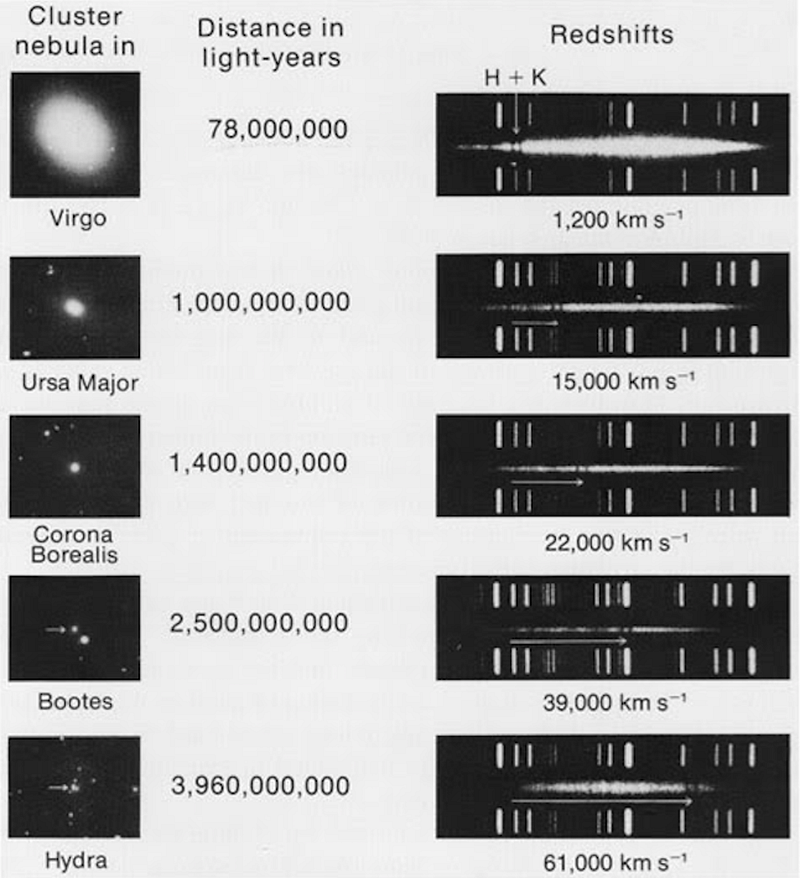

That additional evidence was discovered a decade prior by Vesto Slipher, who noticed that the spectral lines of these same spiral nebulae were severely redshifted on average. Either they were all moving away from us, or the space between us and them was expanding, just as Einstein’s theory of spacetime predicted. As more and better data came in, the conclusion became overwhelming: the Universe was expanding.

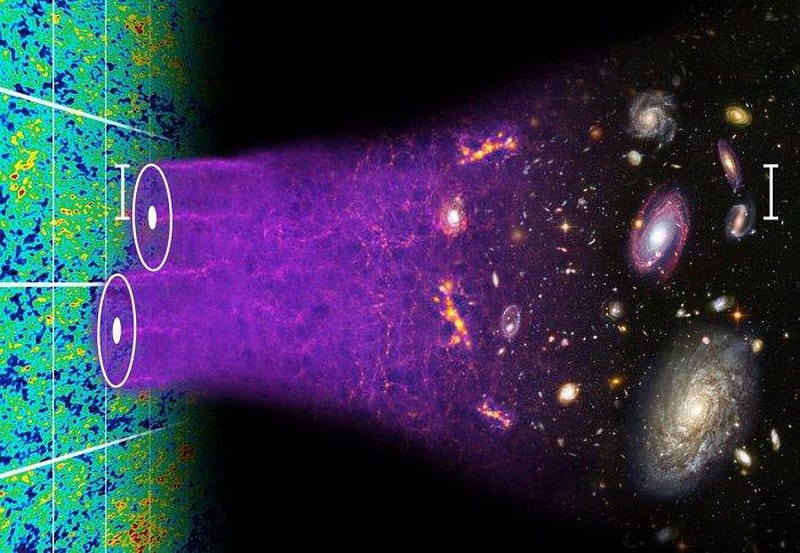

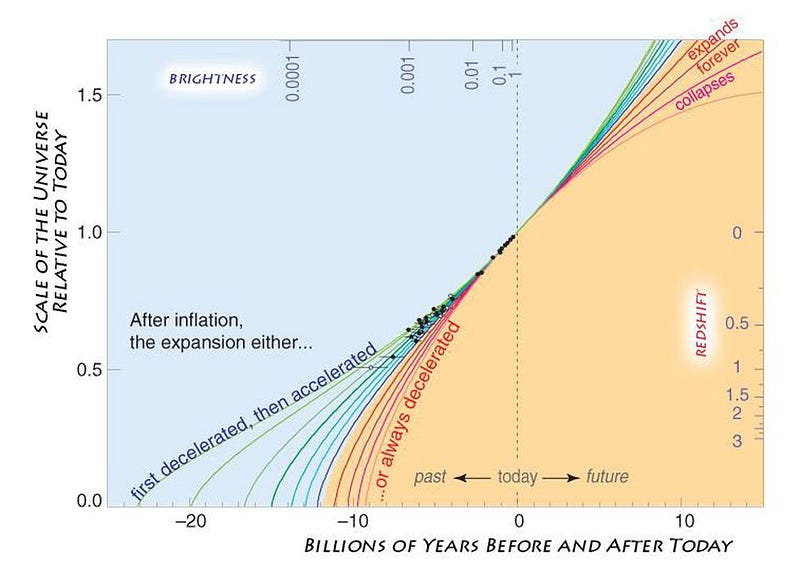

Once we accepted that the Universe was expanding, it became apparent that the Universe was smaller, hotter, and denser in the past. Light, from wherever it’s emitted, must travel through the expanding Universe in order to arrive at our eyes. When we observe a well-understood object, we can use its light both to determine the object’s distance and to measure how much that light has been redshifted.

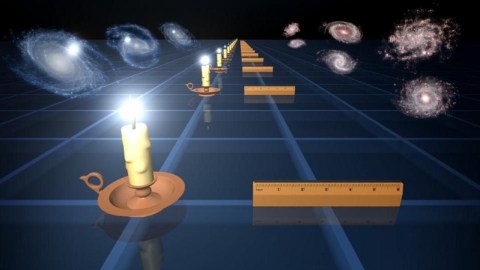

This distance-redshift relation allows us to reconstruct the expansion history of the Universe and to measure its present expansion rate. The distance ladder method was thus born. At present, there are perhaps a dozen different types of objects we understand well enough to use as distance indicators (or standard candles) to teach us how the Universe has expanded over its history. The different methods all agree, yielding a value of 73 km/s/Mpc with an uncertainty of just 2–3%.
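
To make the distance-redshift idea concrete, here is a minimal Python sketch. It assumes the low-redshift approximation v ≈ cz and uses synthetic (made-up) galaxies rather than real measurements: it converts observed H-alpha wavelengths into redshifts, then fits the slope of recession velocity versus distance, which is the Hubble constant.

```python
# Minimal sketch: estimating H0 from a low-redshift distance-redshift relation.
# Assumptions (illustrative only, not real data): the galaxies below are
# synthetic, generated to follow v = H0 * d with H0 = 73 km/s/Mpc, and we use
# the approximation v ~ c * z, which is only valid for z << 1.

C_KM_S = 299_792.458      # speed of light, km/s
H_ALPHA_REST_NM = 656.28  # rest-frame wavelength of the H-alpha line, nm


def redshift(observed_nm: float, rest_nm: float = H_ALPHA_REST_NM) -> float:
    """z = (lambda_observed - lambda_emitted) / lambda_emitted."""
    return (observed_nm - rest_nm) / rest_nm


def fit_hubble_constant(distances_mpc, redshifts):
    """Least-squares slope of v = H0 * d through the origin, in km/s/Mpc."""
    velocities = [C_KM_S * z for z in redshifts]
    num = sum(d * v for d, v in zip(distances_mpc, velocities))
    den = sum(d * d for d in distances_mpc)
    return num / den


# Hypothetical distances (as if from Cepheids or Type Ia supernovae), in Mpc.
distances = [20.0, 45.0, 80.0, 120.0, 200.0]
# Synthetic observed H-alpha wavelengths consistent with H0 = 73 km/s/Mpc.
observed = [H_ALPHA_REST_NM * (1 + 73.0 * d / C_KM_S) for d in distances]

z_values = [redshift(lam) for lam in observed]
h0 = fit_hubble_constant(distances, z_values)
print(f"H0 ~ {h0:.1f} km/s/Mpc")
```

Because the synthetic data were generated with H0 = 73 km/s/Mpc, the fit simply recovers that value; real distance-ladder analyses must also handle peculiar velocities, calibration uncertainties on every rung, and higher-order redshift terms.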

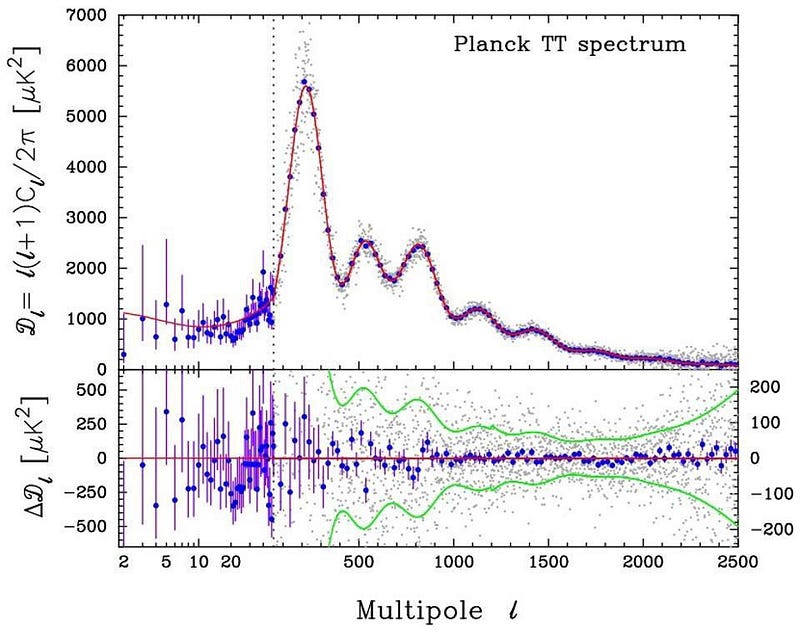

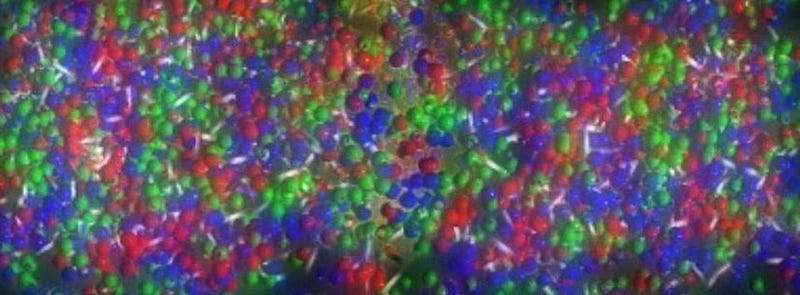

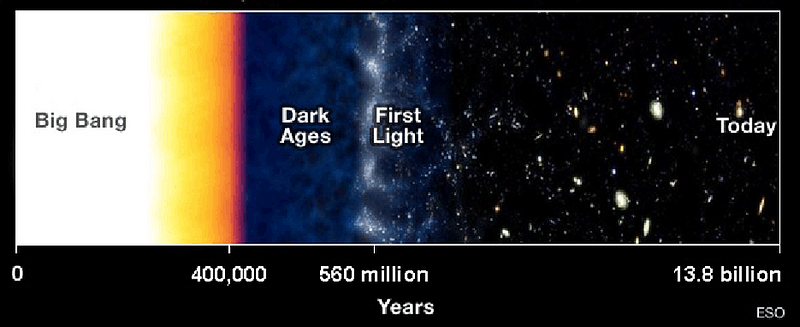

On the other hand, if we go all the way back to the earliest stages of the Big Bang, we know that the Universe contained not only normal matter and radiation, but a substantial amount of dark matter as well. Normal matter and radiation interact with one another very frequently through collisions and scattering, but dark matter behaves differently: its interaction cross-section is effectively zero.

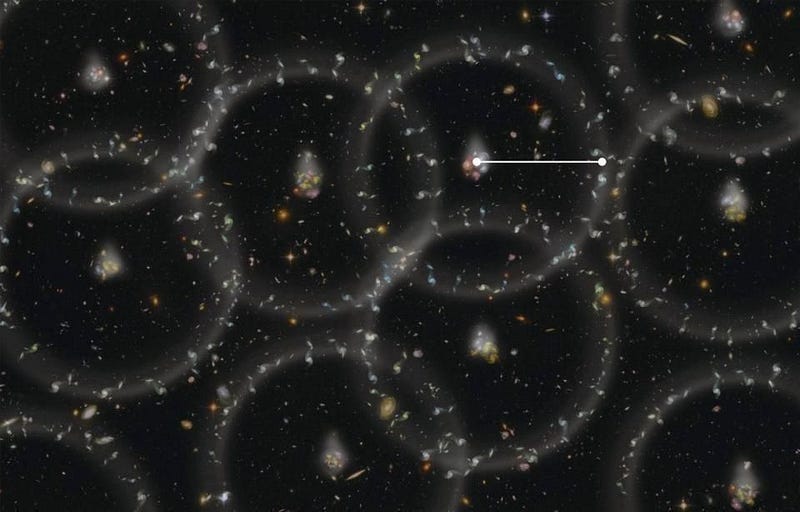

This leads to a fascinating consequence: the normal matter tries to collapse gravitationally, but the photons push it back out, while the dark matter is unaffected by that radiation pressure. These oscillations, known as baryon acoustic oscillations (BAO), imprint a series of peaks and valleys on the large-scale structure of the Universe, while the dark matter, which does not take part in them, remains smoothly distributed beneath them.

These fluctuations show up on a variety of angular scales in the cosmic microwave background (CMB), and also leave an imprint in the clustering of galaxies that occurs later on. These relic signals, originating from the earliest times, allow us to reconstruct how quickly the Universe is expanding, among other properties. From both the CMB and BAO, we get a very different value: 67 km/s/Mpc, with an uncertainty of only 1%.
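
How does an early relic turn into an expansion rate? One simplified version of the logic treats the acoustic scale as a standard ruler: the CMB measures the angle θ* subtended by the sound horizon r* at last scattering, so the distance to that surface is D_M = r*/θ*, and in a flat Universe with a known matter density only one value of H0 reproduces that distance. The Python sketch below assumes illustrative Planck-like inputs (r* ≈ 144.4 Mpc, θ* ≈ 0.010411 rad, ω_m = Ω_m h² ≈ 0.143) and a cosmological constant; real analyses fit all of these jointly from the full CMB power spectrum.

```python
import math

# Assumed, illustrative Planck-like inputs (not a full CMB analysis).
C_OVER_100 = 2997.92458   # c / (100 km/s/Mpc), in Mpc
OMEGA_M = 0.143           # physical matter density, Omega_m * h^2
OMEGA_R = 4.18e-5         # physical radiation density (photons + neutrinos)
R_STAR_MPC = 144.4        # sound horizon at last scattering, Mpc
THETA_STAR = 0.010411     # measured acoustic angle, radians
Z_STAR = 1090.0           # approximate redshift of last scattering


def comoving_distance_mpc(h: float, n_steps: int = 4000) -> float:
    """Comoving distance to last scattering in a flat Universe, in Mpc.

    D_M = (c / 100 km/s/Mpc) * integral da / sqrt(w_m a + w_r + w_de a^4),
    with a cosmological constant filling the rest: w_de = h^2 - w_m - w_r.
    """
    omega_de = h * h - OMEGA_M - OMEGA_R
    a_star = 1.0 / (1.0 + Z_STAR)

    def f(a: float) -> float:
        return 1.0 / math.sqrt(OMEGA_M * a + OMEGA_R + omega_de * a**4)

    # Composite Simpson's rule from a_star to a = 1 (n_steps must be even).
    step = (1.0 - a_star) / n_steps
    total = f(a_star) + f(1.0)
    for i in range(1, n_steps):
        total += (4 if i % 2 else 2) * f(a_star + i * step)
    return C_OVER_100 * total * step / 3.0


def infer_h(target_mpc: float, lo: float = 0.5, hi: float = 0.9) -> float:
    """Bisection for h: at fixed omega_m, D_M shrinks as h grows."""
    for _ in range(50):
        mid = 0.5 * (lo + hi)
        if comoving_distance_mpc(mid) > target_mpc:
            lo = mid  # predicted distance too long, so h must be larger
        else:
            hi = mid
    return 0.5 * (lo + hi)


d_m = R_STAR_MPC / THETA_STAR  # standard-ruler relation: D_M = r_* / theta_*
h = infer_h(d_m)
print(f"D_M ~ {d_m:.0f} Mpc -> H0 ~ {100 * h:.1f} km/s/Mpc")
```

With these inputs the bisection lands close to 67 km/s/Mpc. The key point is that the inferred H0 depends on the assumed early-Universe physics through r*, which is why several of the proposed solutions target the sound horizon.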

Because there are many parameters we don’t know a priori about the Universe, such as its age, the normal matter density, the dark matter density, or the dark energy density, we have to allow them all to vary together when constructing our best-fit models of the Universe. When we do, a number of possible pictures arise, but one thing remains unambiguously true: the distance ladder and early relic methods are mutually incompatible.
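
To see why the two values count as incompatible, a back-of-the-envelope Python estimate treats each measurement as an independent Gaussian and divides their difference by the combined uncertainty. Taking 2.5% for the ladder (the midpoint of the quoted 2–3%) is an assumption, and this naive formula ignores the correlated, multi-parameter fits described above.

```python
import math


def tension_sigma(h1: float, sigma1: float, h2: float, sigma2: float) -> float:
    """Naive Gaussian tension: |h1 - h2| / sqrt(sigma1^2 + sigma2^2)."""
    return abs(h1 - h2) / math.hypot(sigma1, sigma2)


# Central values from the text; the fractional uncertainties are assumptions.
ladder_h0, ladder_frac = 73.0, 0.025  # distance ladder, "2-3%"
relic_h0, relic_frac = 67.0, 0.01     # CMB + BAO, "~1%"

sigma_now = tension_sigma(ladder_h0, ladder_h0 * ladder_frac,
                          relic_h0, relic_h0 * relic_frac)
print(f"current tension ~ {sigma_now:.1f} sigma")

# If the central values stayed put, how precise must the ladder become?
for frac in (0.02, 0.015, 0.01):
    s = tension_sigma(ladder_h0, ladder_h0 * frac, relic_h0, relic_h0 * relic_frac)
    print(f"ladder uncertainty {frac:.1%}: {s:.1f} sigma")
```

With these inputs the estimate comes out at roughly 3 sigma, and the loop shows that, if the central values held, shrinking the ladder uncertainty toward about 1% would push it past the 5-sigma threshold.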

There are three possible explanations for this discrepancy:

- The “early relics” group is mistaken. There’s a fundamental error in their approach to this problem, and it’s biasing their results towards unrealistically low values.

- The “distance ladder” group is mistaken. There’s some sort of systematic error in their approach, biasing their results towards incorrect, high values.

- Both groups are correct, and there is some sort of new physics at play responsible for the two groups obtaining different results.

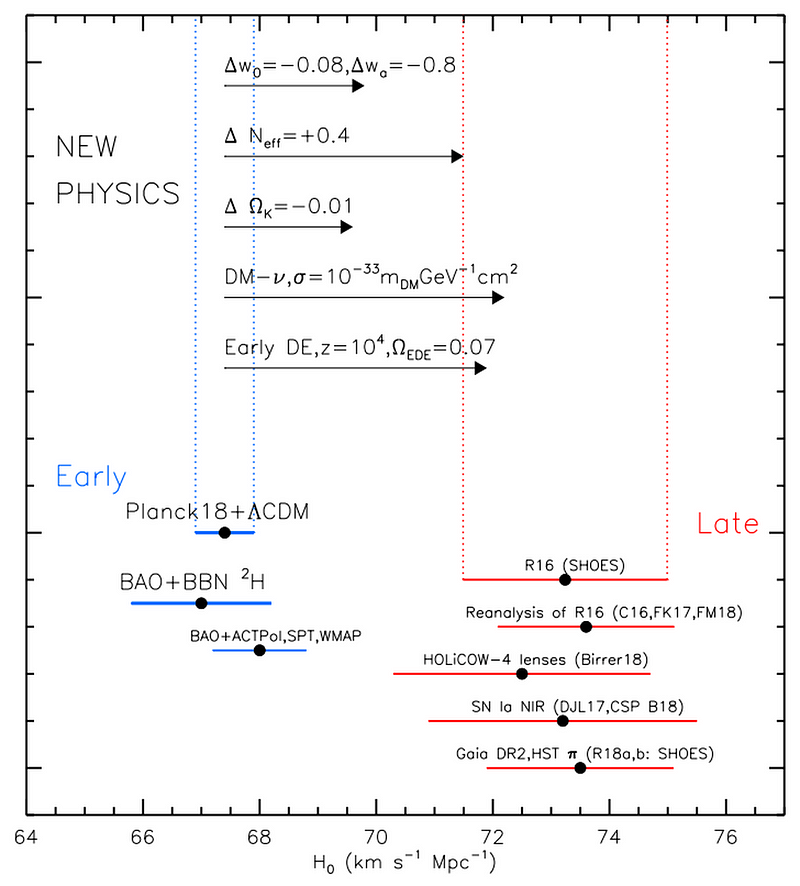

There are numerous very good reasons indicating that the results of both groups ought to be believed. If that’s the case, there has to be some sort of new physics involved to explain what we’re seeing. Not just any new physics will do: living in a local cosmic void is disfavored, as is adding in a few percentage points of spatial curvature. Instead, here are the five best explanations cosmologists are considering right now.

1.) Dark energy gets more powerfully negative over time. To the limits of our best observations, dark energy appears to be consistent with a cosmological constant: a form of energy inherent to space itself. As the Universe expands, more space gets created, and since the dark energy density remains constant, the total amount of dark energy contained within our Universe increases along with the Universe’s volume.

But this is not mandatory. Dark energy could either strengthen or weaken over time. If it’s truly a cosmological constant, there’s an absolute relationship between its energy density (ρ) and the negative pressure (p) it exerts on the Universe: p = -ρ. But there is some wiggle room, observationally: the pressure could be anywhere from -0.92ρ to about -1.18ρ. If the pressure gets more negative over time, this could yield a smaller value with the early relics method and a larger value with the distance ladder method. WFIRST should measure this relationship between ρ and p down to about the 1% level, which should constrain, rule out, or discover the truth of this possibility.
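
The observational wiggle room is usually phrased through the equation-of-state parameter w = p/ρ, so a cosmological constant has w = -1. As a sketch, assuming w stays constant in time (a simplification, since the scenario above has the pressure changing over time), the standard fluid-equation result ρ ∝ a^(-3(1+w)) shows how the quoted range maps onto dark energy that weakens (w > -1) or strengthens (w < -1) as the Universe expands.

```python
def dark_energy_density_ratio(a: float, w: float) -> float:
    """rho_DE(a) / rho_DE(today) for a constant equation of state w = p / rho.

    From the fluid equation, rho scales as a^(-3 (1 + w)); a = 1 today and
    a < 1 in the past. Constant w is an assumption made for illustration.
    """
    return a ** (-3.0 * (1.0 + w))


for w in (-0.92, -1.0, -1.18):
    # a = 0.5 corresponds to redshift z = 1, when the Universe was half its present size.
    ratio = dark_energy_density_ratio(0.5, w)
    print(f"w = {w:+.2f}: dark energy density at z = 1 was {ratio:.3f} x today's")
```

For w = -1 the density never changes; for w = -0.92 it was higher in the past (dark energy weakens over time), and for w = -1.18 it was lower in the past (dark energy strengthens over time).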

2.) Keeping neutrinos strongly coupled to matter and radiation for longer than expected. Conventionally, neutrinos interact with the other forms of matter and radiation in the Universe only until the Universe cools to a temperature of around 10 billion K. At temperatures cooler than this, their interaction cross-section is too low to be important. This is expected to occur just a second after the Big Bang begins.
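
The link between "10 billion K" and "about a second" comes from the time-temperature relation of a radiation-dominated Universe. The Python sketch below uses the standard approximation t ≈ 2.42 s × g*^(-1/2) × (T / 1 MeV)^(-2), assuming g* = 10.75 relativistic degrees of freedom (photons, electrons, positrons, and three neutrino species) around the time of neutrino decoupling.

```python
import math

K_B_EV_PER_K = 8.617333262e-5  # Boltzmann constant, eV/K


def time_at_temperature(temp_k: float, g_star: float = 10.75) -> float:
    """Approximate age (s) of a radiation-dominated Universe at temperature T.

    t ~ 2.42 s * g_star^(-1/2) * (T / 1 MeV)^(-2); g_star counts the
    relativistic degrees of freedom (assumed 10.75 here).
    """
    temp_mev = temp_k * K_B_EV_PER_K / 1e6  # convert kelvin to MeV
    return 2.42 / math.sqrt(g_star) / temp_mev**2


print(f"T = 1e10 K  ->  t ~ {time_at_temperature(1e10):.2f} s")
```

For T = 10^10 K this gives roughly one second, consistent with the decoupling time quoted above; because t scales as T^(-2), keeping neutrinos coupled for thousands of years requires new interactions rather than just a slightly different temperature threshold.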

But if the neutrinos stay strongly coupled to the matter and radiation for longer (for thousands of years in the early Universe instead of just ~1 second), this could accommodate a Universe with a faster expansion rate than the early relics teams normally consider. This could arise if neutrinos have an additional self-interaction beyond what we presently think, which is compelling considering that the Standard Model alone cannot explain the full suite of neutrino observations. Further neutrino studies at relatively low and intermediate energies could probe this scenario.

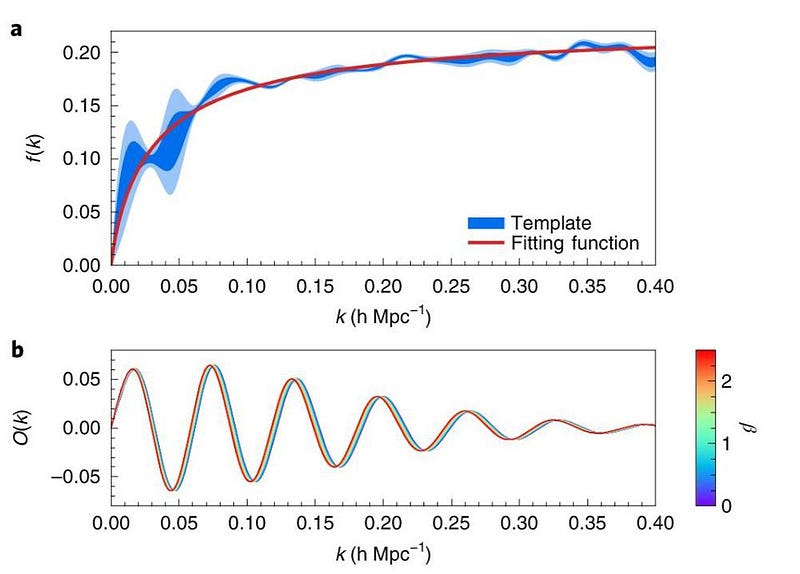

3.) The size of the cosmic sound horizon is different from what the early relics team has concluded. When we talk about photons, normal matter, and dark matter, there is a characteristic distance scale set by their interactions, the size and age of the Universe, and the rate at which signals can travel through the early Universe. Those acoustic peaks and valleys we see in the CMB and in the BAO data, for example, are manifestations of that sound horizon.

But what if we’ve miscalculated or incorrectly determined the size of that horizon? If you calibrate the sound horizon with distance ladder methods, such as Type Ia supernovae, you obtain a sound horizon that’s significantly smaller than the one you get if you calibrate it traditionally, with CMB data. If the sound horizon actually evolves from the very early Universe to the present day, this could fully explain the discrepancy. Fortunately, next-generation CMB surveys, such as SPT-3G, should be able to test whether such changes have occurred in our Universe’s past.
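
The sound horizon is the comoving distance a sound wave in the photon-baryon fluid can travel before baryons decouple: r_s = ∫ c_s / (a² H) da, with c_s = c / √(3(1 + R)) and R = 3ρ_b / (4ρ_γ). The Python sketch below evaluates that integral for assumed, illustrative Planck-like densities (and an assumed drag redshift of 1060), ignoring dark energy, which is negligible that early. A second step, under the rough assumption that BAO data fix the product r_s × H0, shows how the implied sound horizon scales if one adopts the distance-ladder H0 instead.

```python
import math

C_OVER_100 = 2997.92458  # c / (100 km/s/Mpc), in Mpc

# Illustrative Planck-like physical densities (omega = Omega * h^2); assumed values.
OMEGA_B = 0.0224       # baryons
OMEGA_M = 0.143        # all matter (baryons + dark matter)
OMEGA_GAMMA = 2.47e-5  # photons
OMEGA_R = OMEGA_GAMMA * (1 + 0.2271 * 3.046)  # photons + three neutrino species
Z_DRAG = 1060.0        # approximate redshift at which baryons stop feeling photon drag


def sound_horizon_mpc(n_steps: int = 20_000) -> float:
    """Comoving sound horizon r_s = integral of c_s / (a^2 H) da, a = 0 to a_drag."""
    a_drag = 1.0 / (1.0 + Z_DRAG)

    def integrand(a: float) -> float:
        r = 3.0 * OMEGA_B * a / (4.0 * OMEGA_GAMMA)  # baryon loading R
        # In this era, a^2 H / (100 km/s/Mpc) = sqrt(omega_m * a + omega_r).
        return 1.0 / (math.sqrt(3.0 * (1.0 + r)) * math.sqrt(OMEGA_M * a + OMEGA_R))

    # Composite Simpson's rule (n_steps must be even).
    step = a_drag / n_steps
    total = integrand(0.0) + integrand(a_drag)
    for i in range(1, n_steps):
        total += (4 if i % 2 else 2) * integrand(i * step)
    return C_OVER_100 * total * step / 3.0


r_s = sound_horizon_mpc()
# Low-redshift BAO measure distances in units of r_s, roughly pinning r_s * H0.
r_s_for_ladder = r_s * 67.0 / 73.0
print(f"r_s ~ {r_s:.0f} Mpc; implied with H0 = 73 instead of 67: ~ {r_s_for_ladder:.0f} Mpc")
```

The early-Universe calculation lands near 147 Mpc, while demanding H0 = 73 km/s/Mpc with the same BAO data would require a sound horizon several percent shorter; that gap is what this class of solutions tries to close.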

4.) Dark matter and neutrinos could interact with one another. Dark matter, according to every indication we have, only interacts gravitationally: it doesn’t collide with, annihilate with, or experience forces exerted by any other forms of matter or radiation. But in truth, we only have limits on possible interactions; we haven’t ruled them out entirely.

What if dark matter and neutrinos interact and scatter off of one another? If the dark matter is very massive, an interaction between a very heavy particle (like a dark matter particle) and a very light particle (like a neutrino) could speed up the light particles, giving them extra kinetic energy. This would function as a type of energy injection in the Universe. Depending on when and how it occurs, it could cause a discrepancy between early and late measurements of the expansion rate, perhaps even enough to fully account for the differing, technique-dependent measurements.

5.) A significant amount of dark energy existed not only at late (modern) times, but at early times as well. If dark energy appears in the early Universe (at the level of a few percent) but then decays away before the CMB is emitted, this could fully explain the tension between the two methods of measuring the expansion rate of the Universe. Again, improved future measurements of both the CMB and the large-scale structure of the Universe could help indicate whether this scenario describes our Universe.
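
Why would extra early energy help? More energy before recombination speeds up the early expansion, leaving sound waves less time to travel, so the sound horizon shrinks; with the measured CMB and BAO angles held fixed, a smaller ruler points toward a higher inferred H0. The Python sketch below illustrates only the first step, using a hypothetical toy parameterization assumed for illustration (a fluid whose energy stays roughly constant, then dilutes like a^-6 after a critical redshift z_c), not a specific published model. Real analyses refit every other parameter at the same time, so this shows the direction of the effect, not its final size.

```python
import math

C_OVER_100 = 2997.92458                                 # c / (100 km/s/Mpc), in Mpc
OMEGA_B, OMEGA_M, OMEGA_GAMMA = 0.0224, 0.143, 2.47e-5  # assumed Planck-like values
OMEGA_R = OMEGA_GAMMA * (1 + 0.2271 * 3.046)            # photons + three neutrino species
A_DRAG = 1.0 / (1.0 + 1060.0)                           # assumed baryon drag epoch


def ede_density(a: float, f_ede: float, a_c: float) -> float:
    """Hypothetical toy early-dark-energy density, in omega = Omega * h^2 units.

    Nearly constant for a << a_c, dilutes as a^-6 for a >> a_c, and makes up
    a fraction f_ede of the total energy density at a = a_c.
    """
    rho_rest_c = OMEGA_M / a_c**3 + OMEGA_R / a_c**4
    rho_c = f_ede / (1.0 - f_ede) * rho_rest_c
    return 2.0 * rho_c / (1.0 + (a / a_c) ** 6)


def sound_horizon_mpc(f_ede: float = 0.0, z_c: float = 3500.0,
                      n_steps: int = 20_000) -> float:
    """r_s = integral of c_s / (a^2 H) da up to the drag epoch, with optional toy EDE."""
    a_c = 1.0 / (1.0 + z_c)

    def integrand(a: float) -> float:
        r = 3.0 * OMEGA_B * a / (4.0 * OMEGA_GAMMA)  # baryon loading R
        # (a^2 H / 100 km/s/Mpc)^2 including the toy early dark energy
        e2 = OMEGA_M * a + OMEGA_R + a**4 * ede_density(a, f_ede, a_c)
        return 1.0 / math.sqrt(3.0 * (1.0 + r) * e2)

    # Composite Simpson's rule (n_steps must be even).
    step = A_DRAG / n_steps
    total = integrand(0.0) + integrand(A_DRAG)
    for i in range(1, n_steps):
        total += (4 if i % 2 else 2) * integrand(i * step)
    return C_OVER_100 * total * step / 3.0


baseline = sound_horizon_mpc()
for f in (0.05, 0.10):
    shrunk = sound_horizon_mpc(f_ede=f)
    print(f"f_ede = {f:.0%} at z_c = 3500: r_s shrinks by {100 * (1 - shrunk / baseline):.1f}%")
```

Setting f_ede = 0 recovers the standard calculation; any positive early component can only raise H(z) and therefore only shrink r_s.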

Of course, this isn’t an exhaustive list; one could always choose any number of classes of new physics, from inflationary add-ons to modifying Einstein’s theory of General Relativity, to potentially explain this controversy. But in the absence of compelling observational evidence for one particular scenario, we have to look at the ideas that could be feasibly tested in the near-term future.

The immediate problem with most solutions you can concoct for this puzzle is that the data from each of the two main techniques (the distance ladder technique and the early relics technique) already rule out almost all of them. If the five scenarios for new physics you just read seem like desperate theorizing, there’s a good reason for that: unless one of the two techniques has a hitherto-undiscovered fundamental flaw, some type of new physics must be at play.

Based on the improved observations that are coming in, as well as novel scientific instruments that are presently being designed and built, we can fully expect the tension in these two measurements to reach the “gold standard” 5-sigma significance level within a decade. We’ll all keep looking for errors and uncertainties, but it’s time to seriously consider the fantastic: maybe this really is an omen that there’s more to the Universe than we presently realize.

Ethan Siegel is the author of Beyond the Galaxy and Treknology. You can pre-order his third book, currently in development: the Encyclopaedia Cosmologica.