How many blue dots do you see? The science of why we overinflate our problems.

Any one of us could easily compile a list of things that are wrong in our world. These are real problems and they do matter. Still, looking at the data objectively, there are lots of things that are getting better. Real strides have been made in longevity and in reducing famine, violence, and the release of particulate matter into the atmosphere, among other things, as Steven Pinker explains in his eye-opening TED2018 talk. So how come we feel like we never get anywhere, as if for every step forward there are two steps back? Part of it is that progress is uneven: Things get better for some as they get worse for others. Part of it is that bad news is more compelling and thus more often reported than good news. But part of it is due to a common psychological phenomenon called “prevalence-induced concept change.” There’s a new study from Harvard that documents what it is and how it works.

Meet prevalence-induced concept change

Co-author Daniel Gilbert explains to The Harvard Gazette that, “as we reduce the prevalence of a problem, such as discrimination, for example, we judge each new behavior in the improved context that we have created.” We actually expand our definition of the problem to encompass more subtle variants. As Gilbert says, “When problems become rare, we count more things as problems. Our studies suggest that when the world gets better, we become harsher critics of it, and this can cause us to mistakenly conclude that it hasn’t actually gotten better at all. Progress, it seems, tends to mask itself.”

It’s not strictly a flaw in the way we think. As the study says, “When yellow bananas become less prevalent, a shopper’s concept of ‘ripe’ *should* expand to include speckled ones.” On the other hand, “when violent crimes become less prevalent, a police officer’s concept of ‘assault’ *should not* expand to include jaywalking.” (Our emphasis.)

The study’s experiments

Gilbert and co-author David Levari devised three series of tests. They presented study participants with a range of stimuli and asked them to identify those that were examples of a particular thing.

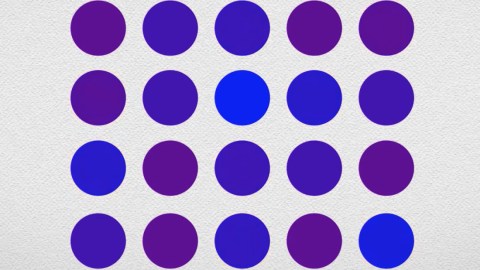

Blue dot or purple dot?

The subjects were shown a series of 1,000 dots and asked to identify the blue ones. After 200 trials, the researchers began reducing the number of blue dots for another 200 trials. As the number of truly blue dots fell, the subjects judged more and more of the remaining dots to be blue. A second series was run, this time warning subjects that the reduction in blue dots would likely make them classify more bluish-purple dots as simply blue. In spite of this warning, the effect persisted.
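For readers who like to tinker, here is a toy simulation of how such a shift could arise. It is only a sketch under our own assumptions, not the researchers' model or data: we imagine an observer who calls a dot "blue" whenever it looks bluer than the average of the last 50 dots seen. The hue scale, the 50-dot memory, the 6 percent blue rate in the second phase, and the function name `simulate` are all invented for illustration.

```python
import random
from collections import deque


def simulate(phases, window=50, seed=0):
    """Toy model (our assumption, not the study's): the observer calls a dot
    blue if its hue exceeds the mean hue of the last `window` dots.

    `phases` is a list of (blue_prevalence, n_trials) pairs. Returns, for each
    phase, the share of borderline purple dots that the observer called blue.
    """
    rng = random.Random(seed)
    recent = deque([0.5], maxlen=window)  # start with a neutral memory
    rates = []
    for blue_prevalence, n_trials in phases:
        borderline_seen = 0
        borderline_called_blue = 0
        for _ in range(n_trials):
            # Hue runs from 0.0 (very purple) to 1.0 (very blue);
            # 0.5 is the objective boundary between the two.
            if rng.random() < blue_prevalence:
                hue = rng.uniform(0.5, 1.0)
            else:
                hue = rng.uniform(0.0, 0.5)
            criterion = sum(recent) / len(recent)  # relative, drifting standard
            called_blue = hue > criterion
            # Track objectively purple dots that sit near the boundary.
            if 0.35 <= hue < 0.5:
                borderline_seen += 1
                if called_blue:
                    borderline_called_blue += 1
            recent.append(hue)
        rates.append(borderline_called_blue / borderline_seen)
    return rates


# 200 trials with half the dots blue, then 800 trials with blue dots made rare.
early_rate, late_rate = simulate([(0.5, 200), (0.06, 800)])
print(f"borderline dots called blue, blue common: {early_rate:.2f}")
print(f"borderline dots called blue, blue rare:   {late_rate:.2f}")
```

In this sketch the observer never changes the rule being applied; only the recent context changes. Once blue dots become rare, the running average drifts toward purple, and dots that were once passed over start to clear the bar.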

Do I scare you?

Looking to see if more complex images elicited the same response, the researchers ran a series of trials with faces. The results were essentially the same as with the “blue” dots: The fewer threatening faces subjects were shown, the more their definition of a threat expanded to include faces they had previously seen as benign.

Is this research proposal ethical?

Subjects in a third series of tests were asked to imagine themselves members of an institutional review board charged with assessing the ethical issues raised by a collection of research proposals. As with the dots and faces, the fewer clearly unethical proposals they were shown, the more their view of what counted as unethical expanded.

Where prevalence-induced concept change is helpful

In some situations, this phenomenon is appropriate and helpful. Gilbert cites the example of ER personnel performing triage on incoming patients. “If the ER is full of gunshot victims and someone comes in with a broken arm, the doctor will tell that person to wait.” In this situation, what qualifies as an emergency is someone who’s been shot. “But imagine one Sunday where there are no gunshot victims. Should that doctor hold her definition of ‘needing immediate attention’ constant [that is, someone who’s been shot] and tell the guy with the broken arm to wait anyway? Of course not. She should change her definition based on this new context.” In this context, it makes perfect sense to promote the urgency of the lesser injury.

Where it’s decidedly not

Gilbert notes, on the other hand, that you wouldn’t want a radiologist, having eliminated everything he or she considered to be a tumor, to keep radiating smaller and smaller tissue imperfections against the now-tumor-free context. It’s also a problem for artists of all sorts, who can become maddeningly obsessed with ever-tinier imperfections once their creation is mostly complete. And, of course, it’s hard to solve entrenched, long-term problems without becoming demoralized about all that remains to be done, a fatigue that could be at least partially overcome with an appreciation for what’s been achieved.

What to do with this information

No one is claiming that things in the world are always going to be getting better, and certainly, progress can be frustratingly uneven. Pinker warns that anyone pointing out the progress that has been made may find themselves under attack for believing progress is a continual, inexorable forward march. It’s obviously not.

The Harvard study’s aim is to help good people not fall into this common psychological trap. The authors are concerned especially with organizations attempting to do good against heavy odds that make forward movement difficult and slow, concluding, “well-meaning agents may sometimes fail to recognize the success of their own efforts, simply because they view each new instance in the decreasingly problematic context that they themselves have brought about. Although modern societies have made extraordinary progress in solving a wide range of social problems, from poverty and illiteracy to violence and infant mortality, the majority of people believe that the world is getting worse. The fact that concepts grow larger when their instances grow smaller may be one source of that pessimism.”