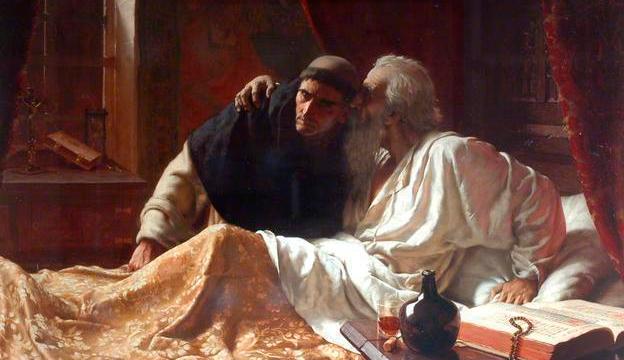

Why Aristotle didn’t invent modern science

Credit: Peter Macdiarmid via Getty Images

- Modern science requires scrutinizing the tiniest of details and an almost irrational dedication to empirical observation.

- Many scientists believe that theories should be “beautiful,” but appeals to beauty are forbidden in official scientific argument.

- Neglecting beauty would be a step too far for Aristotle.

Modern science has done astounding things: sending probes to Pluto, discerning the nature of light, vaccinating the globe. Its power to plumb the world’s inner workings, many scientists and philosophers of science would say, hinges on its exacting attention to empirical evidence. The ethos guiding scientific inquiry might be formulated so: “Credit must be given to theories only if what they affirm agrees with the observed facts.”

Those are the words of the Greek philosopher Aristotle, writing in the fourth century BCE. Why, then, was it only during the Scientific Revolution of the 17th century, two thousand years later, that science came into its own? Why wasn’t it Aristotle who invented modern science?

The answer is, first, that modern science attends to a different kind of observable fact than the sort that guided Aristotle. Second, modern science attends with an intensity — indeed an unreasonable narrow-mindedness — that Aristotle would have found to be more than a little unhinged. Let’s explore those two ideas in turn.

Excruciating minutiae

In 1915, Albert Einstein proposed a new theory of gravitation: the general theory of relativity. It told a story radically different from the prevailing Newtonian theory; gravity, according to Einstein, was not a force but rather the manifestation of matter’s propensity to travel along the straightest possible path through twisted spacetime. Relativity revised the notion of gravitation on the grandest conceptual scale, but testing it required the scrutiny of minutiae.

When Arthur Eddington sought experimental evidence for the theory by measuring gravity’s propensity to bend starlight, he photographed the same star field twice, once in the night sky and once in close proximity to the eclipsed sun. He was looking for a slight displacement in the positions of the stars that would reveal the degree to which the sun’s mass deflected their light. The change in position amounted to a fraction of a millimeter on his photographic plates. In that minuscule discrepancy lay the reason to accept a wholly new vision of the nature of the forces that shape galaxies.

Aristotle would not have thought to look in these places, at these diminutive magnitudes. Even the pre-scientific thinkers who believed that the behavior of things was determined by their microscopic structure did not believe it was possible for humans to discern that structure. When they sought a match between their ideas and the observed facts, they meant the facts that any person might readily encounter in the world around them: the gross motions of cannonballs and comets; the overall attunement of animals and their environs; the tastes, smells, and sounds that force themselves on our sensibilities without asking our permission. They were looking in the wrong place. The clues to the deepest truths have turned out to be deeply hidden.


Even in those cases where the telling evidence is visible to the unassisted eye, the effort required to gather it can be monumental. Charles Darwin spent nearly five years sailing around the world on the Beagle, a 90-foot-long ship, recording the sights and sounds that would prompt his theory of evolution by natural selection. Following in his footsteps, the Princeton biologists Rosemary and Peter Grant have spent nearly 50 years visiting the tiny Galápagos island of Daphne Major every summer to observe the local finch populations. In so doing, they witnessed the creation of a new species.

Similarly excruciating demands are made by many other scientific projects, each consumed with the hunt for subtle detail. The LIGO experiment to measure gravitational waves commenced in the 1970s, was nearly shut down in the 1980s, began operating its detectors only in 2002, and then for well over a decade found nothing. Upgraded machinery at last revealed the waves in 2015. By then, some of the scientists who had devoted their careers to LIGO had retired from their long-time university positions.

The “iron rule” of modern science

What pushes scientists to undertake these titanic efforts? That question brings me to the second way in which modern science’s attitude to evidence differs from Aristotle’s. There is something about the institutions of science, as the philosopher and historian Thomas Kuhn wrote, that “forces scientists to investigate some part of nature in a detail and depth that would otherwise be unimaginable”. That something is an “iron rule” to the effect that, when publishing arguments for or against a hypothesis, only empirical evidence counts. That is to say, the only kind of argument that is allowed in science’s official organs of communication is one that assesses a theory according to its ability to predict or explain the observable facts.

Aristotle said that evidence counts, but he did not say that only evidence counts. To get a feel for what that one extra word adds, and why it marks one of modern science’s most important ingredients, let me return to Eddington’s attempt to test Einstein’s theory by photographing stars during a solar eclipse.

Eddington was himself as much of a theoretical as an experimental physicist. He was struck by the mathematical beauty of Einstein’s theory, which he took as a sign of its superiority to the old, Newtonian physics. He might have devoted himself to promoting relativity theory on these grounds, proselytizing its aesthetic merits with his elegant writing style and his many scientific connections. But in scientific argument, only empirical evidence counts. To appeal to a theory’s beauty is to transgress this iron rule.

If Eddington was to advocate for Einstein, he would have to do so with measurements. Consequently, he found himself on a months-long expedition to Africa, where he and his collaborators sweated over their equipment day after day while praying for clear skies. In short, the iron rule forced Eddington to put beauty aside and to get on the boat. That is how scientists are pushed to hunt down the fine-grained, often elusive observations that endow science with its extraordinary power.

Irrational but effective

Though it may be a resounding success, there is something very peculiar about the iron rule. For Eddington and many other physicists, beauty is an important, even a crucial, consideration in determining the truth: “We would not accept any theory as final unless it were beautiful,” wrote the Nobelist Steven Weinberg.

At the same time, the iron rule stipulates that beauty may play no part in scientific argument, or at least none in official, written scientific argument. The rule tells scientists, then, to ignore what they take to be an immensely valuable criterion for assessing theories. That seems oddly, even irrationally, narrow-minded. It turns out that science’s knowledge-making prowess is owed in great part to a kind of deliberate blindness: an unreasonable insistence that inquirers into nature consider nothing but observed fact.

Michael Strevens writes about science, understanding, complexity, and the nature of thought, and teaches philosophy at New York University. His most recent book, The Knowledge Machine (Liveright, 2020), sets out to explain how science works so well and why it took so long to get it right.