As generative AI transforms society, leaders must model its responsible use: fostering a collaborative culture, setting realistic guidelines, encouraging exploration, ensuring data privacy, and demonstrating effective AI practices to guide their teams.

As generative AI evolves, it raises ethical concerns about bias, privacy, and copyright that call for awareness and responsible use. In a video lesson, Professor Ethan Mollick discusses how to navigate these challenges in professional contexts.

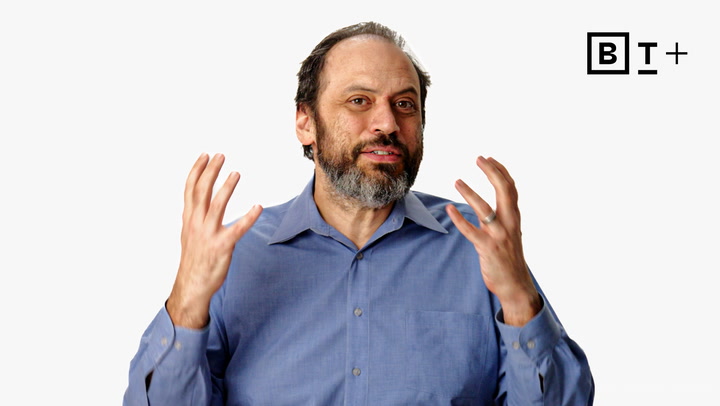

In this video lesson, Professor Ethan Mollick discusses navigating the unpredictable nature of large language models, emphasizing the importance of understanding their limitations, managing potential misinformation, and continuously updating one’s knowledge of AI’s evolving capabilities.

Professor Ethan Mollick uses centaurs and cyborgs as metaphors for two ways of integrating generative AI into work: the Centaur Model divides tasks clearly between human and AI, while the Cyborg Model blends human and AI effort throughout a task. Both aim to enhance performance and innovation.

In a video lesson, Professor Ethan Mollick outlines how to effectively integrate generative AI into your workflow, emphasizing the importance of human oversight, contextual guidance, and the proactive exploration of AI’s capabilities while remaining aware of its limitations.

As generative AI sparks diverse opinions about its implications for humanity, Professor Ethan Mollick suggests shifting our focus to what AI can do and how it might be applied, emphasizing experimentation as a way to build our skills and foster a responsible partnership with the technology.

Paradigm shifts are not confined to science, as with those introduced by Copernicus, Newton, and Darwin; they also reshape economies. Innovation consultant Rita McGrath highlights two emerging signals, the rise of stakeholder capitalism and growing consumer-protection concerns, and urges businesses to adapt to them for long-term success.

Businesses engaging with AI must recognize their profound responsibilities to society. Because AI's influence on privacy and decision-making can reshape industries and everyday life, anticipating its consequences requires a broad understanding of many fields.

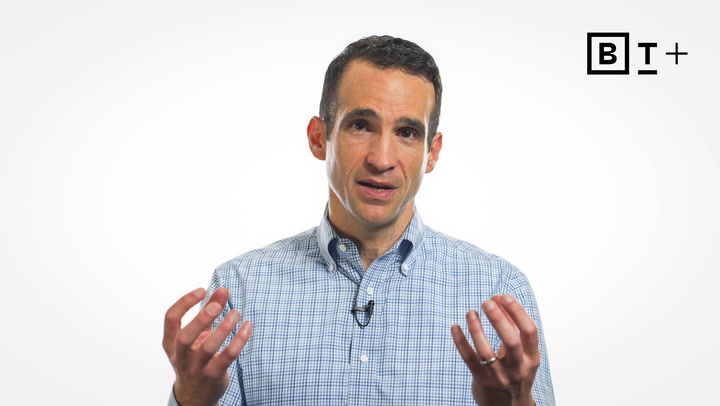

In a video lesson, Professor Yuval Harari emphasizes the need for safeguards against AI's potential to undermine public trust and democratic dialogue. He advocates that AI systems disclose that they are AI and that corporations be held accountable, so that misinformation can be countered while genuine human expression is preserved.

Professor Yuval Harari discusses how AI's relentless, "always-on" nature contrasts with the human need for rest, potentially disrupting our daily rhythms, privacy, and decision-making as power shifts from humans to machines.

A recent study found that adults engage with their phones every ten minutes. Author Nir Eyal cautions against manipulative app designs and proposes a "regret test" for judging their ethical effect on user habits: if users knew everything the designer knows, would they still take the intended action, and would they regret it?