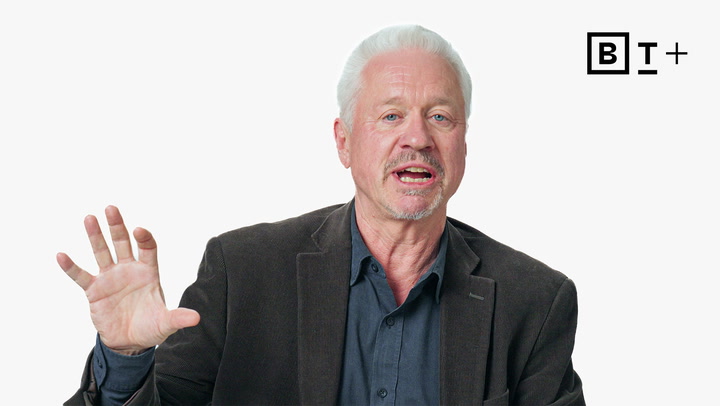

Professor Michael Watkins emphasizes that while AI can drive business value, leaders must prioritize ethical oversight, employee empathy, and proactive measures to mitigate risks like bias and job displacement in an AI-driven workplace.

AI is a powerful collaborator that requires human oversight, clear roles, and governance to ensure responsible use; Professor Michael Watkins outlines five principles for designing effective human-AI hybrid systems that adapt and improve while maintaining ethical standards.

AI has evolved quietly over decades, moving from background tasks to advanced capabilities such as content creation and decision-making. In this video lesson, Professor Michael Watkins explains why understanding that progression is crucial for adapting to the workplace dynamics AI is shaping.

Astronauts like Chris Hadfield and Scott Parazynski exemplify risk mitigation, and their contingency-planning skills apply to a range of challenges on Earth, from budgeting to managing Fortune 500 companies.

FutureThink CEO Lisa Bodell emphasizes that evaluating risks and clearly communicating the criteria for smart versus stupid risks empowers decision-making. She urges organizations to define the essential information needed to pursue opportunities and to establish clear boundaries for acceptable risk.

Professor Michael Watkins emphasizes that organizations should be analyzed by focusing on key components—strategy, structure, systems, talent, incentives, and culture—to identify interdependencies and drive improvement, similar to how one would examine an airplane engine by its essential parts.

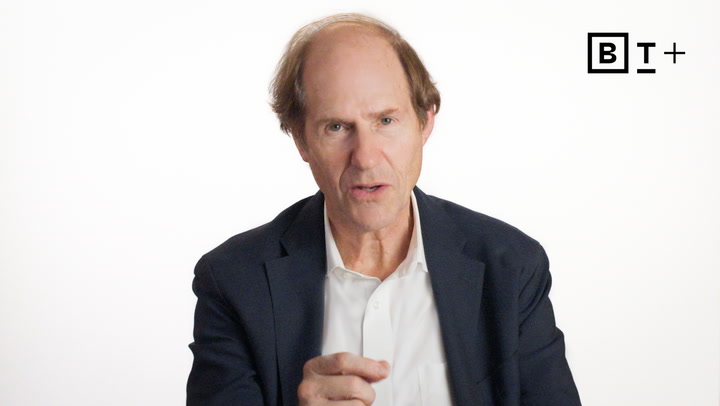

In a video lesson, Professor Cass Sunstein discusses three types of designers—manipulative, naive, and human-centered—highlighting how the latter prioritizes user experience by minimizing “sludge” and fostering customer satisfaction.

The “fail fast” mantra, while popular among entrepreneurs, can leave people unprepared for success: it often distracts from planning for positive outcomes and neglects the realities faced by those without safety nets. Strategic preparation is needed for both failure and success.

In a video lesson, Professor Yuval Noah Harari emphasizes that AI development, like a child learning to walk, requires self-correcting mechanisms and collaboration among institutions to manage risks and address dangers as they arise.

Professor Yuval Noah Harari discusses how AI’s relentless, “always-on” nature contrasts with the human need for rest, potentially disrupting our daily rhythms, privacy, and decision-making as power shifts from humans to machines.

As AI rapidly transforms our reality and reshapes engagement with information, Professor Yuval Noah Harari urges us to pause and critically consider the implications of coexisting with non-human intelligence, emphasizing the need for responsible leadership and safeguarding our humanity.